1) characteristics of devices used in the network;

2) the network operating system used;

3) the method of physically connecting network nodes with communication channels;

4) the method of propagating signals over the network.

60. Standard Ethernet technology uses...

1) coaxial cable;

2) linear topology;

3) ring topology;

4) carrier sensing access;

5) token passing;

6) fiber optic cable;

61. List the ways in which a workstation can be physically connected to the network.

1) using network adapter and cable outlet

2) using a hub

3) using a modem and a dedicated telephone line

4) using the server

62. Local networks cannot be physically interconnected using...

1) servers

2) gateways

3) routers

4) hubs (concentrators)

63. What is the main disadvantage of the ring topology?

1. high network cost;

2. low network reliability;

3. high cable consumption;

4. low noise immunity of the network.

64. For which topology is the statement true: “The failure of a computer does not disrupt the operation of the entire network”?

1) basic star topology

2) basic “bus” topology

3) basic “ring” topology

4) the statement is not true for any of the basic topologies

65. What is the main advantage of the star topology?

1. low network cost;

2. high reliability and controllability of the network;

3. low cable consumption;

4. good network noise immunity.

66. What topology and access method are used in Ethernet networks?

1) bus and CSMA/CD

2) bus and token passing

3) ring and token passing

4) bus and CSMA/CA

67. What network characteristics are determined by the choice of network topology?

1. equipment cost

2. network reliability

3. subordination of computers in the network

4. network expandability

68. What is the main advantage of the token passing access method?

- no collisions

- simplicity of technical implementation

- low cost of equipment

Arrange the stages of data exchange in networked computer systems in order (the digit after each option is its position in the sequence):

1) data transformation in the process of moving from the upper layer to the lower one - 1

2) data transformation as a result of moving from the lower layer to the upper ones - 3

3) transportation to the recipient computer - 2

70. Which protocol is the main one for transmitting hypertext on the Internet?

2) TCP/IP

3) NetBIOS

71. What is the name of a device that provides a domain name upon request based on an IP address and vice versa:

1) DFS server

2) host computer

3) DNS server

4) DHCP server

72. The DNS protocol establishes correspondence...

1) IP addresses with switch port

2) IP addresses with domain address

3) IP addresses with MAC address

4) MAC addresses with domain address

73. What IP addresses cannot be assigned to hosts on the Internet?

1) 172.16.0.2;

2) 213.180.204.11;

3) 192.168.10.255;

4) 169.254.141.25
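As a rough cross-check for question 73, Python's standard `ipaddress` module can report whether an address is globally routable. This is only a sketch: the helper name `is_internet_routable` is hypothetical, and the classification follows the module's built-in tables of reserved ranges rather than any rule stated in the question itself.

```python
import ipaddress


def is_internet_routable(address: str) -> bool:
    """Return True if the ipaddress module treats the address as globally routable."""
    return ipaddress.ip_address(address).is_global


# The four candidate addresses from the question.
candidates = ["172.16.0.2", "213.180.204.11", "192.168.10.255", "169.254.141.25"]
routable = {address: is_internet_routable(address) for address in candidates}

for address, ok in routable.items():
    print(address, "globally routable" if ok else "reserved (private or link-local)")
```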

A unique 32-bit sequence of binary digits that identifies a computer on a network is called...

1) MAC address

2) URL;

3) IP address;

4) frame;

Which identifier or identifiers are extracted from an IP address using a subnet mask?

1) networks

2) network and node

3) node

4) adapter
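The role of the subnet mask can be illustrated with a short sketch using Python's standard `ipaddress` module. The function name `split_address` and the sample address and mask are assumptions chosen for illustration; they do not come from the question.

```python
import ipaddress


def split_address(address: str, mask: str):
    """Split an IPv4 address into its network identifier and host (node) number."""
    iface = ipaddress.ip_interface(f"{address}/{mask}")
    # Bits covered by the mask form the network identifier.
    network_id = iface.network.network_address
    # Bits not covered by the mask (the host mask) form the node number.
    host_id = int(iface.ip) & int(iface.network.hostmask)
    return network_id, host_id


# Hypothetical example values.
network_id, host_id = split_address("192.168.10.37", "255.255.255.0")
print("network:", network_id, "node:", host_id)
```

With a 255.255.255.0 mask, the first three octets identify the network and the last octet identifies the node.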

76. For each server connected to the Internet, the following addresses are set:

1) digital only;

2) domain only;

3) digital and domain;

4) addresses are determined automatically;

77. At the network layer of the OSI model...

1) erroneous data is retransmitted;

2) the message delivery route is determined;

3) programs that will carry out interaction are determined;

78. Which protocol is used to determine the physical MAC address of a computer corresponding to its IP address?

The OSI model includes _____ layers of interaction

1) seven

2) five

3) four

4) six

80. What class of network does an organization with 300 computers need to register to access the Internet?

81. How does the TCP protocol differ from the UDP protocol?

1) uses ports when working

2) establishes a connection before transmitting data

3) guarantees delivery of information

82. Which of the following protocols are located at the network layer of the TCP/IP stack?

Reliability and security

One of the initial goals of creating distributed systems, which include computer networks, was to achieve greater reliability compared to individual computers.

It is important to distinguish several aspects of reliability. For technical devices, reliability indicators such as mean time between failures, probability of failure, and failure rate are used. However, these indicators are suitable only for assessing the reliability of simple elements and devices that can be in just two states: operational or inoperative. Complex systems consisting of many elements may, in addition to these two states, have intermediate states that these indicators do not take into account. For this reason, a different set of characteristics is used to assess the reliability of complex systems.

Availability, or the availability factor, refers to the fraction of time during which a system can be used. Availability can be improved by introducing redundancy into the system structure: key elements of the system must exist in several copies so that if one of them fails, the others keep the system functioning.

For a system to be considered highly reliable, it must at least have high availability, but this is not enough. It is also necessary to ensure the integrity of data and protect it from distortion. In addition, data consistency must be maintained: for example, if several copies of data are stored on several file servers to increase reliability, the copies must be kept identical at all times.

Since the network operates by transmitting packets between end nodes, one characteristic of reliability is the probability of delivering a packet to the destination node without distortion. Along with this characteristic, other indicators can be used: the probability of packet loss (for any reason: a router buffer overflow, a checksum mismatch, the lack of a workable path to the destination node, etc.), the probability of distortion of an individual bit of transmitted data, and the ratio of lost packets to delivered ones.

Another aspect of overall reliability is security, that is, the ability of the system to protect data from unauthorized access. This is much more difficult to do in a distributed system than in a centralized one. In networks, messages are transmitted over communication lines that often pass through public premises in which eavesdropping equipment may be installed. Personal computers left unattended are another vulnerability. In addition, if the network has access to global public networks, there is always a potential threat of unauthorized users breaking through its security.

Another reliability characteristic is fault tolerance. In networks, fault tolerance refers to the ability of a system to hide the failure of its individual elements from the user. For example, if copies of a database table are stored simultaneously on several file servers, then users may simply not notice the failure of one of them. In a fault-tolerant system, the failure of one of its elements leads to a slight decrease in the quality of its operation (degradation), and not to a complete stop. So, if one of the file servers fails in the previous example, only the database access time increases due to a decrease in the degree of query parallelization, but in general the system will continue to perform its functions.

Reliability and quality of complex systems. No. 4, 2013

UDC 621.396.6

RELIABILITY OF A LOCAL COMPUTER NETWORK BASED ON THIN CLIENTS AND WORKSTATIONS

S. N. Polessky, M. A. Karapuzov, V. V. Zhadnov

The development of local area networks (LANs) faces two prospects: continuing to design LANs in which the subscribers are traditional “workstations” (PCs), or replacing PCs with so-called “thin clients” (hereinafter used as a synonym for “terminal station”).

The term “thin client” is increasingly used to denote a fairly wide range of devices and programs that, from the point of view of system architecture, share a common property: the ability to work in terminal mode.

The advantage of a PC over a thin client is its independence from the presence of a working network - information will be processed even at the moment of its failure, since in the case of a PC, information is processed directly by the stations themselves.

If you use a thin client, you need a terminal server. At the same time, the thin client has a minimal hardware configuration: instead of a hard drive, a DOM is used to load a local specialized operating system (OS) (DiskOnModule: a module with an IDE connector, flash memory, and a chip that implements the logic of a regular hard drive; the BIOS detects it as a regular HDD, only its size is usually 2–3 times smaller).

In some system configurations, the thin client loads the operating system over the network from the server using PXE, BOOTP, DHCP, TFTP, and Remote Installation Services (RIS). Minimal use of hardware resources is the main advantage of a thin client over a PC.

In this regard, the question arises: what is better to use for designing a LAN from a reliability point of view - a thin client or traditional PCs?

To answer this question, we will compare the reliability indicators of a typical LAN built using the “star” topology for two implementation options. In the first option, the LAN is built on thin clients, and in the second, on PCs. To simplify the assessment of LAN reliability indicators, consider a small corporate network of a department (enterprise) consisting of 20–25 standard devices.

Let’s assume that the department under study is engaged in design work using appropriate software. A typical PC-based LAN of such a department should contain workstations, a server, and a printer. All devices are connected to the network through a switch (see Fig. 1).

A typical LAN based on a thin client includes terminal stations, a server, a printer, and a terminal server, which provides users with access through the thin client to the resources necessary for work. All devices are connected to the network through a switch (Fig. 2).

Fig. 2. Connection diagram of devices in a LAN based on terminal stations

Let us formulate the failure criteria. To do this, it is necessary to determine which element failures are critical for the performance of the specified network functions. Suppose the department (enterprise) has 20 workplaces, and its workload allows two of them to be kept in reserve.

The remaining 18 workstations are used continuously throughout the working day (8 hours a day).

Based on this, the failure of more than two PCs (terminal stations) will lead to the failure of the entire LAN. A server failure, a failure of one of the terminal servers (for a thin client-only LAN), and a switch failure also result in a failure of the entire LAN. Printer failure is not critical, since the department's tasks are not directly related to its continuous use and therefore it is not taken into account when assessing reliability. The failure of the switching wire network is also not taken into account, since in both LAN implementation options the set of connections is almost the same, and the failure rate is negligible.

Failures of such PC elements as external storage device, monitor, keyboard, mouse, video card, motherboard, processor, cooling system, power supply, random access memory are critical for the PC and lead to its failure.

Taking into account the operating conditions of the LAN and the failure criteria, we will construct reliability block diagrams (RBDs) for different levels of disaggregation.

At the upper level, a set of devices is considered whose RBD is a “serial connection” group of three blocks (switch, server, switching network) and a redundant group (the working group of terminal stations or workstations).

The reliability block diagrams are shown in Fig. 3 (for the PC-based LAN) and in Fig. 4 (for the LAN based on thin clients).

At the next level of disaggregation, the set of workstations or terminal stations is considered. Its RBD is a “sliding redundancy n of m” group of twenty blocks (18 main stations are backed up by two spare stations, each of which can replace any failed main one).

At the lower level, the set of workstation elements is considered. Its RBD is a “serial connection” group of ten blocks (monitor, processor, RAM, hard drive, keyboard, mouse, power supply, motherboard, cooling system, video card).
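The three levels of the reliability block diagram can be combined numerically. The sketch below is an illustration, not the authors' calculation method: it assumes independent failures, treats the two spare stations as permanently active (a simplification of sliding redundancy), ignores the switching network as the text does, and uses made-up probabilities of failure-free operation. The function names are hypothetical.

```python
from math import comb, prod


def series_reliability(block_reliabilities):
    """Serial connection: the group works only if every block works."""
    return prod(block_reliabilities)


def k_of_n_reliability(k, n, p):
    """Probability that at least k of n identical, independent blocks work."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical probabilities of failure-free operation over some fixed period.
element = 0.995                                    # one workstation element
station = series_reliability([element] * 10)       # lower level: ten elements in series
group = k_of_n_reliability(18, 20, station)        # middle level: 18 of 20 stations needed
lan = series_reliability([0.999, 0.998, group])    # upper level: switch, server, group

print(f"station={station:.4f} group={group:.4f} lan={lan:.4f}")
```

Because two stations are in reserve, the probability that the group works is higher than the probability that 18 particular stations all work, which is why the failure criterion is “more than two stations failed”.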

Calculation of LAN reliability is carried out in two stages:

– firstly, the reliability of the elements separately is calculated (determined),

– secondly, the reliability of the LAN as a whole is calculated.

A typical diagram for calculating the reliability of a LAN, performed in IDEF0 notations, is presented in Fig. 5.

Fig. 6 shows a histogram, constructed from the data in Table 1, of the distribution of mean time between failures (MTBF, in thousand hours) of the workstation elements and the switch.

Fig. 7 shows a histogram of the distribution of mean time between failures of the LAN components.

Fig. 7. Histogram of the distribution of mean time between failures of LAN components (MTBF, thousand hours)

Table 3 shows that the availability factor for a PC-based LAN is lower than that of a similar thin client-based LAN. The mean time between failures for a thin client-based LAN is greater than that of a PC-based LAN, and the mean time to recovery is lower. This comparison shows that a LAN based on 20 terminal stations, two of which are in reserve, turns out to be more reliable than its implementation based on workstations.

Summarizing the results of the analysis, it can be argued that a more reliable type is a LAN based on terminal stations. From a practical point of view, this shows that the transition to creating a LAN based on a thin client is also advisable from a reliability standpoint.

The introduction of LANs consisting of terminal stations in combination with cloud software can significantly affect the increase in the level of automation, quality and reliability of enterprise operations.

Lecture 13. Requirements for computer networks

The most important indicators of network performance are discussed: performance, reliability and security, extensibility and scalability, transparency, support for different types of traffic, quality of service characteristics, manageability and compatibility.

Keywords: performance, response time, average, instantaneous, maximum, total throughput, transmission delay, transmission delay variation, reliability indicators, mean time between failures, probability of failure, failure rate, availability, availability factor, data integrity, data consistency, probability of data delivery, security, fault tolerance, extensibility, scalability, transparency, multimedia traffic, synchronicity, reliability, delays, data loss, computer traffic, centralized control, monitoring, analysis, network planning, Quality of Service (QoS), packet transmission delays, level of packet loss and distortion, "best effort" service.

Compliance with standards is just one of many requirements for modern networks. In this section we will focus on some others, no less important.

The most general requirement that can be placed on a network is that it provide the set of services for which it is intended: for example, access to file archives or pages of public Internet Web sites, exchange of e-mail within an enterprise or on a global scale, interactive exchange of voice messages via IP telephony, etc.

All other requirements - performance, reliability, compatibility, manageability, security, extensibility and scalability - are related to the quality of this main task. And although all of the above requirements are very important, often the concept of “quality of service” (Quality of Service, QoS) of a computer network is interpreted more narrowly: it includes only the two most important characteristics of the network - performance and reliability.

Performance

Potentially high performance is one of the main advantages of distributed systems, which include computer networks. This property is ensured by the fundamental, but, unfortunately, not always practically realizable possibility of distributing work among several computers on the network.

Key network performance characteristics:

response time;

traffic transmission speed;

throughput;

transmission delay and transmission delay variation.

Network response time is an integral characteristic of network performance from the user's point of view. This is precisely the characteristic that a user has in mind when he says: “The network is slow today.”

In general, response time is defined as the interval between the occurrence of a user request for any network service and the receipt of a response to it.

Obviously, the value of this indicator depends on the type of service that the user is accessing, on which user is accessing which server, and on the current state of network elements: the load on the segments, switches and routers through which the request passes, the server load, etc.

Therefore, it makes sense to also use a weighted average estimate of network response time, averaging this indicator over users, servers and time of day (on which network load largely depends).
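A weighted average of this kind can be sketched as follows. The function name and the sample measurements are hypothetical; each weight might stand for the number of requests observed for a given combination of user, server and time of day.

```python
def weighted_mean_response_time(samples):
    """Weighted average of response times.

    samples: list of (response_time_seconds, weight) pairs.
    """
    total_weight = sum(weight for _, weight in samples)
    if total_weight == 0:
        raise ValueError("total weight must be positive")
    return sum(time * weight for time, weight in samples) / total_weight


# Hypothetical measurements: (response time in seconds, number of requests).
samples = [(0.2, 50), (0.5, 30), (1.5, 20)]
mean_rt = weighted_mean_response_time(samples)
print(f"weighted mean response time: {mean_rt:.2f} s")
```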

Network response time usually consists of several components. In general, it includes:

request preparation time on the client computer;

the time of transmission of requests between the client and the server through network segments and intermediate communication equipment;

server request processing time;

the time for transmitting responses from the server to the client and the processing time for responses received from the server on the client computer.

Obviously, the user is not interested in decomposing the response time into its components: the end result is what matters to him. However, for a network specialist, it is very important to isolate from the total response time the components corresponding to the network stages of data processing proper, that is, data transfer from client to server through network segments and communication equipment.

Knowing the network components of response time allows you to evaluate the performance of individual network elements, identify bottlenecks and, if necessary, upgrade the network to improve its overall performance.

Network performance can also be characterized by traffic transmission speed.

The traffic transmission speed can be instantaneous, maximum and average.

the average speed is calculated by dividing the total volume of data transferred by the time of their transmission, and a sufficiently long period of time is selected - an hour, a day or a week;

instantaneous speed differs from average speed in that a very short period of time is selected for averaging - for example, 10 ms or 1 s;

maximum speed is the highest speed recorded during the observation period.
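The three kinds of speed can be computed from a series of per-interval byte counters. The sketch below assumes equal-length measurement intervals; the function names and the sample counters are hypothetical.

```python
def interval_rates(byte_counts, interval_s):
    """Speed in bit/s for each short interval (the 'instantaneous' speeds)."""
    return [count * 8 / interval_s for count in byte_counts]


def average_rate(byte_counts, interval_s):
    """Total volume of data divided by the total observation time, in bit/s."""
    return sum(byte_counts) * 8 / (len(byte_counts) * interval_s)


def peak_rate(byte_counts, interval_s):
    """Highest per-interval speed recorded during the observation period, in bit/s."""
    return max(interval_rates(byte_counts, interval_s))


# Hypothetical counters: bytes transferred in four consecutive 1-second intervals.
counts = [125_000, 250_000, 0, 125_000]
avg = average_rate(counts, 1.0)
peak = peak_rate(counts, 1.0)
print(f"average: {avg:.0f} bit/s, maximum: {peak:.0f} bit/s")
```

Shortening the interval makes the per-interval values closer to a true instantaneous speed, while lengthening the observation period smooths the average.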

Most often, when designing, configuring and optimizing a network, indicators such as average and maximum speed are used. The average speed at which traffic is processed by an individual element or the network as a whole makes it possible to evaluate the operation of the network over a long period of time, during which, due to the law of large numbers, peaks and valleys in traffic intensity compensate each other. The maximum speed allows you to evaluate how the network will cope with peak loads characteristic of special periods of operation, for example in the morning, when enterprise employees log on to the network almost simultaneously and access shared files and databases. Typically, when determining the speed characteristics of a certain segment or device, the traffic of a specific user, application or computer is not singled out in the transmitted data: the total amount of transmitted information is calculated. However, for a more accurate assessment of service quality, such detail is desirable, and recently network management systems increasingly allow this to be done.

Throughput (bandwidth) is the maximum possible speed of traffic processing, determined by the standard of the technology on which the network is built. It reflects the maximum possible amount of data transmitted by a network or part of it per unit of time.

Unlike response time or the speed at which data passes through the network, throughput is not a user-facing characteristic, since it describes the speed of internal network operations: the transfer of data packets between network nodes through various communication devices. But it directly characterizes the quality of the network's main function, message transportation, and is therefore used more often in analyzing network performance than response time or speed.

Throughput is measured in either bits per second or packets per second.

Network throughput depends both on the characteristics of the physical transmission medium (copper cable, optical fiber, twisted pair) and on the adopted data transmission method (Ethernet, Fast Ethernet, ATM technology). Throughput is often used as a characteristic not so much of a network as of the technology on which the network is built. The importance of this characteristic for a network technology is shown, in particular, by the fact that its value sometimes becomes part of the name, for example, 10 Mbit/s Ethernet, 100 Mbit/s Ethernet.

Unlike response time or traffic transmission speed, throughput does not depend on network congestion and has a constant value determined by the technologies used in the network.

In different parts of a heterogeneous network, where several different technologies are used, throughput may vary. To analyze and configure a network, it is very useful to know the throughput of its individual elements. It is important to note that, due to the sequential nature of data transmission between different network elements, the total throughput of any composite path in the network is equal to the minimum of the throughputs of the constituent elements of the route. To improve the throughput of a composite path, you need to focus on the slowest elements first. Sometimes it is useful to operate with the total network throughput, which is defined as the average amount of information transmitted between all network nodes per unit of time. This indicator characterizes the quality of the network as a whole, without differentiating it by individual segments or devices.
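The rule for a composite path can be written in a few lines. The function names and the sample route are hypothetical; the values are throughputs in Mbit/s of the successive elements along the path.

```python
def path_throughput(element_throughputs):
    """Throughput of a composite path: the minimum over its elements."""
    if not element_throughputs:
        raise ValueError("the path must contain at least one element")
    return min(element_throughputs)


def bottleneck_index(element_throughputs):
    """Position of the slowest element, the first candidate for an upgrade."""
    return element_throughputs.index(path_throughput(element_throughputs))


# Hypothetical route: Fast Ethernet segment, 10 Mbit/s segment, gigabit backbone.
route = [100, 10, 1000]
print("path throughput:", path_throughput(route), "Mbit/s")
print("bottleneck is element", bottleneck_index(route))
```

Upgrading any element other than the bottleneck leaves the path throughput unchanged.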

Transmission delay is defined as the delay between the moment data arrives at the input of any network device or part of the network and the moment it appears at the output of this device.

This performance parameter is close in meaning to the network response time, but differs in that it always characterizes only the network stages of data processing, without delays in processing by the end nodes of the network.

Typically, network quality is characterized by the maximum transmission delay and the delay variation. Not all types of traffic are sensitive to transmission delays, at least not to the delays typical of computer networks: usually they do not exceed hundreds of milliseconds and, less often, reach several seconds. Delays of this order of magnitude for packets generated by a file service, e-mail service, or print service have little impact on the quality of those services from the network user's point of view. On the other hand, the same delays for packets carrying voice or video data can significantly reduce the quality of the information provided to the user: an “echo” effect appears, some words become unintelligible, the image jitters, etc.

All of these network performance characteristics are quite independent. While network throughput is a constant, the speed of traffic can vary depending on network load, without, of course, exceeding the limit set by the throughput. So, in a single-segment 10 Mbit/s Ethernet network, computers can exchange data at speeds of 2 Mbit/s and 4 Mbit/s, but never at 12 Mbit/s.

Throughput and transmission delay are also independent parameters, so a network may have, for example, high throughput but introduce significant delays in the transmission of each packet. An example of such a situation is a communication channel formed by a geostationary satellite. The throughput of this channel can be very high, for example 2 Mbit/s, while the transmission delay is always at least 0.24 s, which is determined by the propagation speed of the signal (about 300,000 km/s) and the length of the channel (72,000 km).
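The 0.24 s figure follows directly from the two numbers given in the text. The sketch below reproduces it and, for contrast, computes the time needed to push one packet onto a 2 Mbit/s channel; the function names and the 1500-byte packet size are assumptions chosen for illustration.

```python
def propagation_delay_s(distance_km, speed_km_per_s=300_000):
    """Time for the signal to travel the length of the channel."""
    return distance_km / speed_km_per_s


def serialization_delay_s(packet_bits, throughput_bps):
    """Time to place all bits of one packet onto the channel."""
    return packet_bits / throughput_bps


# Channel length and signal speed are taken from the text.
satellite_delay = propagation_delay_s(72_000)
# Hypothetical 1500-byte packet on the 2 Mbit/s channel.
packet_time = serialization_delay_s(1500 * 8, 2_000_000)

print(f"propagation delay: {satellite_delay:.2f} s")
print(f"serialization time: {packet_time * 1000:.0f} ms")
```

Raising the channel throughput shortens only the serialization time; the propagation delay depends on distance alone, which is why the two parameters are independent.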

Reliability and security

One of the initial goals of creating distributed systems, which include computer networks, was to achieve greater reliability compared to individual computers.

It is important to distinguish several aspects of reliability.

For relatively simple technical devices, the following reliability indicators are used:

Mean time between failures;

Probability of failure;

Failure rate.

However, these indicators are suitable for assessing the reliability of simple elements and devices that can only be in two states - operational or inoperative. Complex systems consisting of many elements, in addition to states of operability and inoperability, may also have other intermediate states that these characteristics do not take into account.

To assess the reliability of complex systems, another set of characteristics is used:

Availability, or availability factor;

Data integrity;

Data consistency;

Probability of data delivery;

Security;

Fault tolerance.

Availability, or the availability factor, refers to the fraction of time during which a system can be used. Availability can be increased by introducing redundancy into the system structure: key elements of the system must exist in several copies so that if one of them fails, the others keep the system functioning.
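A common way to estimate the availability factor, which the lecture itself does not spell out, is the steady-state ratio of mean time between failures to the sum of mean time between failures and mean time to recovery. The sketch below uses that formula and also shows the effect of redundancy under the assumption that copies fail independently; the function names and the numbers are hypothetical.

```python
def availability(mtbf_hours, mttr_hours):
    """Steady-state availability: share of time the element is usable."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def redundant_availability(single_availability, copies):
    """Availability of a group that works if at least one independent copy works."""
    return 1 - (1 - single_availability) ** copies


# Hypothetical server: fails on average every 990 h, takes 10 h to restore.
single = availability(990, 10)
duplicated = redundant_availability(single, 2)

print(f"one copy: {single:.4f}, two copies: {duplicated:.4f}")
```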

For a computer system to be considered highly reliable, it must at least have high availability, but this is not enough. It is also necessary to ensure the integrity of data and protect it from distortion. In addition, data consistency must be maintained; for example, if multiple copies of data are stored on multiple file servers to increase reliability, the copies must be kept identical at all times.

Since the network operates by transmitting packets between end nodes, one of the reliability characteristics is the probability of delivering a packet to the destination node without distortion. Along with this characteristic, other indicators can be used: the probability of packet loss (for any reason: overflow of the router buffer, checksum mismatch, lack of a workable path to the destination node, etc.), the probability of corruption of a single bit of transmitted data, and the ratio of lost packets to delivered ones.

Another aspect of overall reliability is security, which is the system's ability to protect data from unauthorized access. This is much more difficult to do in a distributed system than in a centralized one. In networks, messages are transmitted over communication lines, often passing through public premises in which means of listening to the lines may be installed. Another vulnerability can be personal computers left unattended. In addition, there is always the potential threat of hacking network security from unauthorized users if the network has access to global public networks.

Another reliability characteristic is fault tolerance. In networks, fault tolerance refers to the ability of a system to hide the failure of its individual elements from the user. For example, if copies of a database table are stored simultaneously on multiple file servers, users may simply not notice that one of them fails. In a fault-tolerant system, the failure of one of its elements leads to a slight decrease in the quality of its operation (degradation), and not to a complete stop. So, if one of the file servers fails in the previous example, only the database access time increases due to a decrease in the degree of query parallelization, but in general the system will continue to perform its functions.

Extensibility and scalability

The terms "extensibility" and "scalability" are sometimes used as synonyms, but this is incorrect: each of them has a clearly defined independent meaning.

Extensibility | Scalability |

Individual network elements can be added relatively easily | Network elements can be added, though not necessarily easily |

Ease of expansion may be ensured only within certain, very limited bounds | The network can be expanded within a very wide range while maintaining its consumer properties |

Extensibility means the ability to relatively easily add individual network elements (users, computers, applications, services), increase the length of network segments and replace existing equipment with more powerful equipment. It is fundamentally important that the ease of system expansion can sometimes be ensured only within very limited bounds. For example, an Ethernet local network built on a single segment of thick coaxial cable has good extensibility in the sense that it allows you to easily connect new stations. However, such a network has a limit on the number of stations: it should not exceed 30–40. Although the network allows more stations (up to 100) to be physically connected to the segment, this often results in a sharp decrease in network performance. The presence of such a limitation is a sign of poor scalability combined with good extensibility.

Scalability means that the network allows you to increase the number of nodes and the length of connections within a very wide range without degrading network performance. To ensure network scalability, it is necessary to use additional communication equipment and structure the network in a special way. For example, a multi-segment network built using switches and routers and having a hierarchical structure of connections has good scalability. Such a network can include several thousand computers and at the same time provide each network user with the required quality of service.

Transparency

Network transparency is achieved when the network appears to users not as many individual computers interconnected by a complex system of cables, but as a single traditional computing machine with a time-sharing system. The famous slogan of Sun Microsystems, "The network is the computer", speaks precisely of such a transparent network.

Transparency can be achieved at two different levels: at the user level and at the programmer level. At the user level, transparency means that the user uses the same commands and familiar procedures to work with remote resources as he does to work with local resources. At the programmer level, transparency means that an application requires the same calls to access remote resources as it does to access local resources. Transparency at the user level is easier to achieve because all the procedural details associated with the distributed nature of the system are hidden from the user by the programmer who creates the application. Transparency at the programmer level requires that all distribution details be hidden by the network operating system.

Transparency is the ability of a network to hide details of its internal structure from the user, which simplifies work on the network.

The network must hide all the features of operating systems and differences in computer types. A Macintosh user must be able to access resources supported by a UNIX system, and a UNIX user must be able to share information with Windows 95 users. The vast majority of users do not want to know anything about internal file formats or UNIX command syntax. An IBM 3270 terminal user must be able to exchange messages with users of networked personal computers without having to delve into the secrets of difficult-to-remember addresses.

The concept of transparency applies to various aspects of the network. For example, location transparency means that the user is not required to know where software and hardware resources such as processors, printers, files, and databases are located. A resource name should not include information about its location, so names like mashinel:prog.c or \\ftp_serv\pub are not transparent. Likewise, movement transparency means that resources can move freely from one computer to another without changing their names. Another possible aspect is parallelism transparency: computations are parallelized automatically, without the programmer's involvement, and the system itself distributes the parallel branches of an application among processors and network computers. At present, few computer networks can be said to be fully transparent; transparency is rather a goal that developers of modern networks strive for.

Support for different types of traffic

Computer networks were originally intended for sharing access to computer resources: files, printers, and so on. The traffic created by these traditional network services has its own characteristics and differs significantly from message traffic in telephone networks or, for example, in cable television networks. In the 1990s, however, multimedia traffic, which carries speech and video in digital form, made its way into computer networks. Computer networks began to be used for video conferencing, video-based training, and similar purposes. Naturally, the real-time transmission of multimedia traffic requires different algorithms and protocols and, accordingly, different equipment. Although the share of multimedia traffic is still small, it has already begun to penetrate both global and local networks, and this process will evidently continue.

The main feature of the traffic generated during the dynamic transmission of voice or image is the presence of strict requirements for the synchronization of transmitted messages. For high-quality reproduction of continuous processes, such as sound vibrations or changes in light intensity in a video image, it is necessary to obtain measured and encoded signal amplitudes at the same frequency with which they were measured on the transmitting side. If messages are delayed, distortion will occur.

At the same time, computer data traffic is characterized by an extremely uneven intensity of messages entering the network and by the absence of strict requirements for synchronized delivery of those messages. For example, a user working with text on a remote disk generates a random flow of messages between the remote and local computers, depending on the user's actions, and delivery delays within certain limits (quite wide from a computer's point of view) have little effect on the quality of service for the network user. All computer communication algorithms, the corresponding protocols, and the communication equipment were designed for precisely this bursty ("pulsating") traffic, so the need to transmit multimedia traffic requires fundamental changes in both protocols and equipment. Today, almost all new protocols support multimedia traffic to one degree or another.

Combining traditional computer traffic and multimedia traffic in one network is particularly difficult. Transmitting only multimedia traffic over a computer network, although it involves certain difficulties, is less of a problem. The coexistence of two types of traffic with opposing quality of service requirements is a much harder task. Typically, computer network protocols and equipment treat multimedia traffic as optional, so the quality of its service leaves much to be desired. Today, great effort is spent on creating networks that do not infringe on the interests of either type of traffic. The closest to this goal are networks based on ATM technology, whose developers took the coexistence of different types of traffic on the same network into account from the outset.

Controllability

Ideally, network management tools are a system that monitors, controls and manages every element of the network - from the simplest to the most complex devices, and such a system views the network as a single whole, and not as a disparate collection of individual devices.

Network controllability implies the ability to centrally monitor the status of the main network elements, identify and resolve problems that arise during network operation, analyze performance, and plan network development.

A good management system monitors the network and, when it detects a problem, initiates some action, corrects the situation, and notifies the administrator of what happened and what steps were taken. At the same time, the control system must accumulate data on the basis of which network development can be planned. Finally, the control system must be independent of the manufacturer and have a user-friendly interface that allows you to perform all actions from one console.

When solving tactical problems, administrators and technical staff face the daily challenge of keeping the network running. These tasks call for quick solutions: network maintenance personnel must respond promptly to fault reports coming from users or from automatic network management tools. Over time, general issues of performance, network configuration, failure handling, and data security become apparent and require a strategic approach, that is, network planning. Planning also includes forecasting changes in user requirements for the network and addressing the use of new applications, new network technologies, and so on.

The need for a management system is especially pronounced in large networks: corporate or global. Without a management system, such networks require the presence of qualified operating specialists in every building in every city where network equipment is installed, which ultimately leads to the need to maintain a huge staff of maintenance personnel.

Currently, there are many unsolved problems in the field of network management systems. There are clearly not enough truly convenient, compact and multi-protocol network management tools. Most existing tools do not manage the network at all, but merely monitor its operation. They monitor the network, but do not take active actions if something has happened or might happen to the network. There are few scalable systems capable of serving both department-scale networks and enterprise-scale networks - many systems manage only individual network elements and do not analyze the network’s ability to perform high-quality data transfer between end users.

Compatibility

Compatibility, or integrability, means that the network can include a variety of software and hardware; that is, different operating systems supporting different communication protocol stacks can coexist in it, along with hardware and applications from different manufacturers. A network made up of elements of different types is called heterogeneous, and a heterogeneous network that works without problems is called integrated. The main way to build integrated networks is to use modules made in accordance with open standards and specifications.

Quality of service

Quality of Service (QoS) quantifies the likelihood that a network will transmit a given flow of data between two nodes in accordance with the needs of an application or user.

For example, when voice traffic is transmitted through a network, quality of service most often means a guarantee that voice packets will be delivered with a delay of no more than N ms and a delay variation of no more than M ms, and that the network will maintain these characteristics with a probability of 0.95 over a certain time interval. In other words, for an application that carries voice traffic, it is important that the network guarantees this particular set of quality of service characteristics. A file service needs guarantees of average bandwidth and the ability to raise it briefly to some maximum level so that bursts can be transmitted quickly. Ideally, the network should guarantee specific quality of service parameters formulated for each individual application. However, for obvious reasons, the QoS mechanisms that exist or are being developed are limited to a simpler problem: guaranteeing certain averaged requirements specified for the main types of applications.
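The voice guarantee described above can be checked against measured data. The following is a minimal sketch, not a standard algorithm: the function name `meets_voice_sla` and its parameters are hypothetical, and delay variation is approximated as the change in delay between consecutive packets.

```python
def meets_voice_sla(delays_ms, max_delay_ms, max_jitter_ms, probability=0.95):
    """Return True if at least `probability` of the packets satisfy both bounds.

    delays_ms      -- measured one-way delays of consecutive packets, in ms
    max_delay_ms   -- the bound N on the delay of a single packet
    max_jitter_ms  -- the bound M on delay variation
    """
    if not delays_ms:
        raise ValueError("no delay samples")

    compliant = 0
    previous = None
    for delay in delays_ms:
        # Assumption: jitter is the absolute change relative to the previous packet.
        jitter = 0.0 if previous is None else abs(delay - previous)
        if delay <= max_delay_ms and jitter <= max_jitter_ms:
            compliant += 1
        previous = delay

    return compliant / len(delays_ms) >= probability


steady = [20.0] * 20                 # every packet arrives after 20 ms
unstable = [20.0, 200.0] * 10        # delay swings between 20 ms and 200 ms
print(meets_voice_sla(steady, max_delay_ms=150, max_jitter_ms=30))
print(meets_voice_sla(unstable, max_delay_ms=150, max_jitter_ms=30))
```

The first series satisfies the guarantee and the second does not, because both the delay bound and the variation bound are violated on most packets.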

Most often, the parameters that appear in various definitions of quality of service regulate the following network performance indicators:

Bandwidth;

Packet transmission delays;

Level of packet loss and distortion.

Quality of service is guaranteed for some data stream. Recall that a data flow is a sequence of packets that have some common characteristics, for example, the address of the source node, information identifying the type of application (TCP/UDP port number), etc. Concepts such as aggregation and differentiation apply to flows. Thus, a data stream from one computer can be represented as a collection of streams from different applications, and streams from computers of one enterprise are aggregated into one data stream for a subscriber of some service provider.
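Differentiation and aggregation of flows can be illustrated with a short sketch. The packet representation (a dictionary with `src`, `proto`, `port`, and `size` keys) and both function names are assumptions made for this example only.

```python
from collections import defaultdict


def group_into_flows(packets):
    """Differentiate traffic: one flow per (source address, protocol, port)."""
    flows = defaultdict(list)
    for packet in packets:
        key = (packet["src"], packet["proto"], packet["port"])
        flows[key].append(packet)
    return dict(flows)


def aggregate_by_source(flows):
    """Aggregate application flows into one flow per source computer (bytes)."""
    totals = defaultdict(int)
    for (src, _proto, _port), flow_packets in flows.items():
        totals[src] += sum(p["size"] for p in flow_packets)
    return dict(totals)


packets = [
    {"src": "10.0.0.1", "proto": "TCP", "port": 80, "size": 1500},
    {"src": "10.0.0.1", "proto": "UDP", "port": 5060, "size": 200},
    {"src": "10.0.0.1", "proto": "TCP", "port": 80, "size": 500},
    {"src": "10.0.0.2", "proto": "TCP", "port": 25, "size": 700},
]
flows = group_into_flows(packets)
print(len(flows))                  # 3 application-level flows
print(aggregate_by_source(flows))  # {'10.0.0.1': 2200, '10.0.0.2': 700}
```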

Quality of service mechanisms do not create capacity on their own. The network cannot give more than it has. So the actual capacity of communication channels and transit communication equipment is the network resource that serves as the starting point for QoS mechanisms. QoS mechanisms only manage the distribution of available bandwidth according to application requirements and network settings. The most obvious way to reallocate network bandwidth is to manage packet queues.

Since data exchanged between two end nodes passes through a number of intermediate network devices such as hubs, switches, and routers, QoS support requires the cooperation of all network elements along the traffic path, that is, end-to-end (e2e) support. Any QoS guarantee is only as good as the weakest element in the chain between sender and recipient. It is therefore important to understand that QoS support in only one network device, even a backbone device, can improve the quality of service only slightly or may not affect the QoS parameters at all.

The implementation of QoS support mechanisms in computer networks is a relatively new trend. For a long time, computer networks existed without such mechanisms, mainly for two reasons. First, most applications running on the network were undemanding, meaning that packet delays or variations in average throughput over a fairly wide range did not cause a significant loss of functionality. Examples of undemanding applications are the most common applications in 1980s networks: email and remote file copying.

Second, the bandwidth of 10 Mbit/s Ethernet networks was in many cases not in short supply. A shared Ethernet segment with 10-20 computers connected to it, which occasionally copied small text files of no more than a few hundred kilobytes, allowed the traffic of each pair of interacting computers to cross the network as quickly as the applications generating this traffic required.
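Queue management can be sketched with a strict-priority scheduler: packets of a higher-priority class (for example, voice) are always sent before packets of a lower-priority class, and packets within one class keep their arrival order. This is only one of several possible queueing disciplines; the class name `PriorityScheduler` and the numeric priorities are assumptions made for illustration.

```python
import heapq
from itertools import count


class PriorityScheduler:
    """Strict-priority output queue: a lower number means higher priority."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker that preserves arrival order

    def enqueue(self, packet, priority):
        heapq.heappush(self._heap, (priority, next(self._arrival), packet))

    def dequeue(self):
        """Return the next packet to transmit, or None if the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


scheduler = PriorityScheduler()
scheduler.enqueue("file-1", priority=1)
scheduler.enqueue("voice-1", priority=0)
scheduler.enqueue("file-2", priority=1)
scheduler.enqueue("voice-2", priority=0)

order = [scheduler.dequeue() for _ in range(4)]
print(order)  # ['voice-1', 'voice-2', 'file-1', 'file-2']
```

The scheduler does not add capacity: the same four packets leave the queue, only in a different order. Strict priority can also starve low-priority traffic, which is why practical equipment often uses weighted disciplines instead.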
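The "weakest element" rule can be expressed numerically under a simplified model in which each device or link on the path has a fixed capacity and adds a fixed delay. The function name `end_to_end` and the sample figures are hypothetical.

```python
def end_to_end(hops):
    """Estimate path characteristics from per-hop values.

    hops -- list of (bandwidth_mbps, delay_ms) tuples, one per device or link.
    Returns (bottleneck bandwidth, total delay).
    """
    if not hops:
        raise ValueError("path has no hops")
    bandwidth = min(b for b, _ in hops)   # the slowest hop limits the whole path
    delay = sum(d for _, d in hops)       # delays accumulate along the path
    return bandwidth, delay


path = [(100, 1), (10, 5), (1000, 2)]     # access switch, slow link, backbone
print(end_to_end(path))                   # (10, 8)
```

Upgrading the backbone hop from 1000 to 10000 Mbit/s in this model changes nothing: the path is still limited to 10 Mbit/s by the slow link.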

As a result, most networks operated with the quality of transport service that met the needs of the applications. True, these networks did not provide any guarantees regarding the control of packet delays or the throughput with which packets are transmitted between nodes, within certain limits. Moreover, during temporary network overloads, when a significant part of computers simultaneously began to transfer data at maximum speed, delays and throughput became such that applications would fail - they were too slow, with session breaks, etc.

There are two main approaches to ensuring network quality. The first is that the network guarantees the user compliance with a certain numerical value of the quality of service indicator. For example, frame relay and ATM networks can guarantee the user a given level of throughput. In the second approach (best effort), the network tries to serve the user as efficiently as possible, but does not guarantee anything.

The transport service provided by such networks came to be called "best effort", that is, service "with maximum effort" (or "as far as possible"). The network tries to process incoming traffic as quickly as possible, but gives no guarantees about the result. Examples include most technologies developed in the 1980s: Ethernet, Token Ring, IP, X.25. Best-effort service is based on some fair algorithm for processing the queues that arise during network congestion, when for some time the rate at which packets enter the network exceeds the rate at which they are forwarded. In the simplest case, the queue processing algorithm treats packets from all flows as equal and forwards them in order of arrival (First In, First Out, FIFO). If the queue becomes too large (does not fit in the buffer), the problem is solved by simply discarding newly arriving packets.
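The simplest best-effort discipline described above, a FIFO queue that discards new arrivals when the buffer is full, can be sketched as follows. The class name `DropTailQueue` and the buffer size are assumptions for illustration.

```python
from collections import deque


class DropTailQueue:
    """FIFO queue with a bounded buffer; new packets are dropped when it is full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.dropped = 0
        self._buffer = deque()

    def enqueue(self, packet):
        """Accept the packet if there is room; otherwise discard it."""
        if len(self._buffer) >= self.capacity:
            self.dropped += 1
            return False
        self._buffer.append(packet)
        return True

    def dequeue(self):
        """Forward packets strictly in order of arrival."""
        return self._buffer.popleft() if self._buffer else None


queue = DropTailQueue(capacity=3)
for number in range(5):              # a burst of 5 packets hits a 3-packet buffer
    queue.enqueue(f"pkt-{number}")

print(queue.dropped)                 # 2
print(queue.dequeue())               # pkt-0
```

All flows are treated alike here: a voice packet arriving during the burst is discarded just as readily as a file-transfer packet.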

It is obvious that the service "with best efforts" provides acceptable quality of service only in cases where network performance far exceeds average needs, that is, it is redundant. In such a network, the throughput is sufficient even to support traffic during peak periods. It is also obvious that such a solution is not economical - at least in relation to the throughput of today's technologies and infrastructures, especially for global networks.

However, building networks with excess capacity, being the simplest way to ensure the required level of service quality, is sometimes used in practice. For example, some TCP/IP network service providers guarantee quality of service by constantly maintaining a certain excess of backbone capacity over customer needs.

In conditions where many mechanisms for supporting quality of service are just being developed, the use of excess bandwidth for these purposes is often the only possible, albeit temporary, solution.

Option 1

1. Which technique will reduce the network response time when the user works with a database server?

transferring the server to the network segment where the majority of clients work

replacing the server hardware platform with a more productive one

reduction in the intensity of client requests

reducing the size of the database

2. Which of the following statements are incorrect?

transmission delay is synonymous with network response time

Bandwidth is synonymous with traffic transmission speed

transmission delay – the reciprocal of throughput

quality of service mechanisms cannot increase network throughput

3. Which of the following characteristics can be attributed to the reliability of a computer network?

readiness or readiness rate

reaction time

data safety

data consistency

transmission delay

probability of data delivery

Option 2

1. Data transmission speed was measured on the network from 3 to 5 o'clock. The average speed was determined. Instantaneous speed measurements were taken at intervals of 10 seconds. Finally, the maximum speed was determined. Which of the statements are true?

average speed is always less than maximum

average speed is always less than instantaneous

instantaneous speed is always less than maximum

2. Which of the following translations of the names of network characteristics from English into Russian do you agree with?

availability – reliability

fault tolerance - fault tolerance

reliability - readiness

security - secrecy

extensibility - extensibility

scalability - scalability

3. Which of the statements are true?

the network may have high throughput, but introduce significant delays in the transmission of each packet

"best effort" service provides acceptable quality of service only if there is excess network capacity

Option 3

1. Which of the statements are true?

throughput is a constant value for each technology

network bandwidth is equal to the maximum possible data transfer rate

throughput depends on the volume of transmitted traffic

the network may have different throughput values in different sections

2. What property should a network have, first of all, to live up to the well-known Sun Microsystems slogan "The network is the computer"?

high performance

high reliability

high degree of transparency

excellent scalability

3. Which of the statements are wrong?

extensibility and scalability are two names for the same system property

Using QoS you can increase network throughput

For computer traffic, uniformity of data transmission is more important than high network reliability

all statements are true

Required literature

1. V.G. Olifer, N.A. Olifer. Computer Networks: Principles, Technologies, Protocols. Textbook for university students in the field of "Informatics and Computer Engineering".

Additional literature

1. V.G. Olifer, N.A. Olifer. Network Operating Systems. Peter, 2001.

2. A.Z. Dodd. World of Telecommunications: Technology and Industry Overview. Olympus Business, 2002.

About the project

Preface

Lecture 1. The evolution of computer networks. Part 1. From Charles Babbage's machine to the first global networks

Two roots of data networks

The emergence of the first computers

Software monitors: the first operating systems

Multiprogramming

Multi-terminal systems: a prototype of the network

The first networks are global

Legacy of telephone networks

Lecture 2. The evolution of computer networks. Part 2. From the first local networks to modern network technologies

Mini-computers: harbingers of local networks

Emergence of standard local network technologies

The role of personal computers in the evolution of computer networks

New opportunities for local network users

Evolution of network operating systems

Lecture 3. Basic tasks of building networks

Communication between a computer and peripheral devices

Communication between two computers

Client, redirector and server

The task of physical data transmission over communication lines

Lecture 4. Communication problems between multiple computers

Topology of physical connections

Addressing network nodes

Lecture 5. Switching and multiplexing

Generalized switching problem

Definition of information flows

Defining routes

Notifying the network about the selected route

Forwarding: flow recognition and switching at each transit node

Multiplexing and demultiplexing

Shared media

Lecture 6. Circuit switching and packet switching. Part 1

Different approaches to performing switching

Circuit switching

Packet switching

Message switching

Lecture 7. Circuit switching and packet switching. Part 2

Permanent and dynamic switching

Throughput of packet-switched networks

Ethernet: an example of a standard packet switching technology

Datagram transmission

Virtual channels in packet-switched networks

Lecture 8. Structuring networks

Reasons for structuring the transport infrastructure of networks

Physical structuring of the network

Logical structuring of the network

Lecture 9. Functional roles of computers on the network

Multilayer network model

Functional roles of computers on the network

Peer-to-peer networks

Dedicated server networks

Network services and the operating system

Lecture 10. Convergence of computer and telecommunication networks

General structure of a telecommunications network

Telecom operator networks

Corporate networks

Department networks

Campus networks

Enterprise networks

Lecture 11. OSI model

Multi-level approach

Decomposition of the network communication problem

Protocol. Interface. Protocol stack

OSI model

General characteristics of the OSI model

Physical layer

Data link layer

Network layer

Transport layer

Session layer

Presentation layer

Application layer

Network-dependent and network-independent layers

Lecture 12. Network standardization

The concept of an "open system"

Modularity and standardization

Sources of standards

Internet standards

Standard communication protocol stacks

The book may be used exclusively for educational purposes. Duplication of information resources for the purpose of obtaining commercial benefits, as well as other...

They work, but not quite as well as we would like. For example, it is not very clear how to restrict access to a network drive, the accountant's printer stops working every morning, and there is a suspicion that a virus is lurking somewhere, because the computer has become unusually slow.

Sound familiar? You are not alone: these are classic signs of misconfigured network services. This is completely fixable; we have helped solve similar problems hundreds of times. Let's call it modernizing the IT infrastructure, or increasing the reliability and security of a computer network.

Increasing the reliability of a computer network - who benefits?

First of all, it is needed by a manager who cares about the company. The result of a well-executed project is a significant improvement in network performance and the almost complete elimination of failures. For this reason, the money spent on upgrading the network, improving the IT infrastructure, and raising the level of security should be considered not a cost but an investment that will certainly pay off.

A network modernization project also benefits ordinary users, because it allows them to focus on their immediate work rather than on solving IT problems.

How we carry out a network modernization project

We are ready to help you get to the bottom of the problem, and it is not difficult. Start by calling us and asking for an IT audit. It will show you what causes your daily problems and how to get rid of them. We will do it for you either inexpensively or free of charge.

Essentially, an IT audit is part of a network modernization project. As part of the audit, we not only inspect the server and workstations and work out how the network equipment and telephony are connected, but also develop a network modernization project plan and determine the project budget, both for our work and for the necessary equipment or software.

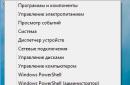

The next stage is the actual implementation of the network modernization project. The main work is carried out on the server, since it is the defining component of the infrastructure. Our task, as part of the network modernization project, is to eliminate not so much the manifestations as the roots of the problems. As a rule, they boil down to approximately the same conceptual infrastructure deficiencies:

a) servers and workstations operate as part of a workgroup rather than a domain, which Microsoft recommends for networks with more than five computers. This leads to problems with user authentication, the inability to manage passwords effectively and limit user rights, and the inability to use security policies.

b) network services are configured incorrectly, in particular DNS, and computers stop seeing each other or network resources. For the same reason, most often the network “slows down” for no apparent reason.

c) computers have a mix of different anti-virus products installed, which turns protection into a sieve. You can work on a slow machine for years without realizing that 80% of its resources are being used to attack other computers or send spam. It may also be stealing your passwords or sending everything you type to an external server. Unfortunately, this is quite possible; reliable anti-virus protection is an important and necessary part of any network modernization project.

These are the three most common causes of infrastructure problems, and each of them needs to be fixed urgently. It is necessary not only to eliminate the problem, but also to build the system competently so that such problems cannot arise in the first place.

By the way, we try to use the phrase "information system modernization" instead of "network modernization", because we try to take a broader view of network problems. In our opinion, an information system should be considered from various points of view, and a professional developing a network modernization project must take into account the following aspects of its operation.

Information security of your company

When we talk about a company's information security, we consider it important to focus not so much on external protection from intrusions via the Internet as on putting employees' internal work in order. Unfortunately, the greatest damage to a company is caused not by unknown hackers, but by people you know by sight who might be offended by your decisions or consider the information their own property. A manager stealing the customer base or a disgruntled employee copying accounting or management information "just in case" are two of the most common information security violations.

Data Security

Unfortunately, data security is very rarely on the priority list of managers, or even of many IT specialists. The thinking goes that if even spacecraft fall out of orbit, preventing a server failure is next to impossible. As a result, a completed network modernization project often does not cover this part of the infrastructure.

We partly agree that it is not always possible to prevent an accident. But any self-respecting IT specialist can and should make sure that the data always remains safe and sound, and that the company's work can be restored within an hour or two of a server failure. During a network modernization project, we consider it our duty to implement both hardware redundancy schemes for storage media and data backup under a scheme that allows data to be restored as of the required point in time and keeps it safe over the long term. And if an administrator does not understand the meaning of these words, then, to put it mildly, he is not trustworthy as a professional.

Durability of equipment operation

The long-term performance of servers and workstations is directly related to what they are made of and how they are made. We try to help you choose equipment that is bought to last and that does not require attention for many years. As part of a network modernization project, it is very often necessary to upgrade the server's disk subsystem, which, unfortunately, is often forgotten. The actual service life of hard drives does not exceed 4 years, and after that time they must be replaced in servers. This should be monitored as part of server and computer maintenance, as it is very important for the reliability of data storage.

Maintenance of server and computer systems

We should not forget that even a properly structured and reliable infrastructure requires competent and attentive maintenance. We believe that IT outsourcing of infrastructure maintenance is a logical continuation of the design work. A number of companies have their own IT specialists but have entrusted us with maintaining their server systems. This practice is highly efficient: the company pays only for server support and handles the low-level tasks itself. We are responsible for security policies and backups, carry out routine maintenance, and monitor the server systems.

Relevance of IT solutions

The world is constantly changing. The IT world is changing twice as fast. Technologies are born and die faster than we would like to spend money on updating them. Therefore, when carrying out a network modernization project, we consider it necessary to introduce not merely the newest, but above all the most reliable and justified solutions. What everyone is talking about is not always a panacea or a solution to your problem. Often, things are not at all as they are described. Virtualization and cloud computing are used by thousands of companies, but implementing a given technology is not always economically justified. Conversely, a correctly chosen and competently executed network modernization project and a reasonable choice of software open up new opportunities at work and save time and money.

Paid Windows or free Linux? MS SharePoint or "Bitrix: Corporate Portal"? IP telephony or classic? Each product has its own advantages and its own scope of application.

What does your company need? How can you complete a network modernization project or introduce a new service without interrupting the company's operations? How can you ensure that the implementation is successful and that employees receive the best tools for their work? Call us, and let's figure it out.