The processor is a small component inside the system unit. Despite its size, it determines the most important thing about a computer: its performance. If you decide to upgrade your PC, you should take the choice of processor very seriously. With so many processor models on the market, making the right choice is difficult. Our performance ranking should help: below we look at the fastest models of 2015.

9,044 points

- architecture – Skylake;

- cores – 4;

- threads – 8;

- base/turbo frequency – 2.8/3.6 GHz;

- cache – 8 MB;

- unlocked – no;

- integrated graphics – Intel HD Graphics 530;

- TDP – 35 W;

- compatible memory – DDR3L 1333/1600 MHz, DDR4 1866/2133 MHz;

- socket – FCLGA1151.

The performance result in the PassMark test is 9,044 points.

9,535 points

- architecture – Piledriver;

- cores – 8;

- threads – 8;

- base/turbo frequency – 4.4/4.7 GHz;

- L2 cache – 8 MB;

- L3 cache – 8 MB;

- unlocked – yes;

- overclocking – up to 5.2 GHz;

- integrated graphics – none;

- TDP – 220 W (water cooling required);

- compatible memory – DDR3 up to 1866 MHz;

- socket – AM3+.

In the PassMark test, the processor scored 9,535 points.

9,809 points

In eighth place in terms of performance in our 2015 ranking is the processor.

- architecture – Ivy Bridge-E;

- cores – 4;

- threads – 8;

- base/turbo frequency – 3.7/3.9 GHz;

- cache – 10 MB;

- unlocked – yes;

- overclocking – up to 4.71 GHz;

- TDP – 130 W;

- compatible memory – DDR3 1333/1600/1866;

- socket – FCLGA2011.

In the PassMark test, the processor scored 9,809 points.

9,949 points

- process technology – 14 nm;

- architecture – Skylake;

- cores – 4;

- threads – 8;

- base/turbo frequency – 3.4/4 GHz;

- cache – 8 MB;

- unlocked – no;

- integrated graphics – Intel HD Graphics 530;

- TDP – 65 W;

- compatible memory – DDR4 1866/2133, DDR3L 1333/1600 @ 1.35 V;

- socket – FCLGA1151.

PassMark test result: 9,949 points.

10,260 points

- process technology – 32 nm;

- architecture – Piledriver;

- cores – 8;

- threads – 8;

- base/turbo frequency – 4.7/5 GHz;

- L2 cache – 8 MB;

- L3 cache – 8 MB;

- unlocked – yes;

- overclocking – up to 5.13 GHz;

- integrated graphics – none;

- TDP – 220 W (water cooling required);

- compatible memory – DDR3 up to 1866 MHz;

- socket – AM3+.

The PassMark test ended with a score of 10,260 points.

11,007 points

- process technology – 14 nm;

- architecture – Skylake;

- cores – 4;

- threads – 8;

- base/turbo frequency – 4/4.2 GHz;

- cache – 8 MB;

- unlocked – yes;

- overclocking – up to 4.81 GHz;

- integrated graphics – Intel HD Graphics 530;

- TDP – 91 W;

- compatible memory – DDR4 1866/2133, DDR3L 1333/1600 @ 1.35 V;

- socket – FCLGA1151.

In the PassMark test, the processor scored 11,007 points.

12,077 points

- process technology – 32 nm;

- architecture – Sandy Bridge-E;

- cores – 6;

- threads – 12;

- base/turbo frequency – 3.2/3.8 GHz;

- cache – 12 MB;

- unlocked – yes;

- overclocking – up to 4.68 GHz;

- integrated graphics – none;

- TDP – 130 W;

- compatible memory – DDR3 1066/1333/1600;

- socket – FCLGA2011.

According to the PassMark test results, the processor received 12,077 points.

12,995 points

- process technology – 22 nm;

- architecture – Haswell-E;

- cores – 6;

- threads – 12;

- base/turbo frequency – 3.3/3.6 GHz;

- cache – 15 MB;

- unlocked – yes;

- overclocking – up to 4.48 GHz;

- integrated graphics – none;

- TDP – 140 W;

- compatible memory – DDR4 1333/1600/2133;

- socket – LGA2011-v3.

In the PassMark test, the processor scored 12,995 points.

13,629 points

In second place in our 2015 performance ranking is the Intel Core i7-5930K.

- process technology – 22 nm;

- architecture – Haswell-E;

- cores – 6;

- threads – 12;

- base/turbo frequency – 3.5/3.7 GHz;

- cache – 15 MB;

- unlocked – yes;

- overclocking – up to 4.75 GHz;

- integrated graphics – none;

- TDP – 140 W;

- compatible memory – DDR4 1333/1600/2133;

- socket – LGA2011-v3.

The PassMark test showed 13,629 points for this processor.

15,987 points

- process technology – 22 nm;

- architecture – Haswell-E;

- cores – 8;

- threads – 16;

- base/turbo frequency – 3/3.5 GHz;

- cache – 20 MB;

- unlocked – yes;

- overclocking – up to 4.58 GHz;

- integrated graphics – none.

Results of CPU testing using the 2015 methodology

62 processors and 80 different configurations

Another year has turned on the calendar and we have prepared new methods for testing computer systems, which means it is time to sum up the results of processor testing (a special case of system testing) for 2015. Last year's summary was quite brief: it covered only 36 systems that differed only in their processors, with results obtained exclusively on the GPUs built into them. For obvious reasons, that approach left out a considerable number of platforms that lack integrated graphics, so we modified it slightly and began using a discrete video card, at least where one is needed. Even so, the 2015 tests were to some extent a “training exercise”: in 2016 we plan to refine the approach further to bring it closer to real life. Be that as it may, today we present the results of 62 processors (more precisely, 61 distinct models, but thanks to cTDP one of them counts as two). And that is not all: 14 of them were tested with two “video cards”, an integrated GPU (different for each) and a discrete Radeon R7 260X. We also tested four processors for the latest LGA1151 platform with two types of memory: DDR4-2133 and DDR3-1600. The total number of configurations thus came to 80. That is far fewer than the 149 in the summary before last, but we collected data for that one over two and a half years, whereas the current test method had a “lifetime” of approximately eight months, that is, almost three times shorter. In addition, unifying the tests across different systems lets you compare these results with those obtained when testing laptops, all-in-one PCs and other complete systems.
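The configuration count quoted above can be checked with a few lines of arithmetic. This is only an illustrative sketch: the function name is hypothetical, and it assumes that every processor counts once and that each additional GPU or memory variant adds exactly one more configuration.

```python
def total_configurations(processors: int, dual_gpu: int, dual_memory: int) -> int:
    """Count test configurations.

    Assumption: each processor contributes one configuration, and every
    processor tested with a second GPU or a second memory type adds one more.
    """
    return processors + dual_gpu + dual_memory


# Figures quoted in the text: 62 processors, 14 tested with two "video cards",
# four tested with two types of memory.
total_2015 = total_configurations(processors=62, dual_gpu=14, dual_memory=4)
print(total_2015)  # 80
```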

But in this article, as mentioned above, we limit ourselves to processors. More precisely, to systems that differ mainly in their processors: clearly, “testing processors” (especially across different platforms) stopped meaning anything else long ago, although for some this still comes as a revelation :)

Test bench configuration

Since there are many test subjects, it is not possible to describe their characteristics in detail. After some thought, we decided to abandon the usual short table: it was becoming too unwieldy anyway, and by popular request we have put some parameters directly on the charts. In particular, since some readers ask us to show the number of cores/modules and simultaneously executed threads right there, along with the operating clock frequency range, we have tried to do just that. If readers like the result, we will keep it for other tests in the coming year. The format is simple: “cores/threads; minimum/maximum core clock speed in GHz.”
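For readers who want to process the chart labels programmatically, here is a minimal sketch of a parser for the “cores/threads; minimum/maximum clock” format. The class and function names are hypothetical, and the sample label is an assumed example built from the figures of a four-core, eight-thread 4/4.2 GHz processor; the actual strings on the charts may differ in spacing or precision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChartLabel:
    cores: int
    threads: int
    min_ghz: float
    max_ghz: float


def parse_chart_label(text: str) -> ChartLabel:
    """Parse a label such as '4/8; 4.0/4.2' into its four fields."""
    counts, clocks = (part.strip() for part in text.split(";"))
    cores, threads = (int(value) for value in counts.split("/"))
    min_ghz, max_ghz = (float(value) for value in clocks.split("/"))
    return ChartLabel(cores, threads, min_ghz, max_ghz)


def format_chart_label(label: ChartLabel) -> str:
    """Render the fields back into the compact chart format."""
    return f"{label.cores}/{label.threads}; {label.min_ghz:g}/{label.max_ghz:g}"


label = parse_chart_label("4/8; 4.0/4.2")
print(label.cores, label.threads, label.min_ghz, label.max_ghz)
```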

All other characteristics will have to be looked up elsewhere: the easiest sources are the manufacturers for specifications and stores for prices. Moreover, for some devices prices cannot be determined at all, since the processors themselves are not sold at retail (all BGA models, for example). All this information is, of course, also available in the review articles devoted to these models; today we have a slightly different task than studying the processors themselves: we bring all the collected data together and look at the patterns that emerge, paying attention to the relative standing not of processors, but of the entire platforms that include them. For this reason, the data in the charts is grouped by platform.

All that remains is to say a few words about the environment. As for memory, we almost always used the fastest type supported by the specification. There are two exceptions: what we call “Intel LGA1151 (DDR3)” and the Core i5-3427U. For the latter we simply had no suitable DDR3-1600 modules, so it had to be tested with DDR3-1333; the former denotes LGA1151 processors paired with DDR3-1600 rather than the faster (and “primary” according to the specifications) DDR4-2133. The amount of memory was the same in most cases, 8 GB, except for the two versions of LGA2011, which got 16 GB of DDR3 or DDR4 respectively, since a four-channel controller practically invites a larger amount of RAM. The system drive (a 256 GB Toshiba THNSNH256GMCT) was the same for all subjects. As for video, everything has been said above: a discrete Radeon R7 260X and the integrated video core. The integrated core was always used when the processor had one (except for the Core i5-655K, since the first version of Intel HD Graphics is no longer supported by modern operating systems), while the discrete card was used where there was no integrated video, and in some cases alongside it, to compare the results.

Testing methodology

To evaluate performance, we used our benchmark-based performance measurement methodology. We normalized all test results relative to the results of a reference system, which last year was the same for laptops and all other computers, to make the hard work of comparison and selection easier for readers.

These normalized results can therefore be compared with those obtained in the same version of the benchmark for other systems (for example, with desktop platforms). For those interested in absolute results, we offer them as a file in Microsoft Excel format.
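The article does not spell out the normalization formula, so the following is only a sketch of one common way to do it. It assumes that each test reports a run time (lower is better) and that the reference system is pinned at 100 points; both the constant and the function name are assumptions made for illustration.

```python
REFERENCE_POINTS = 100.0  # assumption: the reference system scores 100 points


def normalized_score(reference_seconds: float, system_seconds: float) -> float:
    """Convert a run time into points relative to the reference system.

    A system that finishes twice as fast as the reference gets twice the points.
    """
    if reference_seconds <= 0 or system_seconds <= 0:
        raise ValueError("run times must be positive")
    return REFERENCE_POINTS * reference_seconds / system_seconds


# Hypothetical timings: the reference needs 120 s, the tested system 60 s.
print(normalized_score(120.0, 60.0))  # 200.0
```

Because every system is divided by the same reference, scores computed this way stay comparable across articles as long as the benchmark version and the reference system are unchanged.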

Video conversion and video processing

As we have noted more than once, a discrete video card can increase performance in this group, but the effect is clearly visible only on older platforms (such as LGA1155), where the integrated GPUs themselves were weak. That, in fact, explains why integrated graphics was made more powerful in newer generations: so that there would be no incentive to buy a video card as well :)

The dependence of performance on the number of threads is also clearly visible here. As a result, the range of results is very wide, spanning more than an order of magnitude: low-end dual- and quad-core CULV solutions (such as the old Celeron 1037U or the slightly newer but also outdated Pentium J2900) score only ≈55 points, while the top eight-core Core i7-5960X scores a full 577. But the main “crush” is in the mass segment (up to $200): a modern Core i5 raises performance fivefold relative to the “floor level”, while further investment only doubles it. There is nothing surprising in that: the higher you go, the more each step costs.

As for comparing platforms... there is little point in comparing them. Indeed, the desktop AMD FM2+ roughly matches only Intel's ultrabook processors, and the nominally top-end AM3+ matches only the long-outdated LGA1155. Intel's own generation-to-generation growth is small, however: even in such well-optimized tasks we are talking about only 15-20% at each step. (This does sometimes lead to qualitative changes: for example, the Core i7-6700K has effectively caught up with the once top-of-the-line six-core i7-4960X, despite a significantly lower price and a simpler design.) Overall, it is clear that manufacturers are busy with entirely different issues and are not trying to greatly increase the performance of desktop systems.

Video Content Creation

As we have written more than once, the multi-threaded test in Adobe After Effects CC 2014.1.1 let us down in this group. To work properly, it should have at least 2 GB of memory per computation thread; otherwise the test may “drop” into single-threaded mode and run even slower than without Multiprocessing technology (as Adobe calls it). So full operation with eight threads calls for 16 GB of RAM, and an eight-core processor with Hyper-Threading needs a minimum of 32 GB. On most systems we use 8 GB, which is enough for “eight-thread” systems with integrated video (if they have it: that applies to desktop Core i7s, whereas the FX-8000, for example, is worse off), but not with discrete. This is another stone thrown at those who still believe in “processor testing” as something independent of the platform and the rest of the environment: as we can see, attempts to equalize the environment sometimes produce very interesting effects. A “pure” comparison is perhaps possible only within a single platform, and even then not always: the amount of memory some programs need may depend on the processor itself, and not on it alone. This hits top models hardest, because they need more, and “more” in this case means more expensive.
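The 2 GB-per-thread rule of thumb above translates directly into a small calculation. The sketch below only encodes the figure quoted in the text; the constant and function names are hypothetical, and the rule is a recommendation rather than a hard limit.

```python
GB_PER_THREAD = 2  # recommendation quoted in the text for the After Effects test


def recommended_memory_gb(threads: int) -> int:
    """Memory recommended for running the test with the given number of threads."""
    if threads < 1:
        raise ValueError("thread count must be at least 1")
    return threads * GB_PER_THREAD


print(recommended_memory_gb(8))   # eight threads -> 16
print(recommended_memory_gb(16))  # eight cores with Hyper-Threading -> 32
```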

In any case, “processor dependence” in this group of applications is less pronounced than in the previous one: there the top Core i5 outperformed the low-voltage surrogates fivefold, and here by only a little more than fourfold. A more powerful video card also raises the results noticeably less here, although it should not be neglected (where possible) either.

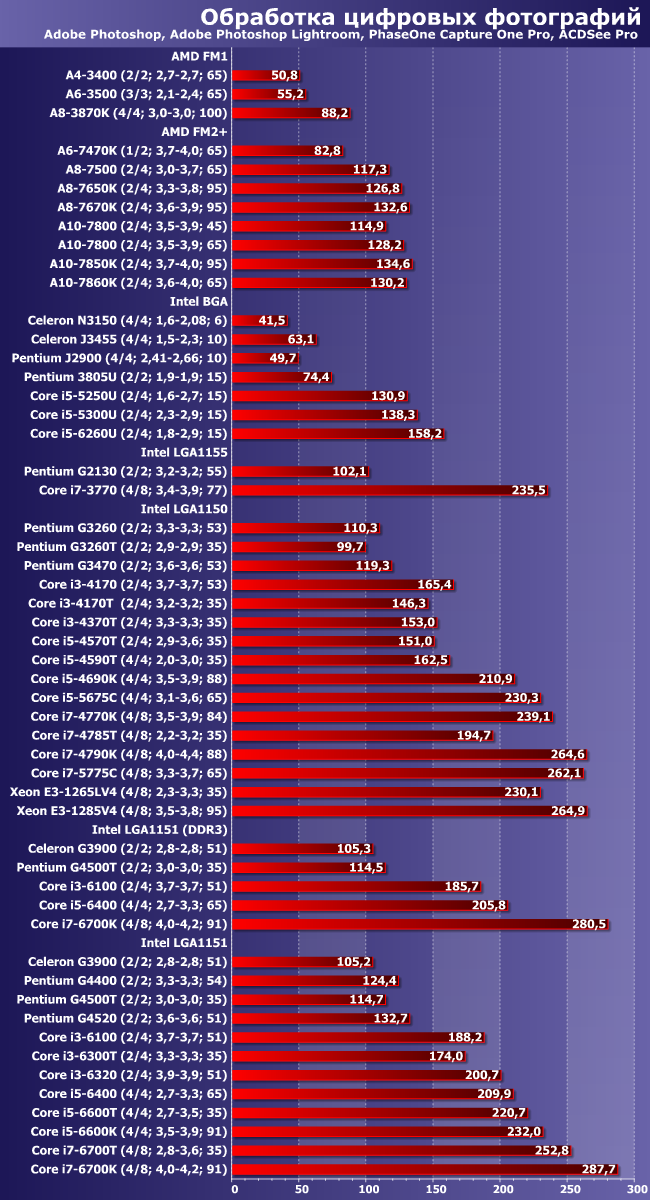

Digital Photo Processing

This group is interesting because it is completely different from the previous ones. In particular, the degree of multi-threading utilization is much lower here, which noticeably narrows the range of results, yet the difference between the Core i5 (we will keep using this family as the upper level of the mass segment, since sales of systems based on more expensive processors are incomparably lower) and entry-level devices exceeds sixfold. Why is that? First, performance depends noticeably on the GPU, and above all on the integrated one: a discrete card cannot reach its full potential because of the need for frequent data transfers. And the power of integrated graphics differs significantly between low-end and high-end processors! Second, we should not forget that low-end and high-end processors differ not only quantitatively but also qualitatively, for example in the instruction sets they support. This hits both the lower Intel families (recall that the Pentium, for example, still does not support AVX) and outdated processors from both companies.

Vector graphics

And here is a telling example of how different modern software can be, even when we are talking about programs that are, to put it mildly, far from cheap and not meant for “home use”. As we have noted more than once, Illustrator last received any serious optimization about 10 years ago, so for fast work the program needs processors as similar as possible to the Core 2 Duo: a couple of cores at most, with maximum single-threaded performance and without support for new instruction sets. As a result, modern Pentiums look the most advantageous (taking price into account), and higher-end processors can be faster only because of their higher clock speeds. Processors of other architectures fare very badly under these conditions. Even within Intel's own lineup, performance-boosting measures such as adding an L4 cache are a hindrance here rather than a help. In any case, trying to greatly speed up work in this program (and similar ones) is not very promising: the mere fourfold difference between the best Core i5 and the surrogate platforms speaks for itself.

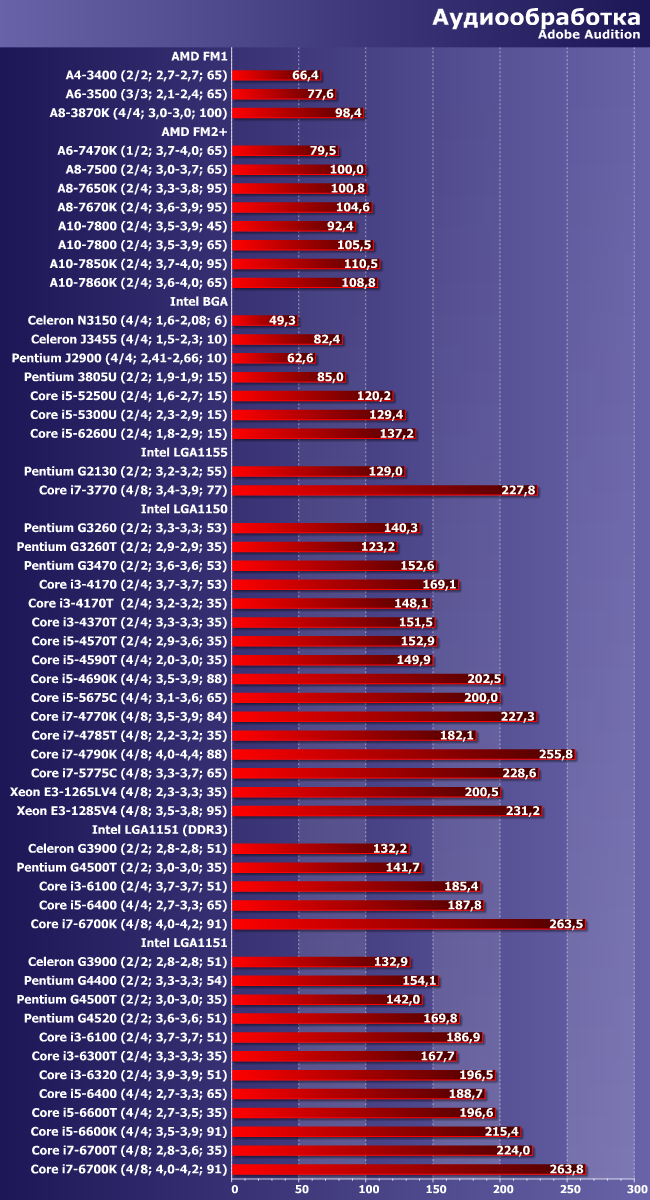

Audio processing

Here is an example of a situation where extra cores would seem to be useful and even the GPU matters, and yet the difference between the Celeron N3150 (the slowest in this test) and the Core i7 for mass-market platforms is only about fivefold. Moreover, a considerable part of that gap can be attributed to the “surrogate” nature of the low-end architectures: the very old Celeron 1037U (heavily cut down, but a full-fledged Core) is almost one and a half times faster than the N3150, and the entry-level desktop Pentiums are three times faster. But beyond that, the more expensive the processor, the less the extra money buys. Even AMD's “construction equipment” (the Bulldozer-family designs) with its “budget multi-threading” can only compete with that same Pentium here: six threads are faster than four from the same manufacturer, but they look unconvincing against just two cores of the competing design.

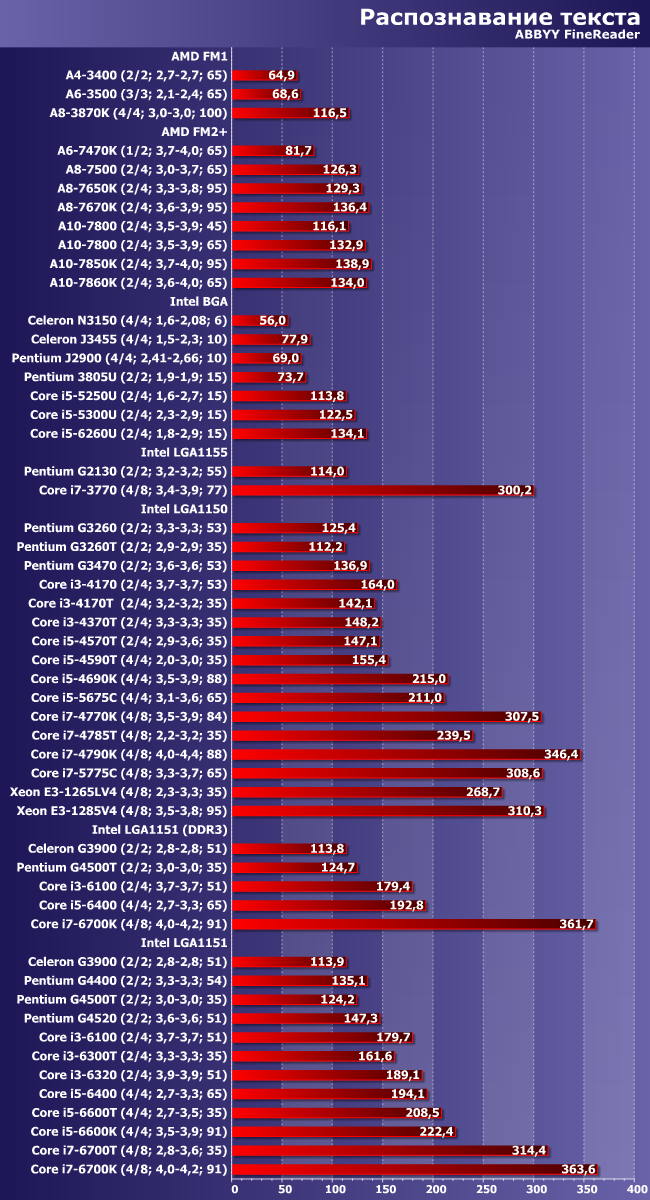

Text recognition

This case is not at all like the previous one: here the FX-8000 still easily outperforms any Core i5. Note that this is how AMD positioned them at launch, between the i5 and i7, price included. The price, unfortunately, later had to be cut radically, since the number of such “convenient” tasks turned out to be rather small. However, if a user is interested in exactly these tasks, this is an opportunity to save a lot of money. One must bear in mind, of course, that this family has not been updated for more than three years (not seriously, at any rate), while Intel processors keep growing, slowly but surely.

The scalability problem is also clearly visible: however good additional cores and threads are, the more of them there are, the less each further increase gives. So it is no surprise that this process stopped long ago in mass-market processors: it would take more convincing arguments for many cores than can currently be found. Four modern cores are good. Four dual-threaded cores are even better. And that is about it.

Archiving and unarchiving data

While archiving uses all processor cores (and additional hardware threads), the reverse process is single-threaded. Given that unpacking is needed more often, this could be considered a nuisance if the process itself were not significantly faster. In fact, packing has also become too simple an operation to deserve close attention when choosing a processor. At least, this is true of mass-market desktop models: low-power specialized platforms can still “chew on” such tasks for a long time.

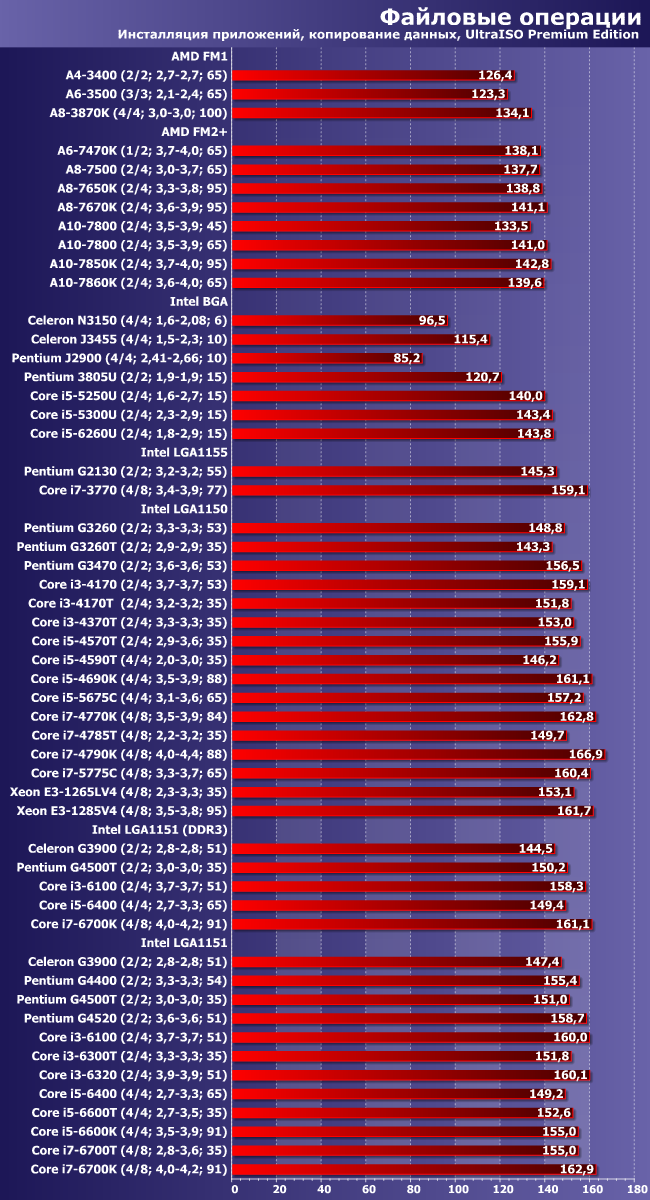

Speed of installation and uninstallation of applications

We introduced this task into the test methodology mainly because of the need to test complete systems: as we already know, the performance of the same processor can differ by one and a half to two times depending on the environment. But when the system has a fast drive and enough memory, the processors themselves do not differ fundamentally from one another. Surrogate platforms, it is true, may well turn out to be two to three times slower than “normal” desktop ones. But the latter differ little among themselves, be it a Pentium or a Core i7. Essentially, all that is needed from the processor is a single thread of computation at maximum performance, and, mobile systems aside, almost every processor delivers that to about the same degree.

File operations

These are even more “whole-platform” tests than processor tests. Within this line of tests we use the same drive, with all that this implies. But the “platform” can matter. For example, the LGA1156 results came as something of a surprise: it seems to be far from the worst desktop solution, one that until recently could even be considered fast (LGA775, still found among users, is worse still), yet under such loads it turned out to be comparable only with Bay Trail or Braswell. And even then the comparison does not favor the “old lady” that was once close to the top level. Modern budget systems, by contrast, hardly differ from non-budget ones, simply because the former are already fast enough for performance to be determined by other components of the system rather than limited by the processor or even the chipset.

Conclusion

We drew the main conclusions about processor families directly in the reviews, so they are not needed in this article: it is primarily a generalization of all the information obtained earlier, nothing more. And generalizations, as we can see, sometimes turn out to be interesting. First, it is easy to notice that the influence of discrete video cards on performance in mass-market programs can, on the whole, be considered absent. More precisely, it exists in some applications, but “spread out” across all the tests it quietly evaporates. At least, this is true of more or less modern platforms: it is easy to see that the weak integrated graphics of the LGA1155 era can lower even the overall result by five percent, which is noticeable, though not critical. The same should apply to older discrete video cards, which will also lag behind somewhat newer ones, but in that case the border between “good” and “bad” solutions lies not three but five or more years back from the present. In short, modern platforms are free of such problems. So a fair comparison does not require an identical video subsystem at all: if you need, for example, to compare a laptop with a desktop system, find a suitable article about a laptop (not necessarily that very laptop, another one on a similar platform will do) and compare. The storage system matters more, so if the articles are not on a par in that respect, you will have to limit yourself to the results of test groups that do not depend on the drive. As for video, let us repeat: no mass-market applications are strongly tied to it, but games are a completely different story.

Now let us try (as usual) to look at the performance range we managed to cover this year. The minimum overall result belongs to the Celeron N3150: 54.6 points. The maximum belongs to the Core i7-6700K: 258.4 points. “Professional” platforms such as LGA2011/2011-3 failed to take first place, although in some tests their multi-core representatives led confidently. The reasons have been stated more than once: mass-market software developers focus mainly on the hardware users actually own, not on any “shining peaks”. There are (and always have been and always will be) tasks for which computing resources are “always in short supply”, and those are the ones that call for top-end systems (sometimes far beyond the scope of our testing), but the bulk of tasks can easily be handled by a mass-market computer, often even an outdated one.
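To put the range in perspective, the two overall scores quoted above can be turned into ratios. This is a small illustrative calculation; the variable names are hypothetical, and the 100-point baseline is an assumption based on the reference system being scored at 100.

```python
lowest_score = 54.6    # Celeron N3150, overall result quoted in the text
highest_score = 258.4  # Core i7-6700K, overall result quoted in the text
reference_score = 100.0  # assumption: the reference system scores 100 points

# How many times faster the best system is than the slowest one.
spread = highest_score / lowest_score
# How far the best system is above the assumed 100-point baseline.
gain_over_reference = highest_score / reference_score

print(f"fastest vs slowest: {spread:.2f}x")
print(f"fastest vs reference: {gain_over_reference:.2f}x")
```

Under these figures the fastest system is a little under five times faster than the slowest one overall.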

In this regard, it is interesting to compare the current summary not with the previous one but with the one before it. Back then, testing followed a completely different scheme, always with a powerful discrete video card. There were also more professional applications, so the top six-core processors were, on the whole, still faster than the best solutions for mass-market platforms. Yet the Core i7-4770K scored 242 points then, which is quite comparable to the 258.4 of the Core i7-6700K (in terms of time-adjusted positioning these processors are equivalent: one was the fastest solution for the mainstream LGA1150 in 2013, the other plays the same role in 2016 for LGA1151). And both then and now, the various Pentium/Core i3/Core i5 models crowded into the 100-200 point range: nothing has changed. Except that the scoring itself has changed: the software was mentioned above, but the reference system is different too. Previously it was an AMD Athlon II X4 620 (a budget, but desktop and quad-core, processor) with a discrete Nvidia GeForce GTX 570 video card. Now it is an (ultrabook) Intel Core i5-3317U with no discrete graphics at all. It would seem that everything is different. But in practice it is the same: a budget desktop yields a hundred points, any further investment in it can at best raise performance (averaged over task classes) two and a half times, and a compact nettop on a surrogate platform will be two to three times slower. This state of affairs in the desktop segment was established long ago and has persisted ever since, as our summary results clearly show. So when you go to the store to buy a new computer, you do not need to read any articles: just count the money in your wallet :)

When are tests still needed? Mainly when the task is to replace an old computer with a new one, especially when you plan to “move to another class”, for example by switching from a desktop to a nettop or laptop. When buying a new solution of the same class you need not worry: a new Core i5, for example, will always be faster than an old one of the same class, so there is no great need for accurate estimates of “by how much”. But the fact that processors of every kind are slowly but surely getting faster can bring pleasant surprises, when it turns out, for example, that an old desktop can easily be replaced by an ultrabook without any negative consequences. And as we can see, this is quite possible, since everyone “grows”.

Results of CPU testing using the 2016 methodology

Part 1: 53 configurations with integrated graphics

A change of year on the calendar usually brings an update of our methods for testing computer systems, and with it a summing-up of the central processor testing (a special case of system testing) carried out over the past year. We had the bulk of the results long before the end of the year, but we wanted to add the “seventh-generation” Core processors to the summary (at least in limited numbers). Unfortunately, that proved impossible: the “original” version of Windows 10 used in tests under the 2016 methodology is incompatible with the Intel graphics drivers suitable for HD Graphics 630. More precisely, of course, it is the other way round: the driver requires at least the Anniversary Update. There is nothing new in this, in principle (the latest versions of Nvidia's graphics drivers, for example, behave similarly), but changing the software set of the test bench violates the concept of testing “under the closest possible conditions”. However, tests of the new processors under the 2017 methodology have already shown that there is nothing truly “new” in them, as expected. So we can do without the “Skylake Refresh” results for now, which is what we will do.

The second point to keep in mind is the number of test subjects. Last year's summary presented the results of 62 processors, 14 of which were tested with two “video cards”, an integrated GPU (different for each) and a discrete Radeon R7 260X, and four with different types of memory. In total there were 80 configurations. “Cramming” them all into one article is not that difficult (not so long ago, after all, we had 149 test configurations in a single article), but the charts were, to put it mildly, not very convenient to view. Besides, there is no great need to compare the Atom-class Celeron N3150 directly with the extreme ten-core Core i7-6950X either: these are fundamentally different platforms. The “vastness” of the summary articles under the “old” methodologies was mainly due to the fact that all participants in the main line of tests worked with the same discrete video card. Even then that approach was not always applicable, so some computer systems had to be moved to a separate line of tests, with the individual test results summarized afterwards.

This year we decided to do the same. Today's article presents results from 53 different configurations: 47 processors, five of which were tested with two different types of memory, and one with different TDP levels. But everything was done exclusively on the integrated GPU (again, different for each). To some extent this is a return to the 2014 summary, only with more results. In the near future, those who wish will be able to read a summary based on testing 21 processors with the same Radeon R9 380. Some of the participants overlap, and in general the test results are “compatible” with each other, but it seems to us that two separate articles are easier to take in. Readers who are only interested in dry numbers can (and have long been able to) compare them in any combination using the traditional one, which, by the way, also includes data from several “specialized” tests that are somewhat difficult to add to the summary articles.

Test bench configuration

Since there are many test subjects, it is not possible to describe their characteristics in detail. After some thought, we decided to abandon the usual short table: it is becoming too unwieldy anyway, and by popular request we again put some parameters directly on the charts, just like last year. In particular, since some readers ask us to show the number of cores/modules and simultaneously executed threads right there, along with the operating clock frequency range, we have tried to do just that, adding information about the thermal package as well. The format is simple: “cores (or modules)/threads; minimum-maximum core clock frequency in GHz; TDP in watts.”

All other characteristics will have to be looked up elsewhere: the easiest sources are the manufacturers for specifications and stores for prices. Moreover, prices for some devices are still undetermined, since the processors themselves are not sold at retail (all BGA models, for example). All this information is, of course, available in our review articles devoted to these models; today we have a slightly different task than studying the processors themselves: we bring the collected data together and look at the patterns that emerge, paying attention to the relative standing not of processors, but of the entire platforms that include them. For this reason, the data in the charts is grouped by platform.

All that remains is to say a few words about the environment. As for memory, we always used the fastest type supported by the specification, except in the case we call “Intel LGA1151 (DDR3)”: LGA1151 processors paired with DDR3-1600 rather than the faster (and “primary” according to the specifications) DDR4-2133. The amount of memory was always the same, 8 GB. The system drive was the same for all subjects. As for video, everything has been said above: this article uses only data obtained with the integrated video core. Accordingly, processors that lack one are automatically deferred to the next part of the summary.

Testing methodology

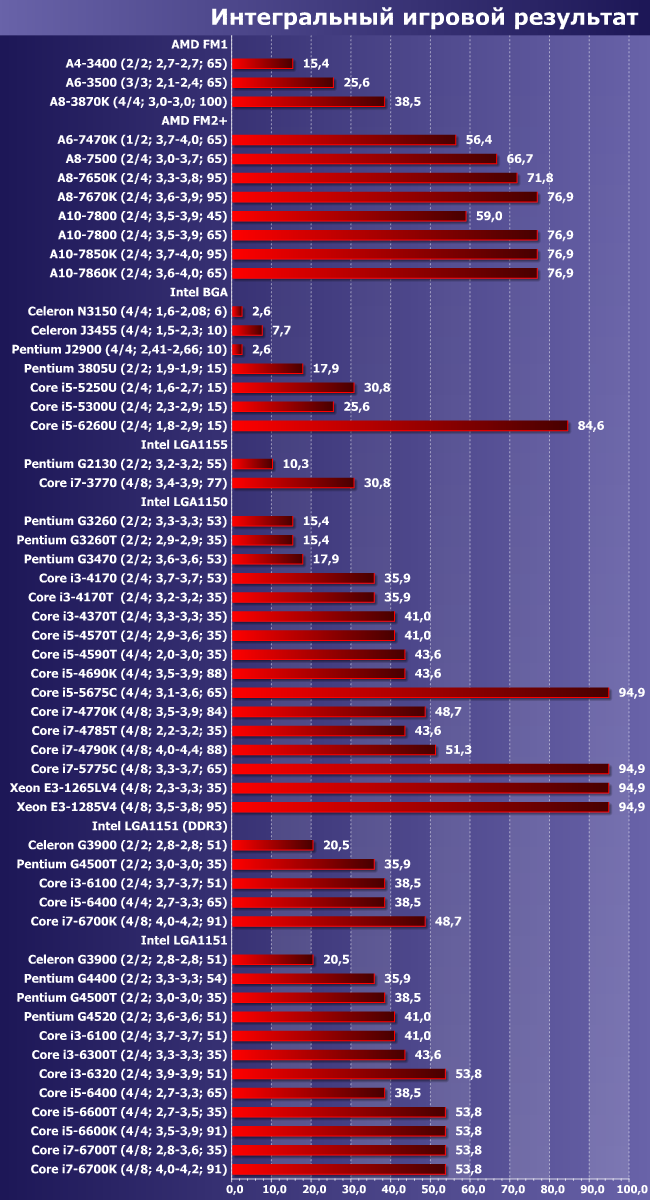

The methodology is described in detail separately. Here we will only note that the results rest mainly on two of the four standard “modules”. As for gaming performance, it has been demonstrated more than once that it is determined mainly by the video card used, so these applications are relevant primarily for tests of GPUs, and discrete ones at that. Serious gaming still requires a discrete video card, and if for some reason you have to make do with an IGP, you will need to choose and configure each game carefully for the specific system. On the other hand, our “Integral Game Result” is quite suitable for a quick assessment of the capabilities of integrated graphics (this is primarily a qualitative, not a quantitative, assessment), so we will present it as well.

Detailed results of all tests are published separately. Directly in the articles we use relative results, divided into groups and normalized to the reference system (as last year, a laptop based on the Core i5-3317U with 4 GB of memory and a 128 GB SSD). The same approach is used when testing laptops and other ready-made systems, so all results from different articles (obtained with the same version of the methodology, of course) can be compared despite the different environments.
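As an illustration of what "normalized to the reference system" can mean in practice, here is a minimal sketch. It rests on assumptions not stated in the text: that each test yields a run time (lower is better), that the reference system is assigned 100 points, and that a group score is the geometric mean of its test scores. The function names are hypothetical.

```python
from statistics import geometric_mean

REFERENCE_POINTS = 100.0  # assumption: the reference system scores 100 points


def normalized_score(reference_seconds: float, system_seconds: float) -> float:
    """Score one test against the reference; assumes lower run time is better."""
    if reference_seconds <= 0 or system_seconds <= 0:
        raise ValueError("run times must be positive")
    return REFERENCE_POINTS * reference_seconds / system_seconds


def group_score(test_scores: list[float]) -> float:
    """Combine the tests of one group; the geometric mean is an assumption."""
    return geometric_mean(test_scores)


# A system twice as fast as the reference in one test, equal to it in another.
scores = [normalized_score(120.0, 60.0), normalized_score(80.0, 80.0)]
print(scores)               # per-test scores relative to the reference
print(group_score(scores))  # one number for the whole group
```

Because every system is scored against the same reference, numbers obtained in different articles remain comparable as long as the same version of the methodology is used.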

Working with video content

This group of applications traditionally gravitates towards multi-core processors. But comparing formally identical models from different years shows clearly that the quality of the cores matters here no less than their number, and functionality (above all, that of the integrated GPU) matters as well. However, there is still nothing to please fans of “maximum performance”: AMD has never played in this market (even in the company's plans, the fastest processors will lack an IGP), and for Intel these are the LGA115x solutions, where per-thread performance and clock frequency rise gradually with each platform generation, but the “four cores - eight threads” formula stays in place and the frequencies cannot be said to be rising very actively. As a result, comparing the Core i7-3770 with the Core i7-6700K gives a 25% performance increase over five years: the same notorious “5% per year” that people usually complain about. On the other hand, in the Pentium G4520/G2130 pair the difference is already a quite significant 40%, and the new models of these processors for LGA1151 have acquired Hyper-Threading support, so they behave like the Core i3-6100, with all that this implies. In the nettop and tablet segment there is still room for intensive ways of raising performance, as brilliantly demonstrated by the Celeron J3455, which already outperforms some full-fledged desktop processors. In general, progress runs at different speeds in different market segments, and the reasons have been voiced long ago and many times: desktop computers are no longer the main target, and the days when performance had to be raised at any cost, because there was simply not enough of it for the tasks of mass users, also ended in the last decade. There are, of course, server platforms, but (again, unlike the situation at the end of the last century) this has long been a separate area, where considerable attention is paid to efficiency and not just to performance.
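The “5% per year” figure is what a simple division of 25% by five years gives. A compound calculation gives a slightly lower rate. The sketch below shows both; the function name is hypothetical, and the only input taken from the text is the 25% gain over five years.

```python
def annual_growth(total_gain: float, years: int) -> float:
    """Compound annual growth rate for a total fractional gain over `years`."""
    return (1.0 + total_gain) ** (1.0 / years) - 1.0


total_gain = 0.25  # Core i7-3770 vs Core i7-6700K, per the text
years = 5

simple = total_gain / years                  # naive division: 5% per year
compound = annual_growth(total_gain, years)  # roughly 4.6% per year

print(f"simple:   {simple:.1%}")
print(f"compound: {compound:.1%}")
```

Either way, the order of magnitude is the same, which is the point of the complaint.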

Digital Photo Processing

We continue to see similar trends, adjusted for the fact that Photoshop, for example, has only partial multi-threaded optimization. But some of the filters make active use of new instruction sets, so to some extent one compensates for the other in the case of budget desktop processors, though not for “atomic” platforms. Overall, performance does grow over a long time span, with a certain devaluation of old processor families (a Core i7 for LGA1155 is roughly a Core i5 for LGA1151), but the global “breakthroughs” that some “potential buyers” dream of have not happened for a long time. Perhaps they do not happen because changes generally occur only in Intel's range, and even those are planned :)

Vector graphics

We have dropped Adobe Illustrator from the new version of the methodology, and the final chart clearly shows why: the last thing this program was seriously optimized for was the Core 2 Duo, so a modern Celeron or a five-year-old Pentium is quite sufficient for work (note that this is not a household application, and a very expensive one), and even if you pay seven times more, you get only a one-and-a-half-fold speedup. So although performance in this case interests many people, there is no point in testing it: within such a narrow range it is easier to assume that all colas are the same :) Only the “atomic” solutions fall out of the picture, and it is not for nothing that for 10 years running they have been described as intended for consuming content, not producing it.

Audio processing

Adobe Audition is another program that leaves our test set starting this year. The main complaint is the same: the “required level of performance” is reached too quickly, and the “maximum” differs from it too little. Although the difference between a Celeron and a Core i7 in each iteration of LGA115x is roughly twofold, it is easy to see that most of it is already “gained” within the inexpensive, if not budget, processor lines. Moreover, this holds only for Intel processors: the application is generally somewhat biased against today's AMD platforms.

Text recognition

The days of rapid progress in character recognition are long gone, so the corresponding applications evolve without changes to their basic algorithms: these are, as a rule, integer algorithms that do not use new instruction sets, but they scale well with the number of computational threads. The latter produces a wide spread of values within a platform, up to threefold, which is close to the maximum possible (the effect of parallelizing code is usually not linear, after all). The former means there is no significant difference between processors of different generations of the same architecture: 20 percent at most over five years, which is even less than the “average”. But processors of different architectures behave differently, so this application remains an interesting tool.

Archiving and unarchiving data

Archivers, too, have in principle reached a level of performance at which their speed can be ignored in practice. On the other hand, they are useful because they respond quickly to changes in performance characteristics within a single processor family. Comparing different families, however, is risky: the fastest processor we tested (among those included in today's article, of course) turned out to be the Core i7-4790K, for a platform that is already formally “outdated”. And not everything runs smoothly in the “atomic” family either.

File operations

The chart clearly shows why, starting in 2017, these tests will no longer count towards the overall score and will be split off on their own: with the same fast drive, the results are too even. In principle, this could have been assumed a priori, but it did not hurt to check. Besides, as we can see, the results are even but not perfectly so: “surrogate” solutions, low-end mobile processors and old AMD APUs do not squeeze the maximum out of the SSD used. They do support SATA600, so nothing seems to prevent them from copying data at least as fast as “grown-up” platforms, yet performance is lower. Or rather, it was lower until recently; now it no longer matters.

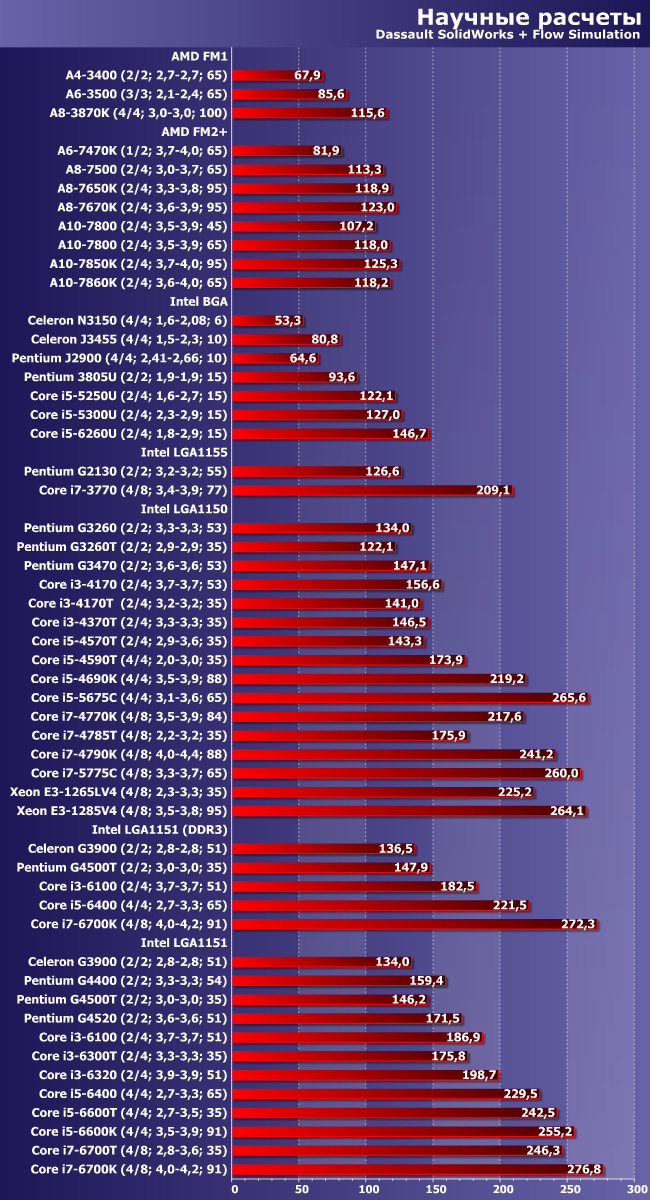

Scientific calculations

Questions about using SolidWorks Flow Simulation to test budget systems have come up regularly on the forum, but on the whole the results of this program are quite interesting: as we can see, it scales well across cores, but only across “physical” ones, and the various SMT implementations actually hurt it. Methodologically the case is interesting but not unique, while most programs in our set, if they are multi-threaded at all, are fully multi-threaded. Overall, though, the results of this scenario fit the general picture.

iXBT Application Benchmark 2016


So, what is the bottom line? Mobile processors are still a thing unto themselves: they match the performance of desktop processors, but of lower classes. There is nothing unexpected in that, and their power consumption is significantly lower. The performance gain between similarly positioned desktop Intel processors over five years is 20-30%, and the more “top-end” the family, the more slowly it grew. This, however, does not violate “social justice” in any way: it is precisely the budget segment that needs higher performance, as well as more powerful graphics (there may simply not be enough money for a discrete card). On the whole, thrifty buyers are in luck: one might say that the primary focus on laptops has benefited budget desktops as well. And not only in performance and purchase price, but also in cost of ownership.

At any rate, this is true of Intel solutions; the second remaining maker of x86 processors has been doing worse in recent years, to put it mildly. FM1 is a five-year-old solution, and FM2+ remained the company's most modern and powerful integrated platform until the end of 2016, yet they differ... by literally the same 20% as different generations of Core i7. Still, it cannot be said that nothing at all has changed over the years: the graphics have become more powerful and energy efficiency has improved, but gaming remains the main niche for these processors. Moreover, graphics performance at the level of low-end discrete video cards has to be paid for with both a weak CPU part and high power consumption, which is exactly what we turn to next.

Energy consumption and energy efficiency

In principle, the chart clearly explains why budget processors “grow” in speed faster than “non-budget” ones: power consumption is already constrained more tightly than desktop computers, generally speaking, require (although this is better than the horrors of the 90s and 2000s), and the relative share of “full-size desktops” has also shrunk greatly over the years and continues to fall. And for laptops or tablets, even the higher-end “atomic” models are no longer very comfortable, not to mention quad-core Core processors. The latter, by rights, should long since have become the main mass-market product; then, perhaps, the software industry would find a good use for such power.

Note that it is not just economy that has improved but, above all, energy efficiency, since modern processors spend less energy solving any task in the same or even less time. Moreover, working quickly pays off: the processor can stay in power-saving mode longer. Recall that these technologies first came into active use in mobile processors, back when such a division still existed; now all processors are like this to some degree. AMD shows the same trend, but the company has failed to repeat the success of even Sandy Bridge, and as a result lost the most “tasty” market segments. Let us hope that the release of processors and APUs based on a new microarchitecture and a new process technology will solve this problem.

iXBT Game Benchmark 2016

As stated in the description of the methodology, we limit ourselves to a qualitative assessment. Let us recall its essence: if a system delivers more than 30 FPS at a resolution of 1366×768, it receives one point, and for the same result at 1920×1080 it receives two more. Thus, with 13 games, the maximum score is 39 points. Reaching it does not mean the system is a gaming one, but such a system at least copes with 100% of our gaming tests. All other results are normalized to this maximum: the points are counted, multiplied by 100 and divided by 39, which gives the “Integral Game Result”. Truly gaming systems do not need it, since there everyone is more interested in the nuances, but it is quite suitable for assessing “universal” ones. A result above 50 means that you can sometimes play something more or less comfortably; around 30, even lowering the resolution will not help; and at 10-20 points (let alone zero), games with any kind of 3D graphics are better left unmentioned.
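The scoring rule just described is easy to express in code. The following is a minimal sketch: the function names and the sample FPS figures are hypothetical, and it assumes the two resolutions are scored independently (in practice, a system that passes at 1920×1080 will also pass at 1366×768).

```python
MIN_FPS = 30.0       # a result must exceed this to earn points
POINTS_LOW_RES = 1   # for 1366x768
POINTS_HIGH_RES = 2  # for 1920x1080


def game_points(fps_low_res: float, fps_high_res: float) -> int:
    """Points for one game: 1 for passing at 1366x768, 2 more at 1920x1080."""
    points = 0
    if fps_low_res > MIN_FPS:
        points += POINTS_LOW_RES
    if fps_high_res > MIN_FPS:
        points += POINTS_HIGH_RES
    return points


def integral_game_result(results: list[tuple[float, float]]) -> float:
    """Sum the points over all games and normalize to the maximum (0..100)."""
    max_points = (POINTS_LOW_RES + POINTS_HIGH_RES) * len(results)
    total = sum(game_points(low, high) for low, high in results)
    return total * 100 / max_points


# Hypothetical system: passes every one of 13 games at 1366x768 only.
low_res_only = [(35.0, 20.0)] * 13
print(round(integral_game_result(low_res_only), 1))  # 13 * 100 / 39
```

With 13 games the denominator is 39, so a system that passes every game at the lower resolution only ends up at roughly 33, which falls into the "around 30" band described above.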

As we can see, with this approach everything is simple: only AMD APUs for FM2+ (and most likely FM2) or any Intel processors with a fourth-level cache (eDRAM) can be considered “conditionally gaming” solutions. The latter are faster but rather specific: first, they are quite expensive (it is easier to buy an inexpensive processor and a discrete video card, which will give more comfort in games); second, most of them come in a BGA package, so they are sold only as part of ready-made systems. AMD, on the other hand, plays on a different field: its desktop A8/A10 have practically no alternative if you need to build a computer that is more or less suitable for games at minimal cost.

Other Intel solutions, as well as the lower-end (A4/A6) and/or outdated AMD APUs, are better not regarded as gaming solutions at all. This does not mean their owners will have absolutely nothing to play, but the range of available games will consist of titles that are either old or undemanding in terms of graphics performance. Or both at once. For anything else, they will have to buy at least an inexpensive discrete video card, but not the cheapest one, since “low-end” cards (as the relevant reviews have shown more than once) are comparable to the best integrated solutions, which means the money would be wasted.

Summary

In principle, we drew the main conclusions about processor families directly in their reviews, so none are required in this article: it is primarily a generalization of all the information obtained earlier, nothing more. Or rather, of almost all of it: as mentioned above, we have set some systems aside for a separate article, but there will be fewer of them, and those systems are less widespread. The main segment is here, at least as far as desktop systems are concerned, which now come in a variety of designs.

Generally speaking, the past year was of course rather poor in processor events: in the mass market both Intel and AMD continued to sell what had debuted in 2015 or even earlier. As a result, many participants in this year's and last year's summaries are the same, especially since we tested the “historical” platforms once again (for the last time, we hope :)). Last year the slowest was the Celeron N3150 at 54.6 points, and the fastest was the Core i7-6700K at 258.4 points. The positions have not changed this time, and the results are practically the same: 53.5 and 251.2 points. The top-end system fared even worse :) Note that this is despite a significant reworking of the software used, and precisely towards the tasks most demanding of computer performance. The budget “old-timer”, the Pentium G2130, on the contrary, grew from 109 to 115 points over the year, just as the “non-budget old-timer”, the Core i7-3770, began to look even slightly more attractive than before after the software update. With that, the idea of buying “performance for the future” can be put to rest, if anyone has not done so already ;)
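For readers who want to check the year-over-year figures quoted above, the relative changes can be computed directly. This is a small sketch; the helper name is hypothetical, and the scores are the ones given in the text.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, in percent."""
    return (new - old) / old * 100.0


# (last year's score, this year's score), as quoted in the text
scores = {
    "Celeron N3150": (54.6, 53.5),
    "Core i7-6700K": (258.4, 251.2),
    "Pentium G2130": (109.0, 115.0),
}

for name, (last_year, this_year) in scores.items():
    print(f"{name}: {percent_change(last_year, this_year):+.1f}%")
# Celeron N3150: -2.0%
# Core i7-6700K: -2.8%
# Pentium G2130: +5.5%
```

The relative drop is indeed larger for the top-end model than for the slowest one, while the old Pentium gains several percent.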