With Key Collector, you can not only significantly simplify collecting the semantic core for an advertising campaign, but also get the most complete, high-quality results and analysis.

It is worth noting that the program does not work with ready-made databases and does not generate key phrases; instead, it lets you collect relevant information directly from the source services.

The application allows you to obtain information from most popular Russian-language and foreign sources, with the help of which you can obtain the most complete selection of high-frequency, mid-frequency and, of course, low-frequency phrases.

You can work with the results either without leaving the program or by exporting them to Microsoft Excel or CSV format.

A convenient classic tabular presentation of data with filtering capabilities and additional pop-up editors allows you to analyze additional information.

Key Collector is actively used not only in contextual advertising, but also in SEO.

What can you do with Key Collector?

Setting up Key Collector for parsing Yandex Wordstat data

First you need to buy and download the program from the following website: http://www.key-collector.ru/.

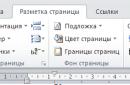

- 1 Go to the program settings: click the gear icon in the block of main program tools in the upper left corner, as shown in Figure 1.

Fig. 1 - Setting up the program

- 2 Select the “Parsing” tab, which contains several more tabs, and choose Yandex.Direct.

Fig. 2 - Setting up Yandex.Direct parsing

- 3 Set up an account. To do this, create a Yandex email account intended only for this program, so that a block does not affect your main account, as shown in Figure 3.

It is worth noting that authorization is mandatory and must be done through accounts created in the Yandex.Direct service.

Fig. 3 - Setting up a Yandex.Direct account

- 4 Once everything is set up, start a new project: click “New Project” and give it a name, as shown in Figures 4 and 5.

Fig. 4 - Getting started

Fig. 5 - Project name

- 5 Specify the parsing region; in this case the region is “Ekaterinburg”. To do this, click the corresponding input field at the bottom of the program window, opposite the red histogram, and select the required city, as shown in Figures 6 and 7.

Fig. 6 - Region selection

Fig. 7 - Selecting a region, for example Ekaterinburg

- 6 Start parsing data from Yandex Wordstat: click the red histogram icon in the toolbar, as shown in Figure 8.

Fig. 8 - Launching parsing

- 7 In the window that opens, enter the list of main high-frequency or mid-frequency key phrases that you selected manually for the semantic core, and click the “Start collection” button, as shown in Figure 9.

Fig. 9 - Dialog box for entering key phrases

- 8 Wait for the program to finish collecting data.

The result is a long list of phrases, as shown in Figure 10.

Collection time depends on the selected region and on the keywords; it can take from several minutes to several hours.

Fig. 10 - List of all collected key phrases

- 9 Clean out irrelevant and ineffective phrases that will not produce results for the future advertising campaign.

Of course, you can filter phrases manually, clicking on each one, but this takes a lot of time, especially when there are several hundred thousand phrases. It is faster to use the special stop word filter. Click the stop word icon in the program interface, as shown in Figure 11.

Fig. 11 - Stop word filter icon

- There are two tabs, List 1 and List 2. The first list contains stop words that are in no way related to the project; the second, on the contrary, contains words that are useful for the project, as shown in Figure 12.

Fig. 12 - The two “stop words” list tabs, 1 and 2

- 10 Set the options as shown in the screenshot. With these settings the program searches every word of every phrase for matches; if a phrase even partially matches a stop word, it is highlighted in the general table once you click the “Mark in table” button, as shown in Figure 13. Next, click “Mark phrases” in the table.

Fig. 13 - Setting up “stop words”

- 11 Once the phrases containing stop words have been marked, the highlighted phrases can be safely deleted: open the “Data” tab and click “Delete marked phrases”, as shown in Figure 14.

As a result, the table will contain only phrases that are significantly more effective for the project.

Fig. 14 - Removing unnecessary phrases

- 12 Collect the exact frequencies of the key phrases in order to weed out dummy words. To do this, use Yandex.Direct statistics, which lets you collect data in batches, as shown in Figure 15.

Fig. 15 - Collecting the frequency of key phrases

Figure 16 shows the data sorted by the second column, “Frequency”.

Fig. 16 - Frequency ""

- 13 Next, export all the key phrases to Excel for further work, as shown in Figure 17.

Fig. 17 - Exporting key phrases to Microsoft Excel or CSV format
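Once the phrases are exported, they can be processed outside the program. The sketch below is only an illustration of reading such a CSV export in Python and sorting it by frequency. The column names `Phrase` and `Frequency`, the `;` delimiter and the sample rows are assumptions; check the header of your own export before reusing it.

```python
import csv
import io

# Hypothetical sample of an exported file.
# The column names and the ";" delimiter are assumptions.
EXPORT = """Phrase;Frequency
buy air conditioner;1200
air conditioner price ekaterinburg;90
air conditioner installation;450
"""

def load_phrases(text, delimiter=";"):
    """Return (phrase, frequency) pairs sorted by descending frequency."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    rows = [(row["Phrase"], int(row["Frequency"])) for row in reader]
    return sorted(rows, key=lambda item: item[1], reverse=True)

phrases = load_phrases(EXPORT)
for phrase, frequency in phrases:
    print(f"{frequency:>6}  {phrase}")
```

For a real file, the same function can be fed the result of `open(path, encoding=...).read()`; the correct encoding depends on the export settings.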

Collection of seasonality

The program allows you to collect information about the popularity of a query over the past period, build a graph based on this data, and make an assumption about the seasonality of a given query based on the data obtained.

To collect information about the seasonality of the request, click the button with the graph icon in the group of buttons “Collecting keywords and statistics”, in accordance with Figure 18.

Fig. 18 - Seasonality icon

When collecting information about the seasonality of a request, the values of the arithmetic mean frequency and its median are also calculated. You can change the period during which statistics are considered to calculate these values in the Yandex.Wordstat collection settings.
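To make the two values concrete, here is a minimal sketch of how an arithmetic mean and a median could be computed over a chosen period. The monthly figures and the `summarize` helper are hypothetical; the program's own calculation may differ in details.

```python
from statistics import mean, median

# Hypothetical monthly frequencies for one phrase, oldest month first.
monthly = [120, 110, 150, 300, 520, 900, 1100, 950, 400, 200, 130, 115]

def summarize(values, last_n=None):
    """Return (mean, median) over the last `last_n` values, or over all of them."""
    window = values[-last_n:] if last_n else values
    return mean(window), median(window)

avg, med = summarize(monthly)
print(f"mean={avg}, median={med}")
```

When the mean is far above the median, as in this made-up series, a few peak months dominate the total, which is one hint that the query may be seasonal.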

If necessary, you can get statistics grouped by weeks rather than by months. In this case, the launch should be done through the corresponding item in the drop-down menu of the Yandex.Wordstat seasonality data collection button, in accordance with Figure 19.

Fig. 19 - Type of seasonality in the table

You can view extended information about seasonality by clicking on the cell corresponding to this phrase, in accordance with Figure 20.

Fig. 20 - Seasonality chart

If necessary, you can export extended frequency data for all phrases to a CSV file. To do this, you need to use the corresponding button in the drop-down menu of the seasonality collection start button.

Collection of statistics from counters of the Yandex.Metrika statistics system

The program supports collecting statistics from counters of the Yandex.Metrika statistics system. Using Key Collector, you can collect words and traffic from a specified counter.

The process of collecting statistics from the Yandex.Metrika counter

- 1 Click the button with the service logo in the “Collection of keywords and statistics” button group and enter authorization data in the statistics system, in accordance with Figures 21 and 22.

Fig. 21 - Button for collecting statistics from the counter of the Yandex.Metrika statistics system

* To collect Yandex.Metrika statistics, you must log in to an account that has access to the counters from which statistics need to be collected. The program supports both regular and batch collection of Yandex.Metrika statistics. When using regular collection, you can either select the required site from the drop-down list or enter its ID manually.

- 2 Select the period for which you want to obtain statistics. You can enter the period yourself or use a template (quarter, year, etc.), in accordance with Figure 22.

- The option "Update statistics for phrases existing in the table" allows you to update click-through statistics for phrases that were already in the table. For example, suppose the phrase “pen” was added to the table earlier. If the option is disabled, the program will not record the click-through value for this phrase even if it appears in the report. If the option is enabled, the program will update the value.

- The option "Do not add new phrases to the table" is an addition to the previous option. By turning it on, the program is prohibited from adding phrases to the table that were not there before. This can be useful if you simply need to update or collect click-through data for previously collected statistics, without diluting the list of phrases in the table with new phrases, which may then require additional processing.

- 3 Select the method of obtaining statistics: directly using the API or daily using the program, in accordance with Figure 22.

- In the first case, the program simply sends a request to the Yandex.Metrika API, passing the boundaries of the collection period as parameters. In response, it receives a list of phrases with click-through statistics, which can be written to the data table immediately. This mode is faster, but some low-frequency phrases may be missing because of the specifics of the API itself.

- In the second case, the program goes through the statistics for the specified period day by day and, once collection is fully complete, calculates the click-through values. Viewing the data day by day sometimes yields more phrases than the API returns in normal mode (low-frequency phrases), but it takes significantly more time. Keep in mind that if the collection process is interrupted, click-through and bounce statistics will not be calculated, so in this mode you should wait until collection is complete.

- The option “Do not add a phrase if it is already on any other tabs” can be useful if you do not want the table to include phrases that have already been processed on other tabs.
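The interplay of the three options described above can be summarized in a short sketch. It only illustrates the behavior as described in this section and is not Key Collector's actual code; the function `merge_report`, its parameters and the sample data are hypothetical.

```python
def merge_report(table, report, other_tabs=frozenset(),
                 update_existing=True, no_new_phrases=False,
                 skip_if_on_other_tabs=False):
    """Return a new table (phrase -> click-throughs) after applying a report."""
    result = dict(table)
    for phrase, clicks in report.items():
        if phrase in result:
            # "Update statistics for phrases existing in the table"
            if update_existing:
                result[phrase] = clicks
        elif no_new_phrases:
            # "Do not add new phrases to the table"
            continue
        elif skip_if_on_other_tabs and phrase in other_tabs:
            # "Do not add a phrase if it is already on any other tabs"
            continue
        else:
            result[phrase] = clicks
    return result

table = {"pen": None}                   # phrase collected earlier, no statistics yet
report = {"pen": 12, "blue pen": 3}     # hypothetical counter report

print(merge_report(table, report))
print(merge_report(table, report, update_existing=False))
print(merge_report(table, report, no_new_phrases=True))
```

With `update_existing=False` the phrase “pen” keeps its old (empty) value even though it appears in the report, which matches the example given for the first option.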

Collection of statistics from counters of the Google.Analytics statistics system

The Key Collector program supports collecting statistics from counters of the Google.Analytics statistics system.

Using it, you can collect words, number of visits, bounce percentage and landing pages from a specified counter.

The process of collecting statistics from the Google.Analytics counter.

- 1 Click the button with the service logo in the “Collecting keywords and statistics” button group; the Google Analytics statistics collection window will open, in accordance with Figure 23.

Fig.23 - Button for collecting statistics from the counter of the Google.Analytics statistics system

*To collect Google Analytics statistics, you must provide a login and password for an account that has access to the counters from which statistics will be collected. If desired, you can enable the option "Save authorization data in program settings."

After entering your login and password, click on the drop-down list with sites and select the counter whose statistics are of interest.

Then we select the period for which we collect statistics.

You can enter the period yourself or use a template (quarter, year, etc.), in accordance with Figure 24.

- The option "Update statistics for phrases existing in the table" allows you to update conversion statistics for phrases that previously existed in the table.

- The option "Do not add new phrases to the table" is an addition to the previous option. By turning it on, you can prevent the program from adding phrases to the table that were not there before. This can be useful if you just need to update or collect click-through data for previously collected statistics, without diluting the list of phrases in the table with new phrases, which may then require additional processing.

- 2 You can also choose the method of obtaining statistics: directly using the API or daily using the program, in accordance with Figure 24.

- In the first case, the program simply sends a request to the Google.Analytics API, passing the boundaries of the collection period as parameters. In response, it receives a list of phrases together with statistics on click-throughs, bounce percentage and landing pages, which can be written to the data table immediately. This mode is faster, but some low-frequency phrases may be missing because of the specifics of the API itself.

- In the second case, the program goes through the statistics for the specified period day by day and, once collection is complete, calculates the click-through values and bounce percentage. Viewing the data day by day sometimes yields more phrases than the API normally returns (low-frequency phrases), but it takes significantly more time. Keep in mind that if the collection process is interrupted, click-through and bounce statistics will not be calculated, so in this mode you should wait until collection is complete.

- The option “Do not add a phrase if it is already on any other tabs” can be useful if you need to prevent phrases from entering the table that have already been processed on other tabs.

Search tips

The program supports collecting search tips from six popular search engines (Yandex, Google, Mail, Rambler, Nigma, Yahoo), as well as from Yandex.Direct.

In order to collect search tips from search engines of interest, click the button with the icon of three multi-colored honeycombs in the “Collection of keywords and statistics” button group, in accordance with Figure 25.

Fig. 25 - “Search hints” button

In the batch word entry window that opens, you can enter the phrases of interest manually or load them from a file. You can also choose where to place the parsing results for each input phrase: on the current tab or distributed over several tabs. After this, check the boxes of the search engines in which you want to search and click the button to start collecting information, in accordance with Figure 26. For the "Yandex.Direct" checkbox to become available, you must first register one or more accounts in "Settings - Parsing - Yandex.Direct".

Fig. 26 - Window for batch input of words for collecting search tips

It is worth noting that collecting tips from Yandex.Direct has a very small limit on the number of requests. It is recommended to use the collection of search suggestions from Yandex.Direct only for a limited number of phrases if necessary.

The “With selection of endings” option allows you to collect even more hints due to the fact that the program will select the endings of words automatically.

Iterating over endings is useless if complete words are specified as the initial words, in accordance with Figure 27.

Fig. 27 - Setting up “search tips”

It is worth noting that you do not need to enable the option to select endings unless clearly necessary, because its use greatly affects the number of requests made and the total time it takes to complete a task.
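A rough sketch shows why this option multiplies the number of requests. It assumes that the program appends one letter at a time to an unfinished word; that enumeration scheme, the Latin alphabet and the function `expand_with_endings` are assumptions made for illustration, not a description of Key Collector's internals.

```python
import string

def expand_with_endings(seed, alphabet=string.ascii_lowercase):
    """Return the seed itself plus one variant per appended letter."""
    return [seed] + [seed + letter for letter in alphabet]

# Hypothetical seeds that end with an unfinished word.
seeds = ["air condit", "split syst"]
queries = [q for seed in seeds for q in expand_with_endings(seed)]

print(len(seeds), "seeds ->", len(queries), "requests")
```

Under these assumptions every seed turns into 27 requests instead of one, so a list of a few hundred seeds quickly grows into thousands of requests.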

Key Collector supports collecting similar search queries from the search results of Yandex, Google and Mail.

In order to collect similar search queries from the search engines of interest, click the button in the “Collect keywords and statistics” button group, in accordance with Figure 28.

Fig.28 - Button "Collect keywords and statistics"

In the batch word entry window that opens, you can enter phrases of interest manually or load them from a file. In this case, you can choose where you want to place the parsing results for each of the input phrases: on the current tab or distributed over several tabs. After this, you need to check the boxes of the search engine in which you want to search, and click on the button to start collecting information, in accordance with Figure 29.

Fig.29 - Batch word input window

Calculating the best word form

In order to collect the best word forms for existing key phrases, click on the button with the service logo in the “Collection of keywords and statistics” button group and select the appropriate item in the button’s drop-down menu, in accordance with Figure 30.

Fig.30 - Button "Collect keywords and statistics"

Collection of extensions for key phrases

In order to start collecting extensions (new key phrases) from the existing list of phrases, click on the button with the service logo in the “Collection of keywords and statistics” button group and select the appropriate item in the button’s drop-down menu, in accordance with Figure 31.

Fig.31 - Button "Collect keyword extensions"

In the batch entry window that opens, you can enter words of interest manually or load them from a file. In this case, you are given a choice of where to place the parsing results for each of the input phrases: on the current tab or distributed over several tabs. After clicking the start process button, the program will begin collecting data for the specified key phrases, in accordance with Figure 32.

Fig.32 - Batch word input window

If you work with huge projects (tens or hundreds of thousands of phrases) and collect phrases in batch mode, then the option “Do not update table contents after group insert and update operations during parsing” in “Settings - Interface - Other” may be useful.

So, this article describes the capabilities of Key Collector for contextual advertising, as well as a working method of how you can create a semantic core (creating a semantic core is also necessary in SEO) for an advertising campaign using key phrases parsed from Yandex Wordstat.

We can also identify dummy words that would be ineffective for the advertising campaign.

Key Collector is one of the main SEO tools. This program, created for selecting a semantic core, belongs to the category of must-have promotion tools. It is as important as a scalpel for a surgeon or a steering wheel for a pilot. After all, promotion is unthinkable without keywords.

In this article we will look at what Key Collector is and how to work with it.

What is Key Collector for?

Then go to the settings (the gear button in the panel in the upper left corner of the program window) and find the “Yandex.Direct” tab.

Click the “Add by list” button and enter the created accounts in the format Login:Password.

Attention! There is no need to add @yandex.ru after the login!

After all the operations you will get something like this:

But that's not all. Now you need to create a Google AdWords account linked to this Google account. Without an AdWords account it is impossible to receive keyword data, since that is where the data comes from. When creating the account, select your language, time zone and currency. Please note that these settings cannot be changed later.

After creating your AdWords account, open the Key Collector settings again and go to the “Google.AdWords” tab. It is recommended to use only one Google account here.

Anticaptcha

This point is not mandatory, but I still recommend using anti-captcha. Of course, if you like to enter the captcha manually every time, it's up to you. But if you don’t want to waste your time on this, find the “Anti-captcha” tab in the settings, turn on the “Antigate” radio button (or any other of the proposed options) and enter your anti-captcha key in the field that appears. If you don't have a key yet, create one.

Captcha recognition is a paid service, but 10 dollars is enough for at least a month. Moreover, if you do not parse search engines every day, this amount will last a year.

Proxy

By default, the program uses your main IP address for scraping. If you don’t need to use Key Collector often, you can forget about the proxy settings. But if you often work with the program, search engines may often give you a captcha and even temporarily ban your IP. In addition, all users who access the Internet under a common IP will suffer. This problem occurs, for example, in offices.

Users from Ukraine may also experience difficulties parsing Yandex from the main IP.

Finding free proxies that are not yet banned by search engines can be quite difficult. If you have a list of such addresses, enter them in the settings on the “Network” tab, then click the “Add line” button.

Another option is to create a file with addresses in the format IP:port, copy them to the clipboard and add them to the Collector using the “Add from clipboard” button.
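Before pasting a list, it can help to check that every line really follows the IP:port format. The following is a small illustrative sketch; the helper `parse_proxy_lines` is hypothetical, and the addresses come from the ranges reserved for documentation, so they are not real proxies.

```python
import ipaddress

def parse_proxy_lines(text):
    """Return (ip, port) tuples for the lines that look like valid IP:port pairs."""
    proxies = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        host, sep, port = line.rpartition(":")
        if not sep or not port.isdigit():
            continue                      # no port part at all
        try:
            ipaddress.ip_address(host)    # raises ValueError for a malformed IP
        except ValueError:
            continue
        if 0 < int(port) < 65536:
            proxies.append((host, int(port)))
    return proxies

SAMPLE = """
192.0.2.10:8080
198.51.100.7:3128
not-a-proxy
203.0.113.5:99999
"""

valid = parse_proxy_lines(SAMPLE)
print("\n".join(f"{ip}:{port}" for ip, port in valid))
```

The printed lines are already in the IP:port form, so they can be copied to the clipboard as they are.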

But I recommend connecting to a paid VPN from hidemy.name. In this case, an application is installed on the computer that turns the VPN on/off on demand. In this application you can also change the proxy itself and its country. Additionally, you don't have to configure anything. Just turn on the VPN and work comfortably with the Collector.

I have listed the basic settings that are needed to get started. I advise you to go through all the tabs yourself and study the program settings. Maybe you will find items in the settings that will be right for you.

Selection of keywords with Key Collector

Finally, we have reached the actual selection of the semantic core. In the main program window, click the big “New project” button. I advise you to name the project file after the site, for example site.ru, and save it in a folder created specifically for Key Collector projects, so as not to waste time searching for it later.

The Collector makes it convenient to sort keywords into groups. I find it convenient when the hierarchy of groups in a project matches the future site structure, so for me the first group (the default group) corresponds to the site's home page.

For example, let’s work with the topic “website creation Moscow”. Let's start with Yandex.

First you need to set the region:

Now you need to open “Batch collection of words from the left column of Yandex.Wordstat” and, in the window that appears, enter 5 of the most obvious key phrases for this topic (parsing will be based on them).

Now click the “Start collecting” button.

That's it, you can go make coffee or switch to other tasks. Key Collector will take some time to parse the key phrases.

The result will be something like this:

Stop words

Now you need to filter out words and phrases that are not suitable at the moment. For example, the phrase “website creation Moscow for free” will not work, since we do not provide free services. Searching for such phrases manually in a semantic core of hundreds or thousands of queries is extremely exciting, but it's better to use a special tool.

Then you need to click on the plus sign:

You've probably noticed that the program has a large number of different options when working with keywords. I explain the basic, simplest operations in Key Collector.

Working with request frequency

After filtering by negative keywords, you can start parsing by frequency.

Now we see only the column with the total frequency. To get the exact frequency for each keyword, you need to enter it in Wordstat in the quote operator - “keyword”.

In Collector this is done as follows:

If necessary, you can collect frequency with the “!word” operator.

Then sort the list by the quoted ("") frequency column and remove words with a frequency below 10 (sometimes 20-30).

Second way to collect frequency (slower):

If you know for sure that a frequency below a certain value does not interest you, you can set a threshold in the program settings. In this case, phrases with a frequency below the threshold will not be included in the list at all. But this way you can miss promising phrases, so I don’t use this setting and I don’t recommend it. However, use your own discretion.
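The operators and the cut-off mentioned in this section can be illustrated with a few lines of Python. This is a sketch for clarity only: the helper names and the sample frequencies are hypothetical, and the threshold of 10 is simply the value suggested above.

```python
def quoted(phrase):
    """Wrap a phrase in the quote operator: "keyword"."""
    return f'"{phrase}"'

def exact_forms(phrase):
    """Prefix every word with the "!" operator: !word."""
    return " ".join(f"!{word}" for word in phrase.split())

def drop_below(frequencies, threshold=10):
    """Keep only phrases whose quoted frequency reaches the threshold."""
    return {p: f for p, f in frequencies.items() if f >= threshold}

# Hypothetical quoted frequencies collected for three phrases.
quoted_frequency = {
    "website creation moscow": 340,
    "website creation moscow cheap": 25,
    "website creation moscow at night": 2,
}

kept = drop_below(quoted_frequency)
print(quoted("website creation moscow"))
print(exact_forms("website creation moscow"))
print(sorted(kept, key=kept.get, reverse=True))
```

Filtering after collection, as here, keeps the full list available for review, which is the reason given above for not setting the threshold in the program settings.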

The result is a semantic core more or less suitable for subsequent work:

Please note that this semantic core is just an example created only to demonstrate how the program works. It is not suitable for a real project, as it is poorly developed.

Right column of Yandex.Wordstat

Sometimes it makes sense to parse the right column of Wordstat (queries similar to “your request”). To do this, click on the appropriate button:

Google and Key Collector

Queries from Google statistics are parsed in a similar way to Yandex. If you have created a Google account and an AdWords account (as we remember, only one Google account is not enough), click on the appropriate button:

In the window that opens, enter the queries you are interested in and start the selection. Everything is similar to Wordstat parsing. If necessary, in the same window indicate additional settings specifically for Google (clicking on the question icon will display help).

As a result, you will receive the following data for AdWords:

And you can continue working with semantics.

Conclusions

We have gone through the basic settings of Key Collector (the ones without which it is impossible to start working). We also looked at the simplest, most basic examples of using the program and put together a simple semantic core using statistics from Yandex.Wordstat and Google AdWords.

As you understand, the article shows approximately 20% of all program capabilities. To master Key Collector, you need to spend several hours and study the official manual. But it's worth it.

If after this article you decide that it is easier to order a semantic core from specialists than to figure it out yourself, write to me through the page and we will discuss the details.

And a bonus video: a dude named Derek Brown plays the saxophone masterfully. I even went to his concert during the jazz festival, it was really cool.

Dear friends, today I want to talk about how to effectively clean search queries in the Key Collector program http://www.key-collector.ru/.

To clean up the semantic core, I use the following methods:

- Cleaning the semantic core using regular expressions.

- Removal using a list of stop words.

- Removal using word groups.

- Filter cleaning.

Using them will allow you to quickly and efficiently clean up the list of collected keywords and remove all phrases that are not suitable for your site.

To show everything clearly, I decided to record a video tutorial:

It is better to watch the review in full-screen mode in 720 HD quality. Also, don't forget to subscribe to my channel on YouTube so you don't miss new videos.

I'll show you several ways to do this. If you know other ways, please leave a comment. I use all the methods described myself. They save me a lot of time.

So, let's go.

Regular expressions significantly expand the ability to select queries and save time.

Let's say we need to select all search queries that contain numbers.

To do this, click on the indicated icon in the “Phrase” column:

Select the option “satisfies the regex” and insert the following regular expression into the field:

All you have to do is click the "Apply" button and you will receive a list of all queries that contain numbers.
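To try the idea outside the program, here is a minimal Python sketch. The pattern `\d` (any digit) is an assumption: it is one expression that matches queries containing numbers, not necessarily the one used in the screenshot, and Key Collector's regex dialect may differ in details from Python's `re` module.

```python
import re

# Assumed pattern: matches any query that contains at least one digit.
HAS_DIGIT = re.compile(r"\d")

queries = [
    "air conditioner 12000 btu",
    "buy air conditioner",
    "windows 10 download",
]

with_numbers = [q for q in queries if HAS_DIGIT.search(q)]
print(with_numbers)
```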

I like to use regular expressions to find search terms that are questions.

For example, if you specify a regular expression like this:

Then we get a list of all queries that begin with the word “how” (as well as with the various forms of the word “which”):

Such queries are great for informational articles, even if the site is commercial.

If you use this expression:

free$

Then we get all requests that end with the word “free”:

Thus, you can immediately get rid of freebie lovers :) No, how can you type the request “free air conditioning”? The thirst for freebies has no boundaries. It’s like in that joke “I’ll accept a Bentley as a gift” 😉 . Okay, we need to be more serious.
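The two anchors used in these examples, `^` for the start of a query and `$` for its end, can be checked quickly in Python. The end pattern `free$` is the one given above; the question pattern `^(how|which)\s` is an assumption written for this illustration, and the sample queries are made up.

```python
import re

ENDS_WITH_FREE = re.compile(r"free$")                  # pattern from the text
STARTS_WITH_QUESTION = re.compile(r"^(how|which)\s")   # assumed pattern

queries = [
    "how to install an air conditioner",
    "air conditioner for free",
    "free air conditioner repair manual",
    "which air conditioner to choose",
]

freebies = [q for q in queries if ENDS_WITH_FREE.search(q)]
questions = [q for q in queries if STARTS_WITH_QUESTION.search(q)]

print(freebies)
print(questions)
```

Note that `free$` also matches any query whose last word merely ends in "free", such as "carefree", so the result is worth a quick look before deleting anything.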

If we need to find all phrases that contain letters of the Latin alphabet, then the following expression will be useful:

Here are examples of other regular expressions I use:

^(\S+?\s\S+?)$ - all queries consisting of 2 words

^(\S+?\s\S+?\s\S+?)$ - queries consisting of 3 words

^(\S+?\s\S+?\s\S+?\s\S+?)$ - queries consisting of 4 words

^(\S+?\s\S+?\s\S+?\s\S+?\s\S+?)$ - queries consisting of 5 words

^(\S+?\s\S+?\s\S+?\s\S+?\s\S+?\s\S+?)$ - queries consisting of 6 words

^(\S+?\s\S+?\s\S+?\s\S+?\s\S+?\s\S+?\s\S+?)$ - queries consisting of 7 words

^(\S+?\s\S+?\s\S+?\s\S+?\s\S+?\s\S+?\s\S+?\s\S+?)$ - queries consisting of 8 words

Searching for queries consisting of 6 or more words is useful, as they often contain a lot of junk phrases.
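The expressions above all follow one scheme, so they can be generated rather than typed by hand. The sketch below builds an equivalent, shorter pattern with a repetition count; the helpers `n_words_pattern` and `at_least_n_words` are hypothetical, the syntax is that of Python's `re` module, and whether Key Collector accepts the `{n}` form should be checked in the program itself.

```python
import re

def n_words_pattern(n):
    """Pattern for queries of exactly n words separated by single whitespace characters."""
    return re.compile(r"^\S+(?:\s\S+){%d}$" % (n - 1))

def at_least_n_words(n):
    """Pattern for queries of n or more words."""
    return re.compile(r"^\S+(?:\s\S+){%d,}$" % (n - 1))

queries = [
    "buy conditioner",
    "buy air conditioner",
    "where to buy a cheap air conditioner today",
]

two_words = [q for q in queries if n_words_pattern(2).match(q)]
long_tail = [q for q in queries if at_least_n_words(6).match(q)]

print(two_words)
print(long_tail)
```

The second helper covers the "6 or more words" case with a single expression instead of a separate one for each length.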

The program has another option to find such queries - just select the desired item from the drop-down menu below:

2. List of stop words

To clean up search queries, it makes sense to create a list of unwanted words that you want to remove from the collected queries.

For example, if you have a commercial website, you can use the following stop words:

free

download

abstract

I deliberately write some words only partially in order to cover all possible options. For example, using the stop word "free" will prevent you from collecting requests containing:

for free

free

The stop word “download” will keep out queries that include:

download

downloading

In Key Collector, on the “Data collection” tab, go to the “Stop words” item:

And add unwanted words through the “Add as a list” or “Load from file” options:

By going to the main program window, we will see how many requests are marked for the specified stop words:

All that remains is to find the marked queries, right-click on them and select “Delete marked lines”:

We are not interested in comrades who want air conditioners for free :)

You don’t even have to look for an example of a marked query, but immediately right-click on any query, even one that is not marked, and select “Delete marked lines.”

I also actively use city names as stop words. For example, I need to collect requests only for Moscow. Therefore, using stop words with city names will prevent you from collecting queries that contain the names of other cities.

Here are some examples of such stop words:

Saint

Peter

Peter

All these words let you avoid collecting queries that contain the various names for St. Petersburg. As in the previous example, I use abbreviated versions of the city names.
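The effect of partial stop words is easy to reproduce in a few lines. The sketch below marks a phrase when any stop word occurs anywhere inside it; this plain substring matching is an assumption about how the partial-match mode behaves, and the function `mark_by_stop_words` and the sample phrases are hypothetical.

```python
def mark_by_stop_words(phrases, stop_words):
    """Split phrases into (marked, kept) using case-insensitive substring matching."""
    stops = [s.lower() for s in stop_words]
    marked, kept = [], []
    for phrase in phrases:
        lowered = phrase.lower()
        target = marked if any(s in lowered for s in stops) else kept
        target.append(phrase)
    return marked, kept

stop_words = ["free", "download", "saint", "peter"]
phrases = [
    "buy air conditioner moscow",
    "air conditioner for free",
    "air conditioner Saint Petersburg",
    "download air conditioner manual",
]

marked, kept = mark_by_stop_words(phrases, stop_words)
print("marked:", marked)
print("kept:  ", kept)
```

Short stems give wide coverage but can also catch unrelated words (for example, "free" is contained in "freezer"), so it is worth reviewing the marked phrases before deleting them.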

I also advise using numbers from previous years as stop words, since almost no one will type queries with them:

I will share with you my list of stop words, which contains:

- cities of Russia

- cities of Ukraine

- cities of Belarus

- cities of Kazakhstan

And also my list of common stop words (free, download, abstract, pdf, etc.).

Anyone can obtain a complete list of stop words absolutely free of charge.

I use this method very actively. In any topic there will be queries that cannot be removed using the same stop words or groups of words.

For example, stop words do not take into account the variety of word forms that can exist.

Let's say your company sells air conditioners. However, it does not provide services such as refueling and repairs.

When viewing queries, you can send inappropriate words to the list of stop words using the indicated icon:


But this will not cover queries that contain the words “refill”, “refueling”, etc.

In order to use the full range of similar requests that you want to delete, and save yourself from unnecessary work, do the following.

When viewing the list of queries, some words will not be covered, as in the example above.

I open a text file and enter only part of the word “refueling” in order to cover all possible word forms based on it:

As a result, I get a list of search queries with all possible variants of the word “refueling”:

To reset the quick filter, click on the indicated checkbox:

This method allows you to directly delete all word forms of queries that do not suit you while working. The main thing is to use shortened versions of words for maximum coverage.

In many topics, some keyword sources, such as search suggestions, end up yielding a lot of garbage queries. You still need to use suggestions, since they contain great keywords, but you also need to clean them up.

This method makes sense for quickly cleaning such queries.

Click on the indicated icon at the top of the “Source” column:

After that, select the desired source. I usually work with suggestions from different search engines:

You can work with the suggestions of each search engine separately, or you can add a condition:

Use “OR” instead of “AND” and select several suggestion sources at once:

As a result, you get a list of queries from search suggestions from several sources at once - Yandex, Google, etc.
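In code terms, the “OR” condition is simply "the source is any one of the selected values". A small sketch, where the row layout and the source labels are hypothetical and not Key Collector's export format:

```python
def filter_by_sources(rows: list[dict], sources: set[str]) -> list[dict]:
    """Keep rows whose source equals ANY of the given sources (an OR condition)."""
    return [row for row in rows if row["source"] in sources]


# Hypothetical rows: each query remembers where it was collected from.
rows = [
    {"phrase": "lawyer consultation", "source": "Wordstat"},
    {"phrase": "lawyer consultation free online", "source": "Yandex suggestions"},
    {"phrase": "lawyer consultation near me", "source": "Google suggestions"},
]

suggestions_only = filter_by_sources(rows, {"Yandex suggestions", "Google suggestions"})
```

With “AND”, a row would need to come from every selected source at once, which a single-source row never does, so the result would be empty.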

From my own experience, I can say that cleaning queries using such a list based on sources is much faster and more efficient.

Everyone knows this method. It consists of simply selecting one or more queries with a checkmark, right-clicking and selecting “Delete selected lines”:

I use this method at the final stage. After all the cleaning, you need to review every query again and manually delete those that are unsuitable but slipped through all the previous filters.

So to speak, this is the final “polishing” of the semantic core :)

To those who use Key Collector for this, I suggest using a database of stop words to clean out the garbage. Everyone else can contact me, and then you won't have to suffer through sorting and grouping thousands of phrases, I'll do it all for you :)

Stop word databases for Key Collector

I assembled this database from scraps and fragments of stop-word lists for Key Collector that can be found on the Internet. In my opinion, it is the most complete list of negative keywords available today, so I strongly recommend using it to clean the semantic core.

- List of stop words for KeyCollector for all cities of Russia, Ukraine and Belarus.

- Lists of negative keywords for filtering: XXX topics, “Do it yourself”, repair, humor, etc.

- List of male and female names.

- Stop words for Key Collector broken down by topic (!) – there really aren't many topics, but still.

These databases are really enough to clear 95% of the garbage you encounter when collecting semantics, although you still have to do some manual work. By using these stop words, I started saving hours on cleaning cores, which used to be a real pain!

I started writing this article quite a long time ago, but just before publication it turned out that my colleagues in the profession were ahead of me and posted almost identical material.

At first, I decided not to publish my article, since the topic was already well covered by more experienced colleagues. Mikhail Shakin described 9 ways to clean queries in KC, and Igor Bakalov recorded a video about analyzing implicit duplicates. However, after some time, having weighed all the pros and cons, I concluded that my article perhaps has a right to exist and may be useful to someone - don't judge too harshly.

If you need to filter a large database of keywords consisting of 200k or 2 million queries, then this article can help you. If you work with small semantic cores, then most likely the article will not be particularly useful for you.

We will consider filtering a large semantic core using the example of a sample consisting of 1 million queries on a legal topic.

What do we need?

- Key Collector (hereinafter referred to as KC)

- At least 8 GB of RAM (otherwise we will face hellish slowdowns, a spoiled mood, hatred, anger and rivers of blood in the eye capillaries)

- A general list of stop words

- Basic knowledge of regular expression language

If you are completely new to this business and are not best friends with KC, then I strongly recommend that you familiarize yourself with the internal functionality described on the official pages of the site. Many questions will disappear on their own, and you will also pick up a little about regular expressions.

So, we have a large database of keys that need to be filtered. You can get the database through independent parsing, as well as from various sources, but that’s not what we’re talking about today.

Everything that will be described below is relevant based on the example of one specific niche and is not an axiom! In other niches, some of the actions and stages may differ significantly! I do not pretend to be a Semantics Guru, but only share my thoughts, findings and considerations on this matter.

Step 1. Remove Latin characters

We delete all phrases that contain Latin characters. Typically, such phrases have negligible frequency (if any) and are either erroneous or irrelevant.

All manipulations with phrase selections are done through this treasured button:

If you took a core of a million queries and reached this step, the eye capillaries may start to burst here, because on weak computers/laptops any manipulation of large selections can, should and will be ungodly slow.

Select/mark all phrases and delete.
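If the database is too heavy for a weak machine, the same check can be run on an exported list. A minimal sketch, assuming "Latin characters" means the letters A-Z in either case:

```python
import re

# Any ASCII Latin letter, upper or lower case.
LATIN = re.compile(r"[A-Za-z]")


def has_latin(phrase: str) -> bool:
    """True if the phrase contains at least one Latin letter."""
    return LATIN.search(phrase) is not None


# Hypothetical sample phrases.
phrases = ["консультация юриста", "юрист online", "адвокат москва", "urist консультация"]
cleaned = [p for p in phrases if not has_latin(p)]
```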

Step 2. Remove special characters

The operation is similar to removing Latin characters (you can do both at once). However, I recommend doing everything step by step and checking the results with your own eyes rather than cutting from the shoulder, because even in a niche you think you know inside out there are tasty queries that may fall under the filter and that you simply didn't know about.

A small tip: if your sample has many good phrases that contain a comma or another character, just add that character to the exceptions and that's it.

Another option (the samurai way)

- Export all the needed phrases that contain special characters

- Remove them in KC

- In any text editor, replace the character with a space

- Load them back.

Now the phrases are clean, their reputation is restored, and selecting by special characters will no longer catch them.
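The text-editor step of the samurai way can also be scripted. A sketch under the assumption that the exported phrases are already in a plain Python list; collapsing the leftover double spaces is my own addition:

```python
import re


def replace_char_with_space(phrases: list[str], char: str) -> list[str]:
    """Replace one special character with a space and tidy up whitespace."""
    cleaned = []
    for phrase in phrases:
        phrase = phrase.replace(char, " ")
        # Collapse runs of whitespace left behind by the replacement.
        phrase = re.sub(r"\s+", " ", phrase).strip()
        cleaned.append(phrase)
    return cleaned


# Hypothetical exported phrases that contain a comma.
exported = ["divorce, division of property", "alimony,amount"]
ready_to_import = replace_char_with_space(exported, ",")
```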

Step 3. Remove repetitions of words

And again we use the functionality built into KC by applying the rule:

There is nothing to add here - everything is simple. We kill the garbage without hesitation.
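For reference, here is what such a rule might look like in code. I am assuming that a "repetition" means the same word appearing more than once in a phrase; the exact logic of KC's built-in rule may differ.

```python
def has_repeated_word(phrase: str) -> bool:
    """True if any word occurs more than once in the phrase."""
    words = phrase.lower().split()
    return len(words) != len(set(words))


# Hypothetical sample phrases.
phrases = ["lawyer lawyer consultation", "lawyer consultation online"]
without_repeats = [p for p in phrases if not has_repeated_word(p)]
```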

If your task is strict filtering that removes as much garbage as possible, even at the cost of some good queries, you can combine the first three steps into one.

It will look like this:

IMPORTANT: Don't forget to switch "AND" to "OR"!

Step 4. Remove phrases consisting of 1 and 7+ words

Someone may object and talk about how great single-word queries are. No problem, keep them. But in most cases manually filtering single-word queries takes a lot of time, and the ratio of good to bad ones is typically 1 to 20, not in our favor. Besides, pushing them into the TOP with the methods I collect such cores for is in the realm of science fiction. So, with a heavy heart, we send the single-word queries to their forefathers.

I foresee the question from many: why delete long phrases? My answer: phrases of 7 or more words mostly have a spammy structure, have no frequency and, taken together, produce a lot of duplicates, specifically thematic duplicates. Here is an example to make it clearer.

In addition, the frequency of such queries is so low that server space often costs more than the return from them. And if you look at the TOPs for long phrases, you will not find exact occurrences either in the text or in the tags, so using such long phrases in our semantic core makes no sense.
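The length rule from this step, sketched in Python. Words are counted by splitting on whitespace, which is my assumption about how the phrase length is measured:

```python
def keep_by_length(phrase: str, min_words: int = 2, max_words: int = 6) -> bool:
    """Keep phrases of 2 to 6 words; drop single words and 7+ word phrases."""
    return min_words <= len(phrase.split()) <= max_words


# Hypothetical sample phrases.
phrases = [
    "lawyer",
    "lawyer consultation online",
    "how to file a claim in court for free online today",
]
kept = [p for p in phrases if keep_by_length(p)]
```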

Step 5. Remove implicit duplicates

We set up the cleaning in advance by adding our own phrases. Here is a link to my list; if you have something to add, write to me and we will strive for perfection together.

If we skip this and use the default list kindly built into the program by the creators of KC, we will end up with results like these in the list, and they are, in fact, real duplicates.
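To show why the list of ignored words matters, here is a rough sketch. It assumes that implicit duplicates are phrases made of the same words in a different order once the ignored words are dropped; that is my reading of the feature, not a description of KC's internals, and the ignored-word set below is hypothetical.

```python
from collections import defaultdict

# Hypothetical set of ignored function words; extend it for your niche.
IGNORED = {"in", "on", "for", "the", "a", "of", "to"}


def duplicate_key(phrase: str, ignored: set[str] = IGNORED) -> frozenset[str]:
    """Order-independent key: the set of meaningful words in the phrase."""
    return frozenset(w for w in phrase.lower().split() if w not in ignored)


def group_implicit_duplicates(phrases: list[str], ignored: set[str] = IGNORED) -> list[list[str]]:
    """Return groups of phrases that share the same key (size > 1 only)."""
    groups = defaultdict(list)
    for phrase in phrases:
        groups[duplicate_key(phrase, ignored)].append(phrase)
    return [group for group in groups.values() if len(group) > 1]


phrases = [
    "lawyer consultation in moscow",
    "moscow lawyer consultation",
    "consultation of a lawyer moscow",
    "divorce lawyer",
]
duplicates = group_implicit_duplicates(phrases)
```

With an empty ignored set, only the second phrase would lose its partners: the first and third would no longer share a key with it, which is exactly the kind of duplicate that slips through a short default list.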

We could run smart grouping, but for it to work correctly we need to collect frequencies, and in our case that is not an option. Collecting frequencies for 1 million keys, or even for 100k, requires a pack of private proxies, an anti-captcha service and a lot of time. Even 20 proxies are not enough: within an hour captchas will start appearing no matter what you do. It will take a lot of time and, by the way, the anti-captcha budget will take a noticeable hit too. And why collect frequencies for garbage phrases that can be filtered out without much effort?

If you still want to filter phrases with smart grouping, collecting frequencies and gradually removing garbage, I will not describe the process in detail - watch the video I mentioned at the very beginning of the article.

Here are my cleaning settings and steps:

Step 6. Filter by stop words

In my opinion, this is the most tedious stage. Drink some tea, take a smoke break (this is not a recommendation: better to quit smoking and eat a cookie) and, with fresh energy, sit down to filter the semantic core by stop words.

There is no need to reinvent the wheel and compile stop-word lists from scratch. Ready-made solutions exist. In particular, the list here is more than enough as a basis.

I advise you to copy the spreadsheet to your own PC. What if the Shestakov brothers decide to keep “the precious” for themselves and close access to the file? As the saying goes, “Just because you're paranoid doesn't mean you're not being watched...”

Personally, I split the stop words into separate files for specific tasks; an example is in the screenshot.

The “General List” file contains all stop words at once. In Key Collector, open the stop words interface and load the list from the file.

I enable partial matching and check the box “Look for matches only at the beginning of words.” These settings are especially relevant with a huge volume of stop words, because many of them are only 3-4 characters long. With other settings, you can easily filter out a lot of useful and necessary phrases.

If we do not check this box, the vulgar stop word “fuck” will be found in phrases such as “state insurance consultation”, “how to insure deposits”, etc. Here is another example: the stop word “RB” (Republic of Belarus) will flag a huge number of phrases, such as “compensation for damages, consultation”, “bringing a claim in arbitration proceedings”, etc. (In the original Russian phrases, the stop word occurs as a substring inside a longer word.)

In other words, we want the program to flag only phrases where a stop word occurs at the beginning of a word. The wording grates on the ear, but as the saying goes, you can't take a word out of a song.
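The difference between the two modes is easy to see in a small sketch. The function names are mine, and the regular expression is only one possible way to express "at the beginning of a word"; it says nothing about how KC implements the checkbox.

```python
import re


def matches_anywhere(phrase: str, stop_word: str) -> bool:
    """Plain partial match: the stop word may sit inside any word."""
    return stop_word.lower() in phrase.lower()


def matches_word_start(phrase: str, stop_word: str) -> bool:
    """Partial match that must start where a word starts."""
    # (?<!\w) means: no letter, digit or underscore right before the match.
    pattern = r"(?<!\w)" + re.escape(stop_word)
    return re.search(pattern, phrase, flags=re.IGNORECASE) is not None


phrase = "bringing a claim in arbitration proceedings"

# "rb" hides inside "arbitration", so the plain match flags a good phrase...
flagged_plain = matches_anywhere(phrase, "rb")
# ...while the word-start match leaves it alone.
flagged_strict = matches_word_start(phrase, "rb")
```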

I would like to note separately that this setting significantly increases the time it takes to check stop words. With a large list, the process can take 10 or even 40 minutes, all because of this checkbox, which increases the time spent searching for stop words in phrases tenfold or more. However, this is the most adequate filtering option when working with a large semantic core.

After going through the basic list, I recommend checking by eye whether any needed phrases got caught, and I'm sure some will, because general lists of basic stop words are not universal and have to be refined separately for each niche. This is where the “dancing with a tambourine” begins.

We leave only the selected stop words in the working window, this is done like this.

Then click on “group analysis”, select the “by individual words” mode and see what is superfluous in our list due to inappropriate stop words.

We remove inappropriate stop words and repeat the cycle. Thus, after some time we will “tailor” the universal public list to our needs. But that is not all.

Now we need to select stop words that are found specifically in our database. When it comes to huge databases of keywords, there is always some kind of “branded garbage”, as I call it. Moreover, this can be a completely unexpected set of nonsense and you have to get rid of it individually.

To solve this problem, we again turn to the Group Analysis functionality, but this time we go through all the phrases remaining in the database after the previous manipulations. We sort by the number of phrases and, yes, by hand and by eye, look through all the groups down to those with 30-50 phrases. I mean the second column, “number of phrases in the group”.
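Roughly the same view can be reproduced on an exported list: count, for every word, how many phrases contain it, and sort by that count. This is a sketch of the idea only, not of how KC builds its groups.

```python
from collections import Counter


def word_groups(phrases: list[str]) -> list[tuple[str, int]]:
    """Return (word, number of phrases containing it), largest groups first."""
    counts = Counter()
    for phrase in phrases:
        # set() so a word repeated inside one phrase is counted once.
        counts.update(set(phrase.lower().split()))
    return counts.most_common()


# Hypothetical sample phrases.
phrases = [
    "lawyer consultation",
    "free lawyer",
    "free consultation online",
    "lawyer moscow",
]
groups = word_groups(phrases)
```

Words at the top of the list are the ones worth reviewing first, since a single stop word there removes the most phrases.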

I hasten to reassure the faint of heart: the seemingly endless scroll bar will not force you to spend a week on filtering. Scroll through 10% of it and you will already reach groups that contain no more than 30 queries, and only true connoisseurs of perversion should filter at that level.

Directly from the same window we can add all the garbage to the stop words (the shield icon to the left of the select box).

Instead of adding all these stop words (and there are many more, I just didn't want to include a vertically long screenshot), we gracefully add the root “filter” and immediately cut off all the variations. As a result, our stop-word lists will not grow to enormous sizes and, most importantly, we will not waste extra time searching for them. With large volumes, this is very important.

Step 7. Remove 1 and 2 character “words”

I can't find an exact term for this type of character combination, so I called them “words”. Perhaps a reader will suggest a better-fitting term, and I'll replace it. That's how tongue-tied I am.

Many will ask, “why do this at all”? The answer is simple, very often in such arrays of keywords there is garbage of the type:

A common feature of such phrases is 1 or 2 characters that have no meaning (the screenshot shows an example with 1 character). This is what we will filter. There are pitfalls here, but first things first.

How to remove all words consisting of 2 characters?

To do this, we use a regular expression.

Extra tip: always save your regular expression templates! They are saved not within the project but within KC as a whole, so they will always be at hand.

(^|\s+)(..)(\s+|$) or (^|\s)(1,2)(\s|$)

(st | federal law | uk | on | rf | whether | by | st | not | un | to | from | for | by | from | about)

Here is my version, customize it to suit your needs.

The second line is exceptions; if you do not enter them, then all phrases containing combinations of characters from the second line of the formula will be included in the list of candidates for deletion.

The third line excludes phrases at the end of which “рф” appears, because often these are normal, useful phrases.

Separately, I would like to clarify that the option (^|\s+)(..)(\s+|$) will highlight everything – including numerical values. While the regular expression (^|\s)(1,2)(\s|$) will only affect alphabetic ones, special thanks to Igor Bakalov for it.

We apply our expression and remove the garbage phrases.
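Here is how the same logic might look in Python. The letters-only class `[^\W\d_]` and the lookahead at the end are my own choices for the sketch, not the exact patterns from the article, and the exception set is a shortened, hypothetical version of the list above.

```python
import re

# A standalone token of 1-2 letters: preceded by start/whitespace,
# followed by whitespace/end. [^\W\d_] matches letters only (no digits).
SHORT_TOKEN = re.compile(r"(?:^|\s)([^\W\d_]{1,2})(?=\s|$)")

# Hypothetical exceptions: short tokens that are meaningful in a legal niche.
EXCEPTIONS = {"st", "uk", "rf", "on", "by", "to", "un"}


def has_junk_short_token(phrase: str, exceptions: set[str] = EXCEPTIONS) -> bool:
    """True if the phrase has a 1-2 letter token that is not an exception."""
    tokens = SHORT_TOKEN.findall(phrase.lower())
    return any(token not in exceptions for token in tokens)


# Hypothetical sample phrases.
phrases = ["claim to court xz", "st 159 uk rf", "lawyer consultation"]
candidates_for_deletion = [p for p in phrases if has_junk_short_token(p)]
```

The lookahead `(?=\s|$)` does not consume the trailing space, so two short tokens standing next to each other are both found.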

How to remove all words consisting of 1 character?

Here everything is somewhat more interesting and not so clear.

At first I tried to adapt the previous option, but it did not manage to weed out all the garbage. Nevertheless, this particular scheme will suit many people, so try it.

(^|\s+)(.)(\s+|$)

(with | in | and | i | to | y | o)

Traditionally, the first line is the regular expression, the second line is the exceptions, and the third line excludes phrases in which the listed characters occur at the beginning of the phrase. That's logical: they are not preceded by a space, so the second line will not exclude them from the sample.

But here is the second option, simple and merciless, with which I delete all phrases containing one-character garbage. In my case it helped me get rid of a very large volume of junk phrases.

(j | c | e | n | g | w | )

I excluded from the sample all phrases where “Moscow” appears, because there were a lot of phrases like:

but I need it, you can guess why.