The purpose of the matrix (image sensor) is to convert the light flux into an electronic signal, which is then converted into a digital code and recorded on the camera’s memory card.

The matrix consists of pixels, each of which outputs an electronic signal corresponding to the amount of light falling on it.

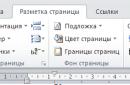

CCD and CMOS matrices differ in how they convert the signal received from each pixel: a CCD does this sequentially and with a minimum of noise, while a CMOS matrix does it quickly and with lower power consumption (and additional circuits significantly reduce its noise).

However, first things first...

There are two types of matrices: CCD and CMOS.

CCD matrix

A charge-coupled device (CCD) is so named because of the way charge is transferred between photosensitive elements: from pixel to pixel and, ultimately, out of the sensor.

Charges are shifted along the matrix row by row, from top to bottom, so that charge moves down many registers (columns) at once.

Before leaving the CCD sensor, the charge of each pixel is amplified, and the output is an analog signal whose voltage depends on the amount of light that hit the pixel. Before processing, this signal is sent to a separate (off-chip) analog-to-digital converter, and the resulting digital data is converted into bytes representing one line of the image captured by the sensor.
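As a rough illustration of this last step, the sketch below quantizes one line of analog pixel voltages into 8-bit codes, one byte per pixel, as an off-chip ADC might. The 0-1 V input range and the 8-bit depth are assumptions chosen for the example, not parameters of any particular sensor or converter.

```python
def digitize_line(voltages, v_ref=1.0):
    """Quantize one line of analog pixel voltages (0..v_ref volts) into 8-bit codes.

    v_ref and the 8-bit depth are illustrative assumptions.
    """
    codes = []
    for v in voltages:
        v = min(max(v, 0.0), v_ref)            # clip to the ADC input range
        codes.append(round(v / v_ref * 255))   # map 0..v_ref onto 0..255
    return bytes(codes)                        # one byte per pixel of the line


line = digitize_line([0.0, 0.25, 0.5, 1.0, 1.2])
print(list(line))
```

Voltages above the reference are clipped, which is one reason overexposed areas lose all detail after digitization.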

Since the charge in a CCD is transferred along a low-resistance path and is less susceptible to interference from other electronic components, the resulting signal typically contains less noise than the signal from CMOS sensors.


CMOS matrix

In a CMOS matrix (complementary metal-oxide-semiconductor), a processing circuit is located next to each pixel (sometimes mounted on the matrix itself), which increases system performance. Because no additional external processing devices are needed, CMOS matrices also have low power consumption.

Some idea of how information is read out of the matrices can be obtained from the following video.

Technologies are constantly being improved, and today the presence of a CMOS matrix in a camera or video camera indicates a higher class model. Manufacturers often focus on models with CMOS matrices.

Recently, CMOS matrices with rear placement of conductors (backside-illuminated, BSI, sensors) have become popular; they perform better when shooting in low light and have a lower noise level.

Currently, most image capture systems use CCD (charge-coupled device) matrices as the photosensitive device.

The operating principle of a CCD matrix is as follows. A matrix of photosensitive elements (the accumulation section) is formed in silicon. Each photosensitive element accumulates a charge proportional to the number of photons hitting it. Thus, over a certain time (the exposure time), a two-dimensional pattern of charges proportional to the brightness of the original image builds up in the accumulation section. The accumulated charges are first transferred to the storage section and then, line by line and pixel by pixel, to the output of the matrix.
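The readout sequence just described can be simulated in a few lines. This is a toy model under stated assumptions: charges are plain numbers, transfer is lossless, the bottom row is nearest the horizontal output register, and the left end of that register is nearest the output amplifier.

```python
def frame_transfer_readout(accumulation):
    """Toy frame-transfer CCD readout.

    accumulation: rows of charges, top row first.
    Returns (emptied accumulation section, charges in the order they reach the output).
    """
    # 1. The whole charge image is shifted into the light-shielded storage section,
    #    leaving the accumulation section empty and ready for the next exposure.
    storage = [list(row) for row in accumulation]
    emptied = [[0] * len(row) for row in accumulation]

    output = []
    # 2. Storage rows are shifted down one at a time into the horizontal register;
    #    the bottom row is nearest the register, so it is read out first.
    for row in reversed(storage):
        register = list(row)
        # 3. The register is clocked pixel by pixel into the output amplifier
        #    (left end assumed nearest the amplifier).
        while register:
            output.append(register.pop(0))
    return emptied, output


emptied, out = frame_transfer_readout([[1, 2, 3], [4, 5, 6]])
print(out)
```

The point of the storage section is visible in step 1: once the charges are moved out, the accumulation section can start the next exposure while the previous frame is still being read out.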

The size of the storage section relative to the accumulation section varies:

- a full frame (frame-transfer matrices for progressive scan);

- a half-frame (frame-transfer matrices for interlaced scan).

There are also matrices without a storage section, in which line transfer is carried out directly through the accumulation section. Such matrices obviously require an optical shutter, since otherwise light would keep falling on the elements while the charges are being transferred.

The quality of modern CCD matrices is such that the charge remains virtually unchanged during the transfer process.
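The text does not quantify "virtually unchanged". A common figure of merit is the charge transfer efficiency (CTE), the fraction of a charge packet that survives one transfer; after N transfers the surviving fraction is CTE^N. The sketch assumes that a pixel in the far corner of a 768*576 matrix needs roughly 576 + 768 transfers and compares two assumed CTE values to show why CTE must be extremely close to 1.

```python
def retained_fraction(cte, transfers):
    """Fraction of a charge packet left after a number of transfers at a given CTE."""
    return cte ** transfers


# Assumed: far-corner pixel of a 768*576 matrix, about 576 vertical + 768 horizontal shifts.
n_transfers = 576 + 768
for cte in (0.999, 0.99999):
    print(f"CTE {cte}: {retained_fraction(cte, n_transfers):.3f} of the charge retained")
```

With an assumed CTE of 0.999 only about a quarter of the charge would survive, while 0.99999 keeps almost 99%, which is the regime in which the charge can be called "virtually unchanged".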

Despite the apparent variety of television cameras, the CCD matrices used in them are practically the same, since large-scale production of CCD matrices is carried out by only a few companies: SONY, Panasonic, Samsung, Philips, Hitachi and Kodak.

The main parameters of CCD matrices are:

- dimension in pixels;

- physical size in inches (2/3, 1/2, 1/3, etc.); these numbers do not give the exact size of the sensitive area but rather designate the class of the device;

- sensitivity.

Resolution of CCD cameras

The resolution of CCD cameras is mainly determined by the size of the CCD matrix in pixels and by the quality of the lens. The camera’s electronics also have some influence (poorly designed electronics can degrade resolution, but frankly bad designs are rare these days).

One note is important here. In some cases, cameras include high-frequency spatial filters to improve apparent resolution. As a result, an image of an object obtained from a lower-resolution camera may look even sharper than an image of the same object from an objectively better camera. This is acceptable when the camera is used for visual surveillance, but completely unsuitable for measurement systems.
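The text does not say which filter a given camera uses. As a hedged illustration, one of the simplest high-frequency boost filters is a 3-tap kernel [-k, 1 + 2k, -k]. Applied to a step edge, it makes the edge look crisper but creates undershoot and overshoot, i.e. brightness values that are not present in the scene, which is exactly what corrupts measurements. The kernel form and k = 0.5 are assumptions.

```python
def sharpen(signal, k=0.5):
    """Apply a 3-tap high-frequency boost [-k, 1+2k, -k]; edge samples are repeated."""
    padded = [signal[0]] + list(signal) + [signal[-1]]
    return [
        -k * padded[i - 1] + (1 + 2 * k) * padded[i] - k * padded[i + 1]
        for i in range(1, len(padded) - 1)
    ]


edge = [10, 10, 10, 50, 50, 50]   # a dark-to-light step in brightness
print(sharpen(edge))
```

The original step spans brightness 10 to 50; the filtered version dips below 10 and peaks above 50 right at the edge, so an edge position or width measured on it would be biased.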

Resolution and format of CCD matrices

Currently, various companies produce CCD matrices covering a wide range of dimensions, from several hundred to several thousand pixels per side. A 10000x10000 matrix has even been reported, and the report emphasized not so much the cost of this matrix as the problem of storing, processing and transmitting the resulting images. As far as we know, matrices with dimensions up to 2000x2000 are now more or less widely used.
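A quick calculation shows why storage, rather than cost, becomes the issue at such sizes. The sketch assumes uncompressed frames stored at 16 bits per pixel (a hypothetical but common container for 12- or 14-bit data).

```python
def frame_bytes(width, height, bits_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bits_per_pixel // 8


for w, h in ((768, 576), (2000, 2000), (10000, 10000)):
    mb = frame_bytes(w, h, 16) / 1e6   # assumed 16 bits per pixel
    print(f"{w}x{h}: {mb:.1f} MB per frame")
```

At the assumed 16 bits per pixel, a single 10000x10000 frame takes 200 MB, versus under 1 MB for a television-format frame.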

The most widely used, or more precisely mass-produced, CCD matrices certainly include those whose resolution is oriented toward the television standard. These come mainly in two formats:

- 512*576;

- 768*576.

Matrices of about 768*576 (sometimes slightly more, sometimes slightly less) give the maximum resolution for a standard television signal. Unlike 512*576 matrices, they also have a nearly square grid of photosensitive elements and therefore equal horizontal and vertical resolution.
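The relationship between the pixel grid and the frame shape can be checked with exact fractions. Assuming the standard 4:3 television frame, the width-to-height ratio of a single cell is (4/3) × (vertical pixels / horizontal pixels):

```python
from fractions import Fraction


def pixel_aspect(h_pixels, v_pixels, frame_aspect=Fraction(4, 3)):
    """Width/height ratio of one photosensitive cell for a given frame aspect ratio."""
    return frame_aspect * Fraction(v_pixels, h_pixels)


print(pixel_aspect(768, 576))   # 1   -> square cells
print(pixel_aspect(512, 576))   # 3/2 -> cells 1.5 times wider than tall
```

A ratio of 1 means square cells and equal horizontal and vertical sampling; the 512*576 format gives cells 1.5 times wider than tall, so its horizontal resolution is correspondingly lower.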

Camera manufacturers often specify resolution in television lines (TVL). This means that the camera lets you distinguish N/2 dark vertical strokes on a light background within a square inscribed in the image field, where N is the declared number of television lines. With a standard television test chart, this is measured as follows: by adjusting the distance and focus, make the upper and lower edges of the chart image on the monitor coincide with the outer contour of the chart, marked by the vertices of the black and white prisms; then, after final fine focusing, read the number on the vertical wedge at the point where the vertical strokes first cease to be resolved. This last remark is very important, because in the image of chart fields with 600 or more lines, alternating stripes are often visible; these are in fact moiré, formed by beating between the spatial frequency of the chart lines and the grid of sensitive elements of the CCD matrix. This effect is especially pronounced in cameras with high-frequency spatial filters (see above)!
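The moiré described above is aliasing, and the folding of chart frequencies can be estimated. As a sketch under idealized assumptions (point sampling, a 4:3 frame and a 768-pixel-wide matrix, i.e. 768 × 3/4 = 576 samples across a width equal to the picture height): a chart section of N TV lines contains N/2 dark/light cycles over that width, and any pattern finer than half the sampling rate folds back to a coarser, false pattern.

```python
def apparent_tv_lines(chart_tvl, samples_per_picture_height=576):
    """TV lines an ideally point-sampled grid appears to show for a chart of chart_tvl lines."""
    cycles = chart_tvl / 2                          # TV lines count dark and light strokes
    fs = samples_per_picture_height
    alias = abs(cycles - fs * round(cycles / fs))   # fold into the 0..fs/2 band
    return 2 * alias


for tvl in (400, 550, 600, 700):
    print(tvl, "->", apparent_tv_lines(tvl))
```

With these assumptions, a 700-line section would appear as a coarser pattern of about 452 lines instead of a uniform gray, which is how moiré can be mistaken for resolved strokes. Real cells average light over their area, which weakens but does not remove the effect.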

I would like to note that, all other things being equal (mainly the lens), the resolution of black-and-white cameras is uniquely determined by the size of the CCD matrix in pixels. Thus a 768*576 camera will have a resolution of 576 television lines, although some brochures give 550 and others 600.

Lens

The physical size of the CCD cells is the main parameter that determines the requirement for the resolution of the lens. Another such parameter may be the requirement to ensure the operation of the matrix under light overload conditions, which will be discussed below.

For a 1/2 inch SONY ICX039 matrix, the pixel size is 8.6µm*8.3µm. Therefore, the lens must have a resolution better than:

$$\frac{1}{8.3 \times 10^{-3}\ \text{mm}} \approx 120\ \text{lines/mm},$$

that is, 60 line pairs per millimeter.
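The same arithmetic as a small helper: the lens must resolve one line per pixel pitch, i.e. two pixels per line pair. The function name is ours; only the 8.3 µm pitch comes from the text.

```python
def lens_requirement(pixel_pitch_um):
    """Return (lines per mm, line pairs per mm) needed to match a given pixel pitch."""
    pitch_mm = pixel_pitch_um / 1000.0
    lines_per_mm = 1.0 / pitch_mm        # one line per pixel
    return lines_per_mm, lines_per_mm / 2.0


lines, pairs = lens_requirement(8.3)     # SONY ICX039 vertical pitch
print(f"{lines:.0f} lines/mm, {pairs:.0f} line pairs/mm")
```

A smaller pitch, as on 1/3-inch matrices, raises both numbers in inverse proportion to the pitch.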

For lenses designed for 1/3-inch matrices, this value should be even higher; oddly enough, this affects neither the cost nor the aperture, since these lenses are designed to form an image on the smaller light-sensitive field of the matrix. It also follows that lenses for smaller matrices are not suitable for large matrices, because their characteristics deteriorate significantly at the edges of a large matrix. At the same time, lenses for large sensors can limit the resolution of images obtained from smaller sensors.

Unfortunately, with all the modern abundance of lenses for television cameras, it is very difficult to obtain information on their resolution.

In general, we do not often select lenses, since almost all of our Customers install video systems on existing optics (microscopes, telescopes, etc.), so our information about the lens market is fragmentary. We can only say that the resolution of simple and cheap lenses is in the range of 50-60 line pairs per mm, which is generally not enough.

On the other hand, we know that special Zeiss lenses with a resolution of 100-120 line pairs per mm cost more than $1000.

So, before buying a lens, it is necessary to test it. It should be said that most Moscow sellers provide lenses for testing. Here it is again appropriate to recall the moiré effect, which, as noted above, can be misleading about the resolution of the matrix. With respect to the lens, however, moiré in the chart sections with strokes above 600 television lines indicates that the lens has some resolution in reserve, which of course does not hurt.

One more note that may be important for those interested in geometric measurements. All lenses have some distortion (barrel- or pincushion-shaped distortion of the image geometry), and as a rule, the shorter the focal length, the greater the distortion. In our opinion, lenses with focal lengths greater than 8-12 mm have acceptable distortion for 1/3" and 1/2" cameras, although the level of “acceptability”, of course, depends on the tasks the television camera must solve.
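For measurement work, distortion is usually described by a radial model and then calibrated out. A common first-order form (the k1 term of the Brown-Conrady model) scales each point's distance from the image center by 1 + k1·r². The sketch uses normalized coordinates with the half-width equal to 1 and a hypothetical coefficient k1 = -0.05; negative k1 gives barrel distortion, positive k1 pincushion.

```python
import math


def distort(x, y, k1):
    """Apply first-order radial distortion to normalized coordinates (center at 0, 0)."""
    scale = 1.0 + k1 * (x * x + y * y)
    return x * scale, y * scale


def distortion_percent(x, y, k1):
    """Radial displacement of a point caused by distortion, in percent of its distance."""
    xd, yd = distort(x, y, k1)
    r = math.hypot(x, y)
    rd = math.hypot(xd, yd)
    return 100.0 * (rd - r) / r


# Corner of a 4:3 frame: (1.0, 0.75); k1 = -0.05 is a hypothetical lens coefficient.
print(f"{distortion_percent(1.0, 0.75, k1=-0.05):.2f} %")
```

Because the displacement grows with r², even a modest coefficient shifts the frame corners by several percent, which matters far more for measurement than for viewing.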

Resolution of image input controllers

The resolution of image input controllers should be understood as the conversion frequency of the analog-to-digital converter (ADC) of the controller, the data of which is then recorded in the controller’s memory. Obviously, there is a reasonable limit to increasing the digitization frequency. For devices that have a continuous structure of the photosensitive layer, for example, vidicons, the optimal digitization frequency is equal to twice the upper frequency of the useful signal of the vidicon.

Unlike such light detectors, CCD matrices have a discrete topology, so the optimal digitization frequency for them is determined as the shift frequency of the output register of the matrix. In this case, it is important that the controller’s ADC operates synchronously with the output register of the CCD matrix. Only in this case can the best conversion quality be achieved both from the point of view of ensuring a “rigid” geometry of the resulting images and from the point of view of minimizing noise from clock pulses and transient processes.
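The two rules can be compared numerically. The 6 MHz vidicon bandwidth is a hypothetical value, and the CCD case assumes 768 register shifts per active line of about 52 µs (the nominal active line time of 625-line video); a real controller would instead lock to the clock that actually drives the matrix's output register.

```python
def nyquist_rate(max_signal_hz):
    """Minimum sampling rate for a continuous-structure sensor such as a vidicon."""
    return 2 * max_signal_hz


def register_clock(pixels_per_line, active_line_s):
    """Sampling rate locked to a CCD output register: one sample per register shift."""
    return pixels_per_line / active_line_s


print(nyquist_rate(6e6) / 1e6, "MHz")                     # hypothetical 6 MHz vidicon bandwidth
print(round(register_clock(768, 52e-6) / 1e6, 2), "MHz")  # 768 shifts in an assumed 52 us line
```

Sampling faster than the register clock adds no new information for a CCD, while sampling out of step with it mixes neighboring pixels and clock transients into each sample.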

Sensitivity of CCD cameras

Since 1994, we have been using SONY board cameras based on the ICX039 CCD matrix in our devices. SONY’s description of this device specifies a sensitivity of 0.25 lux on the object at a lens aperture of f/1.4. Several times we have come across cameras with similar parameters (1/2 inch size, 752*576 resolution) and a declared sensitivity 10 or even 100 times higher than that of “our” SONY.

We checked these figures several times. In most cases, cameras from different companies contained the same ICX039 CCD matrix, and all the supporting microcircuits were also made by SONY. Comparative testing showed that all these cameras were almost completely identical. So what is the catch?

The whole point is the signal-to-noise ratio (S/N) at which the sensitivity is specified. In our case, SONY conscientiously specified the sensitivity at S/N = 46 dB, while other companies either did not indicate the S/N at all or indicated it in such a way that it is unclear under what conditions the measurements were made.

This is, in general, a common scourge of most camera manufacturers - not specifying the conditions for measuring camera parameters.

The fact is that as the required S/N ratio decreases, the sensitivity of the camera increases in inverse proportion to the square of the required S/N ratio:

$$I = \frac{K}{(s/n)^2}$$

where:

I - sensitivity;

K - conversion factor;

s/n - S/N ratio in linear units.

Therefore, many companies are tempted to indicate camera sensitivity at a low S/N ratio.
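Under the relation I = K/(s/n)² given above, the effect of quoting sensitivity at a lower S/N is easy to quantify. The sketch assumes that S/N in dB is 20·log10 of the amplitude ratio, as is usual for video; the alternative S/N values of 36 and 26 dB are hypothetical examples.

```python
def db_to_linear(snr_db):
    """Convert an amplitude S/N ratio in dB to linear units."""
    return 10 ** (snr_db / 20)


def sensitivity_gain(ref_snr_db, quoted_snr_db):
    """Factor by which sensitivity appears better when quoted at a lower S/N (I = K / (s/n)**2)."""
    return (db_to_linear(ref_snr_db) / db_to_linear(quoted_snr_db)) ** 2


print(round(db_to_linear(46)))   # 46 dB in linear units
for quoted in (36, 26):
    print(quoted, "dB ->", round(sensitivity_gain(46, quoted)), "x")
```

Quoting at 36 dB instead of 46 dB makes the same camera look 10 times more sensitive, and 26 dB makes it look 100 times more sensitive, which matches the 10- and 100-fold claims mentioned above.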

We can say that how well a matrix “sees” is determined by the number of electrons generated by the photons incident on its surface and by how faithfully these charges are delivered to the output. The amount of accumulated charge depends on the area of the photosensitive element and on the quantum efficiency of the matrix, while the quality of transfer depends on many factors, which often come down to one thing: readout noise. The readout noise of modern matrices is on the order of 10-30 electrons or even less!

The element areas of CCD matrices vary, but a typical value for 1/2 inch matrices for television cameras is 8.5 µm * 8.5 µm. Larger elements mean larger matrices, which increases their cost not so much because of the higher production cost itself, but because such devices are produced in series several orders of magnitude smaller. In addition, the photosensitive area depends on the matrix topology, namely on the percentage of the total crystal surface occupied by the sensitive area (the fill factor). For some special matrices, the fill factor is stated to be 100%.

Quantum efficiency (the average number of electrons by which the charge of a sensitive cell changes when one photon falls on its surface) is 0.4-0.6 for modern matrices (for some matrices without anti-blooming it reaches 0.85).
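These quantities combine in a standard back-of-the-envelope model, used here as an assumption: collected electrons = photons × quantum efficiency × fill factor; photon shot noise is the square root of the signal; read noise adds in quadrature. Dark current and fixed-pattern noise are ignored, and QE = 0.5, a 100% fill factor and 20 electrons of read noise are illustrative values within the ranges quoted above.

```python
import math


def pixel_snr(photons, qe=0.5, fill_factor=1.0, read_noise_e=20.0):
    """S/N of one cell under a shot-noise + read-noise model (dark current ignored)."""
    signal_e = photons * qe * fill_factor               # photoelectrons collected
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)   # shot and read noise in quadrature
    return signal_e / noise_e


for photons in (100, 1_000, 10_000, 100_000):
    snr = pixel_snr(photons)
    print(f"{photons:>7} photons: S/N = {snr:6.1f} ({20 * math.log10(snr):.1f} dB)")
```

Under these assumptions, S/N around 46 dB requires on the order of 10^5 photons per cell, and at low light levels the read noise, not the photon count, dominates.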

Thus, the sensitivity of CCD cameras, referred to a given S/N value, has come close to the physical limit. In our estimate, typical sensitivity values of general-purpose cameras at S/N = 46 dB lie in the range of 0.15-0.25 lux of illumination on the object at a lens aperture of f/1.4.

For this reason, we do not recommend blindly trusting the sensitivity figures in television camera descriptions, especially when the measurement conditions are not given. If the specification of a camera costing up to $500 claims a sensitivity of 0.01-0.001 lux in television mode, you are looking at, to put it mildly, incorrect information.

About ways to increase the sensitivity of CCD cameras

What do you do if you need to image a very faint object, such as a distant galaxy? One way is to accumulate the image over time (i.e., use a long exposure). This method can significantly increase the sensitivity of a CCD. Of course, it can be applied only to stationary objects or in cases where their motion can be compensated, as is done in astronomy.

Fig. 1. Planetary nebula M57.

Telescope: 60 cm; exposure: 20 s; temperature during exposure: 20 °C.

At the center of the nebula there is a stellar object of magnitude 15.

The image was obtained by V. Amirkhanyan at the Special Astrophysical Observatory of the Russian Academy of Sciences.

It can be stated with reasonable accuracy that the sensitivity of CCD cameras is directly proportional to the exposure time.

For example, with an exposure of 1 s instead of the standard 1/50 s, sensitivity increases 50 times, i.e. a camera rated at 0.25 lux becomes sensitive to 0.005 lux.
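The scaling rule can be written down directly. This sketch assumes that the signal grows linearly with exposure and neglects dark current, which is discussed next.

```python
def min_illumination(base_lux, base_exposure_s, exposure_s):
    """Minimum illumination for the same S/N when the exposure changes.

    Assumes signal grows linearly with exposure; dark current is neglected.
    """
    return base_lux * base_exposure_s / exposure_s


print(round(min_illumination(0.25, 1 / 50, 1.0), 6))   # 1 s instead of 1/50 s
```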

Of course, there are problems along this path, first of all the dark current of the matrix, which produces charges that accumulate together with the useful signal. The dark current depends on the crystal manufacturing technology, on the overall level of the process and, to a very large extent, on the operating temperature of the matrix itself.

Usually, to achieve long accumulation times, on the order of minutes or tens of minutes, the matrices are cooled to between -20 and -40 °C. The problem of cooling matrices to such temperatures has been solved, but it cannot be called simple, since there are always design and operational problems associated with fogging of the protective glass and with heat dissipation from the hot junction of the thermoelectric cooler.
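To estimate what cooling buys, a rule of thumb often quoted for silicon CCDs is that dark current roughly doubles for every 6-7 °C of temperature rise. The sketch takes a 6 °C doubling interval as an assumption; the actual figure depends on the device.

```python
def dark_current_ratio(t_from_c, t_to_c, doubling_interval_c=6.0):
    """Factor by which dark current changes between two matrix temperatures.

    Assumes dark current doubles every doubling_interval_c degrees (a rule of thumb).
    """
    return 2 ** ((t_to_c - t_from_c) / doubling_interval_c)


for t in (-20, -40):
    print(f"+20 C -> {t} C: dark current x {dark_current_ratio(20, t):.5f}")
```

Under that assumption, cooling from +20 °C to -40 °C reduces dark current roughly a thousandfold (2^-10), which is what makes exposures of minutes or tens of minutes practical.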

At the same time, technological progress in CCD production has also affected dark current. The achievements here are very significant, and the dark current of some good modern matrices is very small. In our experience, uncooled cameras allow exposures of tens of seconds at room temperature, and up to several minutes with dark background compensation. As an example, Fig. 1 shows the planetary nebula M57, obtained with the VS-a-tandem-56/2 video system without cooling, with an exposure of 20 s.
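Dark background compensation is typically done by subtracting a dark frame: an exposure of the same length and at the same temperature taken with the lens capped. A minimal sketch with made-up pixel values; averaging several dark frames first reduces the noise that the subtraction itself adds.

```python
def average_frames(frames):
    """Pixel-wise mean of several frames (each a list of rows)."""
    n = len(frames)
    return [[sum(px) / n for px in zip(*rows)] for rows in zip(*frames)]


def subtract_dark(light, dark):
    """Remove the accumulated dark signal; negative results are clipped to zero."""
    return [[max(l - d, 0.0) for l, d in zip(lrow, drow)] for lrow, drow in zip(light, dark)]


darks = [[[12, 30], [11, 10]], [[14, 34], [9, 10]]]   # two dark exposures (made-up values)
light = [[112, 40], [10, 210]]                         # exposure of the object
print(subtract_dark(light, average_frames(darks)))
```

Note the "hot" pixel in the top-right corner: its high dark signal is removed, which is what extends usable exposures from tens of seconds to minutes.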

The second way to increase sensitivity is to use image intensifiers (electron-optical converters, EOC), devices that amplify the luminous flux. Modern image intensifiers can have very high gain; however, without going into details, we can say that an image intensifier can only improve the threshold sensitivity of the camera, so its gain should not be made too large.

Spectral sensitivity of CCD cameras

Fig. 2. Spectral characteristics of various matrices.

For some applications, the spectral sensitivity of the CCD is an important factor. Since all CCDs are made of silicon, the spectral sensitivity of a “bare” CCD matches that of silicon (see Fig. 2).

As you can see, with all the variety of characteristics, CCD matrices have maximum sensitivity in the red and near-infrared (IR) range and see absolutely nothing in the blue-violet part of the spectrum. The near-IR sensitivity of CCDs is used in covert surveillance systems illuminated by IR light sources, as well as when measuring thermal fields of high-temperature objects.

Fig. 3. Typical spectral characteristics of SONY black-and-white matrices.

SONY produces all its black-and-white matrices with the spectral characteristics shown in Fig. 3. As the figure shows, the sensitivity of these CCDs in the near IR is significantly reduced, but the matrix has become sensitive to the blue region of the spectrum.

For various special purposes, matrices sensitive in the ultraviolet and even X-ray range are being developed. Usually these devices are unique and their price is quite high.

About progressive and interlaced scanning

The standard television signal was developed for broadcast television and, from the point of view of modern image input and processing systems, has one major drawback. Although the TV signal contains 625 lines (about 576 of which carry video information), it is displayed as two successive half-frames (fields): one consisting of the even lines (the even field) and one of the odd lines (the odd field). As a result, when a moving image is captured, the vertical (Y) resolution usable in analysis cannot exceed the number of lines in one field (288). In addition, when the image is displayed on a computer monitor (which uses progressive scan), the image from an interlaced camera shows an unpleasant doubling effect whenever the object moves.
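The split into half-frames can be illustrated with list slicing. This is only an indexing sketch: lines are numbered from 0 here, and which field is called "even" is a convention that varies between documents.

```python
def split_fields(frame):
    """Split an interlaced frame (list of lines, top line first) into its two half-frames."""
    return frame[0::2], frame[1::2]   # lines 0, 2, 4, ... and lines 1, 3, 5, ...


frame = [f"line {i}" for i in range(576)]   # the ~576 active lines of a 625-line frame
field_a, field_b = split_fields(frame)
print(len(field_a), len(field_b))
```

When the object moves, the two fields are exposed at different moments, so only one of them (288 lines) is a consistent snapshot; interleaving both produces the doubling described above.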

All methods to combat this shortcoming lead to a deterioration in vertical resolution. The only way to overcome this disadvantage and achieve resolution that matches the resolution of the CCD is to switch to progressive scanning in the CCD. CCD manufacturers produce such matrices, but due to the low production volume, the price of such matrices and cameras is much higher than that of conventional ones. For example, the price of a SONY matrix with progressive scan ICX074 is 3 times higher than ICX039 (interlace scan).

Other camera parameters

These include “blooming”, i.e. the spreading of charge over the surface of the matrix when individual elements are overexposed. In practice, this can occur, for example, when observing objects with glare. It is a rather unpleasant effect of CCDs, since a few bright spots can distort the entire image. Fortunately, many modern matrices contain anti-blooming structures. In the descriptions of some of the latest SONY matrices we found a value of 2000 for the permissible light overload of individual cells, i.e. the overload that does not yet cause charge to spread. This is a fairly high value, especially since, as our experience has shown, it can be achieved only with special adjustment of the drivers that directly control the matrix and of the video signal pre-amplification channel. The lens also contributes to the “spreading” of bright points: with such large light overloads, even slight scattering outside the main spot noticeably illuminates neighboring elements.
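The difference anti-blooming makes can be illustrated with a toy one-dimensional model, which is a simplification and not how any specific matrix is built: each cell holds at most full_well units of charge; without anti-blooming, the excess spreads equally up and down the column, filling neighbors in turn; with anti-blooming, it is drained off. The numbers are made up.

```python
def bloom_column(charges, full_well, anti_blooming=False):
    """Toy model of overflow along one CCD column (integer charges, made-up units)."""
    excesses = [max(c - full_well, 0) for c in charges]
    cells = [min(c, full_well) for c in charges]
    if anti_blooming:
        return cells                      # excess charge is drained away
    for i, excess in enumerate(excesses):
        # half of the excess spreads up the column, the rest spreads down
        for step, share in ((-1, excess // 2), (1, excess - excess // 2)):
            j = i + step
            while share > 0 and 0 <= j < len(cells):
                taken = min(full_well - cells[j], share)   # fill free capacity
                cells[j] += taken
                share -= taken
                j += step                 # what does not fit moves further along
            # charge that reaches either end of the column is lost
    return cells


column = [10, 10, 10, 500, 10, 10, 10]   # one cell overexposed by glare
print(bloom_column(column, full_well=100))
print(bloom_column(column, full_well=100, anti_blooming=True))
```

Here a single cell overloaded fivefold saturates four neighbors and brightens two more, while the anti-blooming version leaves the neighbors untouched.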

It is also necessary to note here that according to some data, which we have not verified ourselves, matrices with anti-blooming have a 2-fold lower quantum efficiency than matrices without anti-blooming. In this regard, in systems that require very high sensitivity, it may make sense to use matrices without anti-blooming (usually these are special tasks such as astronomical ones).

About color cameras

The material in this section goes somewhat beyond the scope we have set ourselves (measuring systems); however, color cameras are so widespread (even more than black-and-white ones) that we must clarify this issue, especially since Customers often try to use color television cameras with our black-and-white frame grabbers and are very surprised to find streaks in the resulting images and to see that the image resolution is insufficient. Let us explain what is going on here.

There are two ways to generate a color signal:

1. using a single-matrix camera;

2. using a system of three CCD matrices with a color-separation head, which obtains the R, G and B components of the color signal on these matrices.

The second way provides the best quality and is the only option suitable for measurement systems; however, cameras operating on this principle are quite expensive (more than $3000).

In most cases, single-chip CCD cameras are used. Let's look at their operating principle.

As the fairly wide spectral characteristics of the CCD matrix make clear, it cannot determine the “color” of a photon hitting its surface. Therefore, to capture a color image, a light filter is placed in front of each element of the CCD matrix, while the total number of matrix elements stays the same. SONY, for example, produces exactly the same CCD matrices in black-and-white and color versions; they differ only in the grid of light filters applied directly to the sensitive areas of the color matrix. There are several color filter arrangements. Here is one of them.

Here 4 different filters are used (see Fig. 4 and Fig. 5).

Figure 4. Distribution of filters on CCD matrix elements

Figure 5. Spectral sensitivity of CCD elements with various filters.

The luminance signal is obtained by summing the signals of adjacent elements:

Y=(Cy+G)+(Ye+Mg)

In line A1 the "red" color difference signal is obtained as:

R-Y=(Mg+Ye)-(G+Cy)

and in line A2 a “blue” color difference signal is obtained:

-(B-Y)=(G+Ye)-(Mg+Cy)

Since every output value is formed from several neighboring elements with different filters, the spatial resolution of a color CCD matrix is usually 1.3-1.5 times worse, horizontally and vertically, than that of the same black-and-white matrix. Because of the filters, the sensitivity of a color CCD is also lower than that of a black-and-white one. Thus, with a single-matrix sensor of 1000 * 800, you can actually get about 700 * 550 for the luminance signal and 500 * 400 (700 * 400 is possible) for the color signal.
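The sums and differences for lines A1 and A2 can be evaluated directly. To see what they contain, the sketch also models the complementary filters as ideal: Cy = G + B, Ye = R + G, Mg = R + B. This idealization is an assumption (the real curves in Fig. 5 overlap); under it the three signals reduce to Y = 2R + 3G + 2B, R - Y ~ 2R - G and -(B - Y) ~ G - 2B. A real camera also applies white-balance gains, which are not modeled here.

```python
def cmyg_signals(cy, ye, mg, g):
    """Luminance and color-difference signals from one 2x2 group of Cy, Ye, Mg, G cells,
    using the sums and differences given in the text."""
    y = (cy + g) + (ye + mg)
    r_minus_y = (mg + ye) - (g + cy)          # line A1
    minus_b_minus_y = (g + ye) - (mg + cy)    # line A2, i.e. -(B - Y)
    return y, r_minus_y, minus_b_minus_y


def ideal_filters(r, g, b):
    """Hypothetical ideal complementary filters: each passes two primaries."""
    return g + b, r + g, r + b, g   # Cy, Ye, Mg, G


for name, rgb in (("red", (1, 0, 0)), ("green", (0, 1, 0)), ("blue", (0, 0, 1))):
    print(name, cmyg_signals(*ideal_filters(*rgb)))
```

A pure red input shows up only in the R - Y signal and a pure blue one only in B - Y, as intended; but each value needs four neighboring cells, which is why the color resolution is lower than the cell count suggests.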

Leaving aside technical issues, I would like to note that, for advertising purposes, many manufacturers of electronic cameras report completely incomprehensible data about their equipment. For example, Kodak states the resolution of its DC120 electronic camera as 1200*1000 with a matrix of 850x984 pixels. But, gentlemen, information does not appear out of nowhere, even if the result looks good!

The spatial resolution of a color signal (a signal that carries information about the color of the image) can be said to be at least 2 times worse than the resolution of a black-and-white signal. In addition, the “calculated” color of the output pixel is not the color of the corresponding element of the source image, but only the result of processing the brightness of various elements of the source image. Roughly speaking, due to the sharp difference in brightness of neighboring elements of an object, a color that is not there at all can be calculated, while a slight camera shift will lead to a sharp change in the output color. For example: the border of a dark and light gray field will look like it consists of multi-colored squares.

All these considerations relate only to the physical principle of obtaining information on color CCD matrices, while it must be taken into account that usually the video signal at the output of color cameras is presented in one of the standard formats PAL, NTSC, or less often S-video.

The PAL and NTSC formats are convenient because they can be displayed directly on standard monitors with a video input, but remember that these standards provide a much narrower band for the color signal, so it is more correct to speak of a colored image rather than a color one. Another unpleasant feature of cameras whose video signal carries a color component is the appearance of the above-mentioned streaks in images captured by black-and-white frame grabbers. The reason is that the chrominance signal lies almost in the middle of the video signal band and creates interference when a frame is captured. We do not see this interference on a television monitor because the phase of this “interference” is reversed after four frames and is averaged by the eye. Hence the bewilderment of the Customer, who receives an image with interference that he does not see on the monitor.

It follows from this that if you need to carry out some measurements or decipher objects by color, then this issue must be approached taking into account both the above and other features of your task.

About CMOS matrices

In the world of electronics, everything changes very quickly, and although photodetectors are one of the most conservative fields, new technologies have recently begun to arrive here too. First of all, this concerns the emergence of CMOS television matrices.

Indeed, silicon is light-sensitive, so in principle any silicon semiconductor device can serve as a sensor. CMOS technology offers several obvious advantages over the traditional technology.

Firstly, CMOS technology is well mastered and allows the production of elements with a high yield of useful products.

Secondly, CMOS technology makes it possible to place on the matrix, in addition to the photosensitive area, various support circuits (up to and including the ADC) that previously had to be installed “outside”. This makes it possible to produce cameras with digital output “on a single chip”.

Thanks to these advantages, it becomes possible to produce significantly cheaper television cameras. In addition, the range of companies producing matrices is expanding significantly.

At the moment, the production of television matrices and cameras using CMOS technology is just getting started, and information about the parameters of such devices is very scarce. We can only note that their parameters do not exceed what has already been achieved, while their price advantage is undeniable.

As an example, consider the Photobit PB-159 single-chip color camera. The camera is built on a single chip and has the following technical parameters:

- resolution - 512*384;

- pixel size - 7.9µm*7.9µm;

- sensitivity - 1 lux;

- output - digital 8-bit SRGB;

- package - 44-pin PLCC.

Thus, this camera is four times less sensitive (1 lux versus the 0.25 lux of the SONY-based CCD cameras discussed above); in addition, information on another camera suggests that this technology has problems with relatively large dark current.

About digital cameras

Recently, a new, rapidly growing market segment that uses CCD and CMOS matrices has appeared: digital cameras. Moreover, the quality of these products is now rising sharply while their prices are falling just as sharply. Indeed, just 2 years ago a matrix with a resolution of 1024*1024 alone cost about $3000-7000, whereas now cameras with such matrices and plenty of extras (LCD screen, memory, zoom lens, convenient body, etc.) can be bought for less than $1000. This can only be explained by the transition to large-scale production of matrices.

Since these cameras are based on CCD and CMOS matrices, all the discussions in this article about sensitivity and the principles of color signal formation are valid for them.

Instead of a conclusion

The practical experience we have accumulated allows us to draw the following conclusions:

- the production technology of CCD matrices is very close to the physical limits in terms of sensitivity and noise;

- cameras of acceptable quality can be found on the television camera market, although adjustment may be required to achieve better parameters;

- do not be fooled by the high sensitivity figures given in camera brochures;

- and yet, prices for cameras of absolutely identical quality, and even for identical cameras from different sellers, can differ by more than a factor of two!

Vendors now offer a huge selection of video surveillance cameras. The models differ not only in the parameters common to all cameras - focal length, viewing angle, light sensitivity, etc. - but also in various proprietary features that each manufacturer strives to equip their devices with.

Therefore, the short specification of a video surveillance camera is often a frightening list of incomprehensible terms, for example: 1/2.8" 2.4MP CMOS, 25/30fps, OSD Menu, DWDR, ICR, AWB, AGC, BLC, 3DNR, Smart IR, IP67, 0.05 Lux, and that's not all.

In the previous article, we focused on video standards and the classification of cameras based on them. Today we will look at the main characteristics of video surveillance cameras and decipher the abbreviations for special technologies used to improve the quality of the video signal:

- Focal length and viewing angle

- Aperture (F number) or lens aperture

- Adjusting the iris (auto iris)

- Electronic shutter (AES, exposure time, shutter speed)

- Sensitivity (light sensitivity, minimum illumination)

- Protection classes IK (vandal resistance) and IP (moisture and dust protection)

Matrix type (CCD, CMOS)

There are two types of CCTV camera matrices: CCD and CMOS. They differ in both structure and principle of operation.

| CCD | CMOS |
|---|---|
| Sequential readout of all matrix cells | Random-access readout of matrix cells, which reduces the risk of smear (vertical streaks from point light sources such as lamps and street lights) |
| Low noise level | Higher noise level due to so-called dark currents |
| High dynamic sensitivity (better suited for shooting moving objects) | “Rolling shutter” effect: when shooting fast-moving objects, horizontal stripes and image distortion may occur |
| The chip carries only the photosensitive elements; the remaining circuits must be placed separately, which increases the size and cost of the camera | All circuits can be placed on a single chip, making CMOS cameras simple and inexpensive to produce |
| Since the matrix area is used only for photosensitive elements, its utilization approaches 100% | Low power consumption (almost 100 times lower than CCD matrices) |
| Expensive and complex production | High speed |

For a long time it was believed that CCD matrices produce much higher-quality images than CMOS. However, modern CMOS matrices are often practically on par with CCDs, especially if the requirements for the video surveillance system are not too high.

Matrix size

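Indicates the nominal diagonal size of the matrix in inches, written as a fraction: 1/3", 1/2", 1/4", etc. This is a conventional "optical format" designation, so the actual diagonal is noticeably smaller than the fraction suggests (a 1/3" matrix is about 6 mm across the diagonal, not 8.5 mm).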

It is generally believed that the larger the matrix size, the better: less noise, clearer picture, larger viewing angle. However, in fact, the best image quality is provided not by the size of the matrix, but by the size of its individual cell or pixel - the larger it is, the better. Therefore, when choosing a video surveillance camera, you need to consider the matrix size along with the number of pixels.

If 1/3" and 1/4" matrices have the same number of pixels, the 1/3" matrix will naturally give a better image. But if the 1/3" matrix has more pixels, you need to pick up a calculator and estimate the approximate pixel size.

For example, the cell-size calculations below show that in many cases the pixel on a 1/4" matrix turns out to be larger than on a 1/3" matrix, which means that the image from the 1/4" matrix, although the matrix itself is smaller, will be better.

| Matrix size | Number of pixels (millions) | Cell size (µm) |
|---|---|---|
| 1/6" | 0.8 | 2.30 |
| 1/3" | 3.1 | 2.35 |
| 1/3.4" | 2.2 | 2.30 |
| 1/3.6" | 2.1 | 2.40 |
| 1/3.4" | 2.23 | 2.45 |
| 1/4" | 1.55 | 2.50 |
| 1/4.7" | 1.07 | 2.50 |
| 1/4" | 1.33 | 2.70 |
| 1/4" | 1.2 | 2.80 |
| 1/6" | 0.54 | 2.84 |
| 1/3.6" | 1.33 | 3.00 |
| 1/3.8" | 1.02 | 3.30 |
| 1/4" | 0.8 | 3.50 |
| 1/4" | 0.45 | 4.60 |
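The calculator step mentioned above can be sketched in Python. This is a rough estimate under stated assumptions, not a manufacturer's formula: it assumes that for small formats the nominal "inch" corresponds to about 18 mm of diagonal (so 1/3" ≈ 6 mm and 1/4" ≈ 4.5 mm), that the active area has a 4:3 aspect ratio unless told otherwise, and that square pixels cover the whole active area. The function name `approx_pixel_pitch_um` is hypothetical.

```python
import math


def approx_pixel_pitch_um(format_denominator, megapixels, aspect=(4, 3), mm_per_inch=18.0):
    """Rough pixel pitch (micrometers) of a 1/N" sensor with the given pixel count.

    Assumptions: the diagonal is about mm_per_inch / N (the ~18 mm convention
    for small optical formats), the active area has the given aspect ratio,
    and square pixels cover the whole active area.
    """
    diagonal_mm = mm_per_inch / format_denominator
    a, b = aspect
    # For a rectangle with sides in the ratio a:b, area = d^2 * a*b / (a^2 + b^2).
    area_mm2 = diagonal_mm ** 2 * a * b / (a * a + b * b)
    pitch_mm = math.sqrt(area_mm2 / (megapixels * 1e6))
    return pitch_mm * 1000.0


if __name__ == "__main__":
    for n, mp in [(3, 3.1), (4, 1.55), (4, 0.8)]:
        print(f'1/{n}" matrix, {mp} MP -> about {approx_pixel_pitch_um(n, mp):.2f} um')
```

Under these assumptions the three examples come out at about 2.36, 2.50 and 3.49 µm, within a few hundredths of a micrometer of the matching table rows. Rows that do not fit as well may use a different aspect ratio (for example 16:9), which can be passed via the `aspect` parameter.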

Focal length and viewing angle

These parameters are of great importance when choosing a video surveillance camera, and they are closely related. Strictly speaking, the focal length of a lens (often denoted f) is the distance from the lens to the sensor at which a distant object is in sharp focus.

In practice, the focal length determines the camera's viewing angle and range:

- the shorter the focal length, the wider the viewing angle and the less detail can be seen on distant objects;

- the longer the focal length, the narrower the viewing angle and the more detailed the image of distant objects.
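The trade-off in the list above follows from similar triangles in the simple pinhole model: the width of the scene covered at distance D is roughly D × (sensor width) / f. A minimal Python sketch, assuming a 1/3" sensor about 4.8 mm wide and a 1920-pixel-wide image (both illustrative values, not taken from the article), shows how many pixels land on each meter of the scene; the helper names are hypothetical.

```python
def scene_width_m(focal_mm, sensor_width_mm, distance_m):
    """Approximate width of the area covered at a given distance (pinhole model, no distortion)."""
    return distance_m * sensor_width_mm / focal_mm


def pixels_per_meter(h_pixels, focal_mm, sensor_width_mm, distance_m):
    """Horizontal pixel density on an object at the given distance."""
    return h_pixels / scene_width_m(focal_mm, sensor_width_mm, distance_m)


if __name__ == "__main__":
    SENSOR_WIDTH_MM = 4.8  # assumed width of a 4:3 1/3" sensor
    H_PIXELS = 1920        # assumed horizontal resolution
    for f in (2.8, 3.6, 12.0):
        w = scene_width_m(f, SENSOR_WIDTH_MM, 10.0)
        ppm = pixels_per_meter(H_PIXELS, f, SENSOR_WIDTH_MM, 10.0)
        print(f"f = {f} mm: about {w:.1f} m wide at 10 m, {ppm:.0f} px per meter")
```

With these assumptions, at 10 m a 3.6 mm lens covers about 13.3 m of width (about 144 pixels per meter), while a 12 mm lens covers about 4 m (480 pixels per meter). This is why long focal lengths are chosen where banknotes or license plates must be readable.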

If you need a general overview of a certain area, and you want to use as few cameras as possible for this, buy a camera with a short focal length and, accordingly, a wide viewing angle.

But in those areas where detailed observation of a relatively small area is required, it is better to install a camera with an increased focal length, pointing it at the object of observation. This is often used at the checkout counters of supermarkets and banks, where you need to see the denomination of banknotes and other payment details, as well as at the entrance to parking lots and other areas where it is necessary to distinguish a license plate number over a long distance.

The most common focal length is 3.6 mm. It roughly corresponds to the viewing angle of the human eye. Cameras with this focal length are used for video surveillance in small spaces.

The table below shows the approximate relationship between focal length, viewing angle, recognition distance, etc. for the most common focal lengths. The figures are approximate, since they depend not only on the focal length but also on other parameters of the camera optics.

Depending on the width of the viewing angle, video surveillance cameras are usually divided into:

- conventional (viewing angle 30°-70°);

- wide-angle (viewing angle from approximately 70°);

- long-focus (viewing angle less than 30°).
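For a rough idea of the numbers, the horizontal viewing angle can be estimated from the focal length with the pinhole approximation, angle = 2·atan(w / 2f), where w is the sensor width, and then sorted into the three categories above. This sketch ignores lens distortion (which is strong in wide-angle lenses), and the 4.8 mm width for a 1/3" matrix is an assumed nominal value.

```python
import math


def horizontal_fov_deg(focal_mm, sensor_width_mm):
    """Pinhole-model horizontal viewing angle in degrees (ignores lens distortion)."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


def classify(angle_deg):
    """Categories from the article: long-focus < 30°, conventional 30-70°, wide-angle > 70°."""
    if angle_deg < 30:
        return "long-focus"
    if angle_deg <= 70:
        return "conventional"
    return "wide-angle"


if __name__ == "__main__":
    SENSOR_WIDTH_MM = 4.8  # assumed nominal width of a 1/3" (4:3) matrix
    for f in (2.8, 3.6, 6.0, 12.0):
        angle = horizontal_fov_deg(f, SENSOR_WIDTH_MM)
        print(f"{f:>4} mm -> {angle:5.1f} deg, {classify(angle)}")
```

Under these assumptions, 3.6 mm gives roughly 67° (the wide end of the conventional range), 2.8 mm about 81° (wide-angle) and 12 mm about 23° (long-focus).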

The letter F, usually capitalized, also denotes the lens aperture, so when reading the specifications, pay attention to the context in which the parameter is used.

Lens type

Fixed (monofocal) lens: the simplest and least expensive type. The focal length is fixed and cannot be changed.

In varifocal lenses, the focal length can be changed. It is set manually, usually once when the camera is installed, and then adjusted as needed.

Zoom lenses also allow the focal length to be changed, but remotely and at any time. The focal length is changed by an electric drive, which is why they are also called motorized lenses.

A fisheye (panoramic) lens lets you install just one camera and still get a 360° view.

Of course, the resulting image has a "bubble" effect: straight lines are curved. But in most cases, cameras with such lenses can split one overall panoramic image into several separate views, corrected to look natural to the human eye.

Pinhole lenses allow covert video surveillance thanks to their miniature size: from the outside, such a camera shows only a tiny hole instead of a visible lens. In Ukraine, the use of covert video surveillance is seriously restricted, as is the sale of devices for it.

These are the most common lens types. But if we go deeper, lenses are also divided according to other parameters:

Aperture (F number) or lens aperture

Determines the camera's ability to capture high-quality images in low light. The larger the F-number, the smaller the aperture opening and the more light the camera needs. The smaller the F-number, the wider the aperture, so the camera can produce clear images even in low light.

The letter f (usually lowercase) also denotes the focal length, so when reading the characteristics, pay attention to the context in which the parameter is used. For example, in the picture above, the aperture is indicated by a small f.
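To put numbers on this: with everything else equal, the light reaching the sensor is inversely proportional to the square of the F-number, and each doubling of light is one "stop". A small Python sketch (the helper names are hypothetical):

```python
import math


def light_ratio(f_number_a, f_number_b):
    """How many times more light reaches the sensor at f_number_a than at f_number_b.

    Illuminance on the sensor scales as 1 / N^2 (same scene, same exposure time).
    """
    return (f_number_b / f_number_a) ** 2


def stops_between(f_number_a, f_number_b):
    """Difference in stops (factors of two in light) between two F-numbers."""
    return 2 * math.log2(f_number_b / f_number_a)


if __name__ == "__main__":
    print(f"F1.2 vs F2.0: {light_ratio(1.2, 2.0):.2f}x more light, "
          f"{stops_between(1.2, 2.0):.2f} stops")
```

For example, an F1.2 lens lets in about 2.8 times as much light as an F2.0 lens, roughly one and a half stops.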

Lens mount

There are 3 types of mounts for attaching a lens to a video camera: C, CS, M12.

- The C mount is rarely used anymore. C lenses can be mounted on a CS mount camera using a special ring.

- The CS mount is the most common type. CS lenses are not compatible with C cameras.

- The M12 mount is used for small lenses.
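The one-way compatibility between C and CS follows from the flange focal distance, i.e. the distance from the mount face to the sensor: about 17.526 mm for C and 12.526 mm for CS. A C lens on a CS camera would sit 5 mm too close to the sensor, which the spacer ring corrects; a CS lens on a C camera would need to sit closer than the mount allows, so it cannot focus. A minimal check with hypothetical names; M12 lenses are focused by screwing them in their thread, so they are left out of this sketch.

```python
FLANGE_MM = {"C": 17.526, "CS": 12.526}  # flange focal distances of the two mounts


def spacer_needed_mm(lens_mount, camera_mount):
    """Spacer thickness needed to fit a lens on a camera, or None if it cannot focus.

    The lens must sit at least as far from the sensor as it was designed for;
    extra distance can be added with a spacer ring, missing distance cannot.
    """
    gap = FLANGE_MM[lens_mount] - FLANGE_MM[camera_mount]
    if gap < 0:
        return None  # the lens would have to sit closer than the camera mount allows
    return round(gap, 3)


if __name__ == "__main__":
    for lens, cam in [("C", "CS"), ("CS", "C"), ("CS", "CS")]:
        print(f"{lens} lens on {cam} camera: spacer = {spacer_needed_mm(lens, cam)}")
```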

Iris adjustment (auto iris, ARD)

The diaphragm is responsible for the flow of light onto the matrix: with an increased flow of light, it narrows, thus preventing the image from being overexposed, and in low light, on the contrary, it opens so that more light falls on the matrix.

There are two large groups of cameras: with a fixed aperture (this also includes cameras with no iris at all) and with an adjustable one.

The aperture can be adjusted in various models of video surveillance cameras:

- Manually.

- Automatically by the video camera, which drives the iris with a direct-current signal based on the amount of light hitting the sensor. This type of automatic iris adjustment (ARD) is designated DD (Direct Drive) or DD/DC.

- Automatically by a special module built into the lens, which tracks the light flux passing through the aperture. In camera specifications this type of ARD is designated VD (Video Drive). It remains effective even when direct sunlight hits the lens, but surveillance cameras with it are more expensive.
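The behavior described in this section (close down in bright light, open up in the dark) is a feedback loop. The sketch below is a deliberately simplified, hypothetical model, not how any particular DD or VD lens works internally: image brightness is taken as iris opening × scene light, clipped at sensor saturation, and on each step the opening is corrected multiplicatively toward a target brightness.

```python
def simulate_auto_iris(scene_light, steps=30, target=0.5, gain=0.5):
    """Hypothetical auto-iris loop; returns (final opening, final brightness).

    opening: relative iris opening, 0.001 (almost closed) .. 1.0 (fully open)
    brightness: normalized sensor level, clipped at 1.0 (saturation)
    """
    opening = 1.0
    for _ in range(steps):
        level = min(1.0, opening * scene_light)
        level = max(level, 1e-6)             # avoid division by zero in total darkness
        opening *= (target / level) ** gain  # too bright -> close, too dark -> open
        opening = min(1.0, max(0.001, opening))
    return opening, min(1.0, opening * scene_light)


if __name__ == "__main__":
    for light in (5.0, 0.8, 0.2):  # bright, moderate and dim scenes (arbitrary units)
        opening, level = simulate_auto_iris(light)
        print(f"scene {light}: opening {opening:.3f}, brightness {level:.2f}")
```

In the bright scene the iris closes until the brightness settles at the target; in the dim one it opens fully and stays there, and the image remains darker than desired.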

Electronic shutter (AES, exposure time, shutter speed)

Different manufacturers may call this parameter automatic electronic shutter, exposure time or shutter speed, but it means the same thing: the time during which light acts on the sensor. It is usually given as a range, for example 1/50 to 1/100,000 s.

The electronic shutter works somewhat like automatic iris adjustment: it adapts the effective sensitivity of the matrix to the light level of the room. The figure below shows image quality in low light at different shutter speeds (the picture shows manual adjustment, while AES does the same automatically).

Unlike ARD, it works not by regulating the light flux reaching the matrix, but by regulating the exposure time, i.e. how long electrical charge accumulates on the matrix.

However, the capabilities of the electronic shutter are much weaker than those of automatic iris adjustment. Therefore, in open spaces where lighting varies from twilight to bright sunlight, it is better to use cameras with ARD. Cameras with an electronic shutter are best suited to rooms where the light level changes little over time.
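Why the electronic shutter has a narrower working range can be seen in a simplified model: the accumulated charge is proportional to exposure time, so AES can only scale the exposure within the shutter's limits (here the 1/50 to 1/100,000 s range quoted above). This is a hypothetical one-step sketch; real cameras combine it with gain and, where available, iris control.

```python
MIN_EXPOSURE_S = 1 / 100_000  # fastest shutter in the range quoted above
MAX_EXPOSURE_S = 1 / 50       # slowest shutter in the range quoted above


def aes_step(exposure_s, measured_level, target_level=0.5):
    """Hypothetical AES step: charge ~ exposure time, so scale the exposure toward the target.

    measured_level is the normalized image brightness (0..1) at the current exposure.
    """
    if measured_level <= 0:
        return MAX_EXPOSURE_S
    proposed = exposure_s * target_level / measured_level
    # The shutter cannot go beyond its limits: past them the image stays too dark or too bright.
    return min(MAX_EXPOSURE_S, max(MIN_EXPOSURE_S, proposed))


if __name__ == "__main__":
    print(aes_step(1 / 1000, 1.0))  # bright room: halve the exposure
    print(aes_step(1 / 50, 0.1))    # dark room: already at the slowest shutter
```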

Electronic shutter specifications differ little between models. A useful feature is the ability to set the exposure time manually, since in low light the camera automatically selects long exposures (slow shutter speeds), which blurs moving objects.
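How much a slow shutter blurs a moving object is simple arithmetic: the smear length in pixels is the object's speed × exposure time × pixels per meter at the object's distance. A sketch with illustrative numbers (a car at 36 km/h, i.e. 10 m/s, and 100 pixels per meter; both assumed):

```python
def motion_blur_px(speed_m_s, exposure_s, pixels_per_meter):
    """Length of the smear, in pixels, left by an object moving across the frame during one exposure."""
    return speed_m_s * exposure_s * pixels_per_meter


if __name__ == "__main__":
    for exposure in (1 / 50, 1 / 250, 1 / 1000):
        blur = motion_blur_px(10.0, exposure, 100.0)  # car at 36 km/h, 100 px per meter (assumed)
        print(f"exposure 1/{round(1 / exposure)} s -> blur of about {blur:.0f} px")
```

At 1/50 s the car smears over about 20 pixels, at 1/1000 s over about 1 pixel, which is why manual control of the shutter speed matters for moving objects.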

Sens-UP (or DSS)

This function accumulates charge on the matrix depending on the illumination level, i.e. it increases sensitivity at the expense of speed. It is needed to obtain good images in low light when tracking fast events is not critical (there are no fast-moving objects in the scene).

It is closely related to the exposure time (shutter speed) described above. But while the shutter speed is expressed in units of time, Sens-UP is expressed as a multiplication factor (xN): the charge accumulation time (exposure) increases N times.
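In numbers: with Sens-UP set to xN, the accumulation time becomes N times the base exposure, the collected light grows N times (log2 N stops), and the picture can be refreshed at most once per accumulation period. A sketch assuming a 1/50 s base exposure; the function name is hypothetical, and real cameras may limit N or handle the refresh differently.

```python
import math

BASE_EXPOSURE_S = 1 / 50  # assumed normal exposure without Sens-UP


def sens_up(factor):
    """Return (accumulation time in s, light gain in stops, max picture updates per second) for xN."""
    exposure = BASE_EXPOSURE_S * factor
    return exposure, math.log2(factor), 1 / exposure


if __name__ == "__main__":
    for n in (2, 8, 32):
        t, stops, updates = sens_up(n)
        print(f"x{n}: accumulate {t:.2f} s, +{stops:.0f} stops, <= {updates:.2f} updates/s")
```

At x32, for example, the camera gathers 32 times more light but the picture changes only about 1.6 times per second, which is why this mode suits scenes without fast movement.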

Resolution

We touched on the topic of CCTV camera resolutions a little in the last article. Camera resolution is, in fact, the size of the resulting image. It is measured either in TVL (television lines) or in pixels. The higher the resolution, the more detail you will be able to see in the video.

Resolution in TVL is the number of vertical lines (brightness transitions) that can be distinguished horizontally across the picture. It is considered more accurate because it gives an idea of the detail in the output image. The resolution in megapixels stated in the manufacturer's documentation, by contrast, can mislead the buyer: it often refers not to the size of the final image but to the number of pixels on the matrix. In this case, pay attention to the parameter "Effective number of pixels".

Resolution in pixels is the horizontal and vertical size of the picture (if specified as 1280x960) or the total number of pixels in it (if specified as 1 MP (megapixel), 2 MP, etc.). The resolution in megapixels is easy to obtain: multiply the number of horizontal pixels (1280) by the number of vertical pixels (960) and divide by 1,000,000. In total, 1280 × 960 = 1,228,800 pixels ≈ 1.23 MP.

How do you convert TVL to pixels and vice versa? There is no exact conversion formula. To determine video resolution in TVL, you need special test charts for video cameras. For an approximate idea of the ratio, you can use the table:


Effective pixels

As we said above, often the size in megapixels indicated in the characteristics of video cameras does not give an accurate idea of the resolution of the resulting image. The manufacturer indicates the number of pixels on the camera matrix (sensor), but not all of them are involved in creating the picture.

Therefore, the parameter "Number of effective pixels" was introduced, which shows exactly how many pixels form the final image. Most often it corresponds to the actual resolution of the resulting image, although there are exceptions.

IR (infrared) illumination

Allows shooting at night. The matrix (sensor) of a video surveillance camera can do more than the human eye; for example, it can "see" infrared radiation. This property is used for shooting at night and in unlit or dimly lit rooms. When the illumination drops below a certain minimum, the camera switches to infrared shooting mode and turns on its infrared illumination (IR).

IR LEDs are built into the camera in such a way that the light from them does not fall into the camera lens, but illuminates its viewing angle.

The image obtained in low light with infrared illumination is always black and white. Color cameras that support night shooting also switch to black-and-white mode.

The range of IR illumination is usually given in meters, i.e. how far from the camera the illumination still allows a clear image. A long-range IR light source is called an IR illuminator.

What is Smart IR?

Smart IR illumination (Smart IR) allows you to increase or decrease the power of infrared radiation depending on the distance to the object. This is done to ensure that objects that are close to the camera are not overexposed in the video.
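One simple way to picture this (a simplified model, not any vendor's actual algorithm): the IR light reaching an object falls off roughly with the square of the distance, so keeping a close object as bright as one at maximum range needs only (d / d_max)² of full power. A hypothetical sketch with an arbitrary lower limit on power:

```python
def smart_ir_power(distance_m, max_range_m, min_fraction=0.05):
    """Fraction of full IR power (0..1) that keeps an object at distance_m as bright as one at max_range_m.

    Assumes inverse-square fall-off of IR irradiance; min_fraction is an arbitrary floor.
    """
    fraction = (distance_m / max_range_m) ** 2
    return min(1.0, max(min_fraction, fraction))


if __name__ == "__main__":
    for d in (2, 10, 20, 30, 40):
        print(f"object at {d} m -> {smart_ir_power(d, 30):.0%} of full IR power")
```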

IR filter (ICR), day/night mode

Using infrared illumination for night shooting has one peculiarity: the matrices of such cameras are made with increased sensitivity to the infrared range. This creates a problem in the daytime, since the matrix also registers infrared light, which distorts the colors of the image.

Therefore, such cameras operate in two modes, day and night. During the day, the matrix is covered by a mechanical infrared-cut filter (ICR), which blocks infrared radiation. At night, the filter moves aside, letting infrared light reach the matrix freely.
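The switch between the two modes can be sketched as a small state machine. The thresholds below are hypothetical, and the gap between them (hysteresis) is an assumed design choice that keeps the filter from clicking back and forth when the light level hovers around a single threshold.

```python
NIGHT_BELOW_LUX = 2.0  # hypothetical: switch to night mode below this level
DAY_ABOVE_LUX = 6.0    # hypothetical: return to day mode only above this level


def next_mode(mode, lux):
    """Return "day" or "night" for the next moment, given the current mode and measured illumination."""
    if mode == "day" and lux < NIGHT_BELOW_LUX:
        return "night"  # move the ICR filter aside, turn on IR, switch to black and white
    if mode == "night" and lux > DAY_ABOVE_LUX:
        return "day"    # put the ICR filter back over the matrix, IR off, color on
    return mode


if __name__ == "__main__":
    mode = "day"
    for lux in (50, 5, 1.5, 3, 5, 8, 4):
        mode = next_mode(mode, lux)
        print(f"{lux:>5} lux -> {mode}")
```

Between 2 and 6 lux the camera simply keeps whichever mode it is already in.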

Sometimes switching the day/night mode is implemented in software, but this solution produces lower-quality images.

The ICR filter can also be installed in cameras without infrared illumination - to cut off the infrared spectrum in the daytime and improve video color rendition.

If your camera has no ICR filter because it was not originally designed for night shooting, you cannot add night shooting simply by buying a separate IR module; in this case, the colors of daytime video would be significantly distorted.

Sensitivity (light sensitivity, minimum illumination)

Unlike photo cameras, where light sensitivity is expressed as an ISO value, the light sensitivity of video surveillance cameras is usually expressed in lux and means the minimum illumination at which the camera can still produce a good-quality video image: clear and noise-free. The lower the value, the higher the sensitivity.

Video surveillance cameras are chosen according to the conditions in which they will be used: for example, if the camera's minimum illumination is 1 lux, it will not be possible to get a clear image at night without additional infrared illumination.

| Conditions | Light level |
|---|---|
| Outdoors, natural light on a cloudless sunny day | over 100,000 lux |
| Outdoors, natural light on a sunny day with light clouds | 70,000 lux |
| Outdoors, natural light in cloudy weather | 20,000 lux |
| Shops, supermarkets | 750-1,500 lux |
| Office or store | 50-500 lux |
| Hotel halls | 100-200 lux |
| Vehicle parking, warehouses | 30-75 lux |
| Twilight | 4 lux |
| Well-lit highway at night | 10 lux |
| Theater audience seating | 3-5 lux |
| Hospital at night, deep twilight | 1 lux |
| Full moon | 0.1-0.3 lux |
| Moonlit night (quarter moon) | 0.05 lux |
| Clear moonless night | 0.001 lux |
| Cloudy moonless night | 0.0001 lux |

The signal-to-noise ratio (S/N) determines the quality of the video signal. Noise becomes most visible in poor lighting and appears as colored or black-and-white "snow" or grain.

The parameter is measured in decibels. The picture below shows quite good image quality already at 30 dB, but for high-quality video from modern cameras, S/N should be at least 40 dB.
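For video signals, S/N in decibels is usually calculated from amplitude (voltage) ratios: S/N = 20·log10(signal / noise). Under that convention, the figures above translate into plain ratios as follows (helper names are hypothetical):

```python
import math


def snr_db(signal, noise):
    """Signal-to-noise ratio in dB for amplitude (voltage) quantities."""
    return 20 * math.log10(signal / noise)


def amplitude_ratio(db):
    """Inverse: how many times the signal amplitude exceeds the noise amplitude."""
    return 10 ** (db / 20)


if __name__ == "__main__":
    for db in (30, 40, 50):
        print(f"{db} dB -> signal is about {amplitude_ratio(db):.0f} times the noise")
```

So 40 dB means the signal is 100 times stronger than the noise, and each extra 10 dB reduces the relative noise by a factor of about 3.2.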

DNR Noise Reduction (3D-DNR, 2D-DNR)

Naturally, manufacturers did not ignore the problem of noise in video. At the moment, there are two technologies for reducing picture noise and thus improving the image:

- 2D-DNR. The older and simpler technology. It filters noise within each individual frame by comparing neighboring pixels; as a side effect, the image can become slightly blurred.

- 3D-DNR. The newer technology, which uses a more complex algorithm: in addition to filtering within a frame, it compares consecutive frames, so it removes snow and grain more effectively, including in the distant background.
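The difference between the two approaches can be illustrated with a deliberately simplified, one-dimensional sketch: spatial filtering (the idea behind 2D-DNR) averages each pixel with its neighbors in the same frame, while temporal filtering (which 3D-DNR adds) averages the same pixel over consecutive frames. Real 3D-DNR also detects motion so that moving objects are not smeared; that part is omitted here, and the scene is assumed to be static.

```python
import random
import statistics


def spatial_filter(row):
    """2D-DNR idea (1-D here): replace each pixel by the mean of itself and its neighbors."""
    out = []
    for i in range(len(row)):
        window = row[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out


def temporal_filter(frames, alpha=0.2):
    """3D-DNR idea: recursively average each pixel with its values in previous frames."""
    state = list(frames[0])
    for frame in frames[1:]:
        state = [(1 - alpha) * s + alpha * p for s, p in zip(state, frame)]
    return state


def residual_noise(row, truth, n=45):
    """Standard deviation of the error on the flat part of the scene."""
    return statistics.pstdev([r - t for r, t in zip(row[:n], truth[:n])])


if __name__ == "__main__":
    random.seed(0)
    truth = [100.0] * 50 + [200.0] * 50  # static scene with one sharp edge
    frames = [[v + random.gauss(0, 10) for v in truth] for _ in range(60)]
    print("raw     ", round(residual_noise(frames[-1], truth), 1))
    print("spatial ", round(residual_noise(spatial_filter(frames[-1]), truth), 1))
    print("temporal", round(residual_noise(temporal_filter(frames), truth), 1))
    # The spatial filter also softens the edge: [100, 133, 167, 200]
    print("edge after spatial filter:", [round(v) for v in spatial_filter(truth)[48:52]])
```

On a static scene the temporal filter removes more noise than the spatial one and, unlike it, does not soften the edge.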

Frame rate, fps (stream rate)

The frame rate affects the smoothness of the video image - the higher it is, the better. To achieve a smooth picture, a frequency of at least 16-17 frames per second is required. The PAL and SECAM standards support frame rates at 25 fps, and the NTSC standard supports 30 fps. For professional cameras, frame rates can reach up to 120 fps and higher.

However, keep in mind that the higher the frame rate, the more storage space the video requires and the heavier the load on the transmission channel.
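A back-of-the-envelope estimate makes the trade-off concrete. The sketch assumes, purely for illustration, that each compressed frame averages a fixed 50 KB; with real codecs the size per frame varies with the scene and settings, so treat the results as rough orders of magnitude.

```python
def storage_gb(fps, avg_frame_kb, hours):
    """Storage in GB (1 GB = 10^6 KB) for one camera recording continuously."""
    return fps * avg_frame_kb * hours * 3600 / 1e6


def bitrate_mbps(fps, avg_frame_kb):
    """Average stream bitrate in Mbit/s that the transmission channel must carry."""
    return fps * avg_frame_kb * 8 / 1000


if __name__ == "__main__":
    for fps in (12, 25, 30):
        print(f"{fps} fps: {bitrate_mbps(fps, 50):.1f} Mbit/s, "
              f"{storage_gb(fps, 50, 24):.0f} GB per day")
```

Under this assumption, 25 fps needs about 10 Mbit/s and about 108 GB per day for a single camera, and both figures scale linearly with the frame rate.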

Light compensation (HLC, BLC, WDR, DWDR)

Common video surveillance problems are:

- individual bright objects falling into the frame (headlights, lamps, lanterns), which illuminate part of the image, and because of which it is impossible to see important details;

- too bright lighting in the background (sunny street behind the doors of the room or outside the window, etc.), against which nearby objects appear too dark.

To solve them, there are several functions (technologies) used in surveillance cameras.

HLC (highlight compensation): compensation for bright light sources such as headlights and lamps, so that the details around them remain visible.

BLC (backlight compensation) works by increasing the exposure of the whole image: objects in the foreground become lighter, but the background becomes too bright to see details.

WDR (sometimes also called HDR): wide dynamic range. It is also used for backlight compensation, but more effectively than BLC. With WDR, all objects in the video have approximately the same brightness and clarity, so details are visible both in the foreground and in the background. This is achieved by capturing frames at different exposures and then combining them into one frame in which all objects have optimal brightness.

D-WDR: a software (digital) implementation of wide dynamic range, which performs slightly worse than true WDR.
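The multi-exposure idea behind WDR can be sketched in a few lines. This is one simple textbook-style approach under stated assumptions, not any manufacturer's algorithm: a long and a short exposure (here 16 times shorter) of the same scene are taken; wherever the long exposure is saturated, the short-exposure value scaled by the exposure ratio is used instead; the merged values are then compressed back into the 0-255 range with a logarithmic curve.

```python
import math


def merge_wdr(long_px, short_px, exposure_ratio, saturation=255):
    """Combine two exposures: keep the long one unless it is clipped, else use the scaled short one."""
    return [float(l) if l < saturation else s * exposure_ratio
            for l, s in zip(long_px, short_px)]


def tone_map(values, out_max=255):
    """Compress a wide range of values into 0..out_max with a log curve."""
    top = math.log1p(max(values))
    if top == 0:
        return [0] * len(values)
    return [round(out_max * math.log1p(v) / top) for v in values]


if __name__ == "__main__":
    RATIO = 16
    scene = [16, 160, 1600, 3200]                    # dark corner ... sunny window (arbitrary units)
    long_exp = [min(255, v) for v in scene]          # bright areas clip at 255
    short_exp = [min(255, round(v / RATIO)) for v in scene]
    merged = merge_wdr(long_exp, short_exp, RATIO)
    print("long  :", long_exp)
    print("short :", short_exp)
    print("merged:", merged)
    print("output:", tone_map(merged))
```

The long exposure alone loses the bright window (both bright values clip at 255), while the merged result keeps all four levels distinct.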

Protection classes IK (Vandal-proof, anti-vandal) and IP (from moisture and dust)

This parameter is important if you are choosing a camera for outdoor video surveillance or in a room with high humidity, dust, etc.

IP classes describe protection against the ingress of foreign objects of various sizes, including dust particles, and protection against moisture. IK classes describe anti-vandal protection, i.e. resistance to mechanical impact.

The most common protection classes among outdoor CCTV cameras are IP66, IP67 and IK10.

- Protection class IP66: the camera is completely dustproof and protected against powerful water jets (or heavy seas). Water may get inside in small quantities but does not interfere with the camera's operation.

- Protection class IP67: the camera is completely dustproof and can withstand temporary complete immersion in water or long periods under snow.

- Anti-vandal protection class IK10: the camera housing withstands the impact of a 5 kg mass dropped from a height of 40 cm (impact energy 20 J).
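The two digits of an IP code are read independently: the first (0-6) is protection against solid objects and dust, the second (0-8 in the basic table of IEC 60529) is protection against water. A small decoder with abbreviated descriptions (function names hypothetical), plus a check of the IK10 figure, where impact energy is m·g·h:

```python
SOLIDS = {
    "0": "not protected", "1": "objects > 50 mm", "2": "objects > 12.5 mm",
    "3": "objects > 2.5 mm", "4": "objects > 1 mm", "5": "dust-protected",
    "6": "dust-tight", "X": "not specified",
}
WATER = {
    "0": "not protected", "1": "vertically dripping water",
    "2": "dripping water, tilted up to 15°", "3": "spraying water",
    "4": "splashing water", "5": "water jets", "6": "powerful water jets",
    "7": "temporary immersion", "8": "continuous immersion", "X": "not specified",
}


def decode_ip(code):
    """Decode a code such as "IP67" into (solids, water) descriptions."""
    code = code.strip().upper()
    if not (code.startswith("IP") and len(code) == 4):
        raise ValueError(f"unexpected IP code: {code!r}")
    return SOLIDS[code[2]], WATER[code[3]]


def impact_energy_j(mass_kg, height_m, g=9.81):
    """Potential energy of a falling mass: E = m * g * h."""
    return mass_kg * g * height_m


if __name__ == "__main__":
    for c in ("IP66", "IP67"):
        print(c, "->", decode_ip(c))
    print(f"IK10 check: {impact_energy_j(5, 0.40):.1f} J")  # 5 kg from 40 cm
```

The result, about 19.6 J, agrees with the 20 J quoted for IK10.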

Hidden areas (Privacy Mask)

Sometimes certain areas within the camera's field of view need to be hidden from observation and recording, most often to protect privacy. Some camera models let you configure several such zones, covering one or more parts of the image.

For example, in the picture below, the windows of a neighboring house are hidden in the camera image.

Other functions of CCTV cameras (DIS, AGC, AWB, etc.)

OSD menu: an on-screen menu for manually setting many camera parameters: exposure, brightness, focal length (if the camera allows it), etc.

- shooting in low light conditions without infrared illumination.

DIS: digital image stabilization, for shooting under vibration or while the camera is moving.

EXIR Technology: an infrared illumination technology developed by Hikvision. It makes the IR illumination more efficient: longer range with lower power consumption, less light dispersion, etc.

AWB: automatic white balance adjustment, so that color rendition is as close as possible to what the human eye sees. Especially relevant for rooms with artificial lighting and mixed light sources.
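One classic approach to automatic white balance is the "gray world" assumption: on average, a scene is neutral gray, so each color channel is scaled until the three channel averages are equal. Cameras use their own, usually more elaborate algorithms; this is only a minimal illustration with a hypothetical function name and pixels given as (R, G, B) tuples.

```python
def gray_world_awb(pixels):
    """Scale R, G and B so that their averages become equal (gray-world white balance)."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(min(255, round(p[c] * gains[c])) for c in range(3)) for p in pixels]


if __name__ == "__main__":
    # Neutral gray objects under warm (yellowish) lamp light: red too strong, blue too weak.
    warm = [(200, 150, 100), (120, 90, 60), (240, 180, 120)]
    print(gray_world_awb(warm))
```

Here red is scaled by 0.75 and blue by 1.5, so the three objects come out neutral: [(150, 150, 150), (90, 90, 90), (180, 180, 180)].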

AGC: automatic gain control. It keeps the camera's output video signal stable regardless of the strength of the input signal. Most often the signal needs to be amplified in low light and attenuated when the lighting is too strong.

Motion Detector: with this function, the camera can record only when there is movement in the monitored area and can send an alarm signal when the detector is triggered. This saves storage space on the DVR, reduces the load on the transmission channel, and makes it possible to notify staff of a violation.
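A common basic technique for this is frame differencing: compare each frame with the previous one and raise an alarm when enough pixels have changed noticeably. The thresholds here are hypothetical tuning parameters; real detectors add zones, sensitivity settings and filtering against noise, rain and lighting changes.

```python
def motion_detected(prev, curr, pixel_threshold=25, area_fraction=0.01):
    """Frame-differencing motion detector for two grayscale frames (lists of rows, values 0..255).

    A pixel counts as changed if its brightness differs by more than pixel_threshold;
    motion is reported if the changed pixels exceed area_fraction of the frame.
    """
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total > area_fraction


if __name__ == "__main__":
    empty = [[50] * 100 for _ in range(100)]
    flicker = [[50 + (x % 5) for x in range(100)] for _ in range(100)]  # small brightness flicker only
    person = [row[:] for row in empty]
    for y in range(40, 80):
        for x in range(45, 55):
            person[y][x] = 180                                           # a bright object enters
    print(motion_detected(empty, flicker))  # False
    print(motion_detected(empty, person))   # True
```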

Camera alarm input: the ability to turn on the camera and start recording when an event occurs, such as the triggering of a motion sensor or another sensor connected to the camera.

Alarm output allows you to trigger a reaction to an alarm event recorded by the camera, for example, turn on the siren, send an alert by mail or SMS, etc.

Didn't find the feature you were looking for?

We tried to collect all the frequently encountered characteristics of video surveillance cameras. If you did not find an explanation of some parameter here that is unclear to you, write in the comments, we will try to add this information to the article.


We already wrote about matrices in our article on choosing a video camera for the family. There we touched on the topic only briefly; today we will try to describe both technologies in more detail.

What is the matrix in a video camera? It is a chip that converts a light signal into an electrical one. Today there are two technologies, that is, two types of matrices: CCD and CMOS. They differ from each other, and each has its own pros and cons. It is impossible to say for sure which one is better and which is worse; they develop in parallel. We will not go into technical details, since they would be hard to follow, but we will outline their main pros and cons.

CMOS technology

CMOS matrices boast, first of all, low power consumption, which is a plus: a video camera with this technology will run a little longer (depending on battery capacity). But this is a minor point.

The main difference and advantage is random-access reading of cells (in a CCD, the charges of all cells are shifted out together), which eliminates smearing of the picture. Have you ever seen "vertical pillars of light" above point-like bright objects? CMOS matrices rule out their appearance. Cameras based on them are also cheaper.

There are also disadvantages. The first is the small size of the photosensitive element relative to the pixel size: most of the pixel area is occupied by electronics, so the photosensitive area shrinks and the sensitivity of the matrix decreases.

Since signal processing is done right at the pixel, the amount of noise in the picture increases. This is also a disadvantage, as is the relatively slow line-by-line readout, which causes the "rolling shutter" effect: when the operator moves the camera, objects in the frame may be distorted.
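The rolling shutter distortion follows from line-by-line readout: row k is captured k line-times after the first row, so an object moving horizontally is shifted a little further in each lower row, and vertical edges come out slanted. A sketch with an assumed full-frame readout time of 1/30 s and 1080 rows (illustrative values that vary by sensor); the function name is hypothetical.

```python
def rolling_shutter_shift_px(row, n_rows, readout_s, speed_px_s):
    """Horizontal shift of a moving object in a given row relative to row 0."""
    line_time_s = readout_s / n_rows  # delay between the readout of adjacent rows
    return speed_px_s * row * line_time_s


if __name__ == "__main__":
    ROWS, READOUT_S = 1080, 1 / 30  # assumed sensor height and full-frame readout time
    for row in (0, 540, 1079):
        shift = rolling_shutter_shift_px(row, ROWS, READOUT_S, speed_px_s=1000)
        print(f"row {row:4}: shifted by {shift:5.1f} px")
```

An object crossing the frame at 1000 pixels per second ends up about 33 pixels further along at the bottom of the frame than at the top.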

CCD technology

Video cameras with CCD matrices produce high-quality images. It is easy to see that video from a CCD-based camcorder has less noise than video from a CMOS camera. This is the first and most important advantage. There is one more: the efficiency of CCD matrices is remarkable: the fill factor approaches 100%, and up to 95% of incoming photons are registered. For comparison, in the ordinary human eye this figure is about 1%.

The disadvantages of these matrices are their high price and high power consumption. The point is that the readout process is very complex: image capture relies on many additional mechanisms that CMOS matrices do not have, which is why CCD technology is significantly more expensive.

CCD matrices are used in devices that need high-quality color images and may have to shoot dynamic scenes: mostly professional video cameras, although there are consumer ones too, as well as surveillance systems, digital cameras, etc.

CMOS matrices were used where picture quality requirements are not particularly high: motion sensors, inexpensive smartphones... But that was in the past. Modern CMOS matrices come in various improved designs, which makes them high-quality and able to compete with CCD matrices.

Today it is hard to say which technology is better, since both deliver excellent results. So using the matrix type as the only selection criterion is unwise, to say the least. Many characteristics need to be taken into account.

The CCD principle, with its idea of storing and then reading out electronic charges, was first developed by two engineers at Bell Labs in the late 1960s, while searching for new types of computer memory that could replace ferrite-core memory (yes, such memory really existed). As a memory technology the idea turned out to be a dead end, but the ability of silicon to respond to visible light was noticed, and the idea of using this principle for imaging was developed.

Let's start with deciphering the term.

The abbreviation CCD stands for "charge-coupled device".

This type of device currently has a very wide range of applications in a wide variety of optoelectronic devices for image recording. In everyday life these are digital cameras, video cameras, and various scanners.

What distinguishes a CCD receiver from a conventional semiconductor photodiode, which has a photosensitive pad and two electrical contacts for picking up an electrical signal?

First, a CCD receiver has a very large number of such photosensitive areas (often called pixels: elements that receive light and convert it into electric charge), from several thousand to several hundred thousand and even several million. All pixels are the same size, which can range from a few to tens of microns. The pixels can be arranged in a single row, in which case the receiver is called a CCD array, or they can fill an area of the surface in regular rows, in which case it is called a CCD matrix.

Location of light-receiving elements (blue rectangles) in the CCD array and CCD matrix.

Second, a CCD receiver, which looks like an ordinary chip, does not have the huge number of electrical contacts that would seem to be needed to output a signal from each light-receiving element. Instead, an electronic circuit connected to the CCD receiver extracts from each photosensitive element an electrical signal proportional to its illumination.

The operation of a CCD can be described as follows. Each light-sensitive element (pixel) works like a piggy bank for electrons. Electrons are created in the pixels by incoming light. Over a given period of time, each pixel gradually fills with electrons in proportion to the amount of light falling on it, like a bucket left outside in the rain. At the end of this time, the charges accumulated by the pixels are transferred in turn to the device's "output" and measured. All this is possible thanks to the specific structure of the crystal containing the light-sensitive elements and to the control circuitry.

A CCD matrix works almost exactly the same way. After exposure (illumination by the projected image), the control electronics apply a complex sequence of voltage pulses to the matrix. These pulses shift the columns of electrons accumulated in the pixels toward the edge of the matrix, where a similar measuring CCD register is located. In that register, the charges are shifted in the perpendicular direction onto the measuring element, creating signals in it proportional to the individual charges. Thus, at each successive moment we obtain the value of an accumulated charge and can work out which pixel of the matrix (row number and column number) it belongs to.
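The readout order described above can be modeled with plain lists. This is a hypothetical, idealized model (no charge loss, no noise): on each parallel shift, the row nearest the serial register moves into it while all other rows move one step closer; the serial register is then clocked out one pixel at a time into the measuring node. Because the number of clock pulses is known, each measured value can be mapped back to its (row, column).

```python
def ccd_readout(image):
    """Return (row, column, charge) samples in the order an idealized CCD would output them.

    image[r][c] is the charge in pixel (r, c); the last row is next to the serial
    register, and column 0 is next to the output (measuring) node.
    """
    rows = [list(r) for r in image]
    samples = []
    for shift in range(len(image)):
        serial_register = rows.pop()  # parallel transfer: every row moves one step closer
        row_index = len(image) - 1 - shift
        col = 0
        while serial_register:
            charge = serial_register.pop(0)  # serial transfer: nearest pixel reaches the output node
            samples.append((row_index, col, charge))
            col += 1
    return samples


def reconstruct(samples, n_rows, n_cols):
    """Rebuild the image from the sample stream using the known readout order."""
    image = [[0] * n_cols for _ in range(n_rows)]
    for r, c, q in samples:
        image[r][c] = q
    return image


if __name__ == "__main__":
    frame = [[1, 2, 3],
             [4, 5, 6]]
    stream = ccd_readout(frame)
    print([q for _, _, q in stream])          # [4, 5, 6, 1, 2, 3]
    print(reconstruct(stream, 2, 3) == frame)  # True
```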

Briefly about the physics of the process.

To begin with, note that CCDs belong to so-called functional electronics: they cannot be represented as a collection of individual components such as transistors, resistors and capacitors. Their operation is based on the principle of charge coupling, which relies on two facts known from electrostatics:

- like charges repel each other;

- charges tend to settle where their potential energy is minimal. Roughly speaking, as the saying goes, "the fish seeks deeper water."

First, imagine a MOS capacitor (MOS stands for metal-oxide-semiconductor). This is what remains of a MOS transistor if you remove its drain and source: just an electrode separated from the silicon by a dielectric layer. For definiteness, assume that the semiconductor is p-type, i.e. under equilibrium conditions the concentration of holes is much (several orders of magnitude) greater than that of electrons. (A hole is a missing electron that behaves as a positive charge carrier.)

What happens if a positive potential is applied to this electrode (called the gate)? The electric field of the gate penetrates through the dielectric into the silicon and repels the mobile holes, creating a depletion region: a volume of silicon free of majority carriers. With substrate parameters typical of CCDs, this region is about 5 μm deep. Electrons generated here by light, on the contrary, are attracted to the gate and accumulate at the oxide-silicon interface directly under it, i.e. they fall into the potential well (Fig. 1).

Fig. 1. Formation of a potential well when voltage is applied to the gate.

As electrons accumulate in the well, they partially neutralize the electric field created in the semiconductor by the gate, and eventually they can compensate it completely, so that the entire field falls across the dielectric alone and everything returns to the initial state, except that a thin layer of electrons has formed at the interface.

Now let another gate be located next to the first, with a positive potential applied to it as well, and a larger one than on the first gate (Fig. 2). If the gates are close enough, their potential wells merge, and the electrons in one well move to the neighboring one if it is "deeper".

Fig. 2. Overlapping potential wells of two closely spaced gates. The charge flows to where the potential well is deeper.

It should now be clear that with a chain of gates, applying appropriate control voltages makes it possible to move a localized charge packet along the structure. A remarkable property of CCDs, the self-scanning property, is that only three clock buses are enough to control a chain of gates of any length. (In electronics, a bus is a conductor connecting elements of the same type; clock buses are the conductors that carry the phase-shifted clock voltages.) Indeed, three electrodes are necessary and sufficient to transfer charge packets: one transmitting, one receiving and one isolating, which separates the receiving-transmitting pairs from each other. The corresponding electrodes of all such triplets can be connected to a common clock bus that needs only one external pin (Fig. 3).

Fig. 3. The simplest three-phase CCD register. The charge in each potential well is different.

This is the simplest three-phase CCD shift register. Its clock timing diagrams are shown in Fig. 4.

Fig. 4. Timing diagrams for controlling a three-phase register: three square waves shifted by 120 degrees. When the potentials change, the charges move.
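The waveforms in Fig. 4 can be modelled as three square waves with a 50% duty cycle, each delayed by 120° relative to the previous one. The sketch below samples them and checks that at every instant at least one bus is high and at least one is low. The function name `clock_levels` is illustrative, and the 50% duty cycle is an assumption about the figure.

```python
def clock_levels(angle_deg):
    """Levels (1 = high, 0 = low) of phases 1..3 at a given clock angle.

    Each phase is assumed to be a 50% duty-cycle square wave delayed by
    120 degrees relative to the previous one.
    """
    return tuple(1 if (angle_deg - 120 * k) % 360 < 180 else 0 for k in range(3))

# At every instant: at least one high (well) bus and at least one low (barrier) bus.
assert all(1 <= sum(clock_levels(a)) <= 2 for a in range(360))

# The six distinct states of one period, sampled in the middle of each 60-degree interval.
for a in range(30, 360, 60):
    print(a, clock_levels(a))
```

The sampled states alternate between two high phases and one: (1, 0, 1), (1, 0, 0), (1, 1, 0), (0, 1, 0), (0, 1, 1), (0, 0, 1). A packet therefore first spreads under two adjacent high gates and then collects under the one that stays high.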

It can be seen that, for the register to work properly, at every moment at least one clock bus must be at a high potential and at least one at a low (barrier) potential. When the potential rises on one bus and falls on the other (preceding) one, all charge packets are transferred simultaneously to the adjacent gates. Over a full cycle (one period on each phase bus), the charge packets are shifted by one register element.
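The transfer mechanism can be illustrated with a small simulation. This is a simplified sketch. The clocking is idealized so that exactly one phase is high at a time, which skips the overlapping intervals of the real waveforms. Each register element is modelled as three gates, and the function name `shift_out` is hypothetical.

```python
def shift_out(packets, n_cycles):
    """Idealized three-phase CCD register.

    packets[k] is the charge held under the phase-1 gate of element k;
    element len(packets) - 1 is next to the output. Returns the charges
    that reach the output, in the order they arrive.
    """
    gates = [0] * (3 * len(packets))   # gate g is wired to clock bus g % 3
    for k, q in enumerate(packets):
        gates[3 * k] = q
    out = []
    high = 0                           # index of the phase bus that is currently high
    for _ in range(3 * n_cycles):      # three clock steps = one full cycle
        new = [0] * len(gates)
        for g, q in enumerate(gates):
            if g % 3 != high:
                new[g] += q            # gates on the low buses hold no charge; copied as-is
            elif g + 1 < len(gates):
                new[g + 1] += q        # next phase rises, this one falls: packet moves one gate
            else:
                out.append(q)          # packet leaves the last gate into the output node
        gates = new
        high = (high + 1) % 3
    return out

print(shift_out([5, 0, 7], 3))   # -> [7, 0, 5]
```

Each full cycle shifts every packet by one element, so reading out an n-element register takes n cycles, and the packet nearest the output arrives first. The gates are driven only through `g % 3`, i.e. by three buses regardless of register length, which is the self-scanning property described above.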

To confine charge packets in the transverse direction, so-called channel stops (stop channels) are formed. These are narrow strips with an increased concentration of the main dopant that run along the transfer channel (Fig. 5).

Fig. 5. Top view of the register. The transfer channel is bounded laterally by stop channels.

The point is that the dopant concentration determines the gate voltage at which a depletion region forms under the gate (this parameter is simply the threshold voltage of the MOS structure). Intuitively, the higher the dopant concentration, i.e., the more holes there are in the semiconductor, the harder it is to push them deeper into the bulk. Hence the threshold voltage is higher or, at a given gate voltage, the potential in the well is lower.
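To make this intuitive argument concrete, here is a sketch using the textbook long-channel threshold-voltage expression for a MOS structure on a p-type substrate: V_T = V_FB + 2φ_F + sqrt(4 ε_Si q N_A φ_F) / C_ox, with φ_F = (kT/q) ln(N_A / n_i). The oxide thickness (100 nm), flat-band voltage (0 V), and intrinsic carrier density (1×10^10 cm^-3) are assumed values. Real CCDs, especially buried-channel devices, deviate from this simple model.

```python
import math

Q = 1.602e-19               # elementary charge, C
EPS_SI = 11.7 * 8.854e-14   # silicon permittivity, F/cm
EPS_OX = 3.9 * 8.854e-14    # SiO2 permittivity, F/cm
KT_Q = 0.02585              # thermal voltage at ~300 K, V
N_I = 1.0e10                # intrinsic carrier density of Si, cm^-3 (assumed)

def threshold_voltage(n_a_cm3, t_ox_cm=100e-7, v_fb=0.0):
    """Long-channel MOS threshold voltage for a p-type substrate (textbook model)."""
    phi_f = KT_Q * math.log(n_a_cm3 / N_I)                # Fermi potential, V
    c_ox = EPS_OX / t_ox_cm                               # oxide capacitance per area, F/cm^2
    q_dep = math.sqrt(4 * EPS_SI * Q * n_a_cm3 * phi_f)   # depletion charge at threshold, C/cm^2
    return v_fb + 2 * phi_f + q_dep / c_ox

for n_a in (1e14, 1.1e14, 1e15):
    print(f"N_A = {n_a:.1e} cm^-3 -> V_T = {threshold_voltage(n_a):.3f} V")
```

In this model, raising N_A always raises V_T. With the assumed parameters, a 10% change in doping shifts V_T by roughly ten millivolts.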

Problems

In digital chip manufacturing, device parameters can vary across the wafer by a factor of several without noticeably affecting the finished devices, because they operate on discrete voltage levels. In a CCD, however, a change of, say, 10% in dopant concentration is already visible in the image. The large die size and the impossibility of using redundancy, as is done in LSI memory, add further problems: any defective area makes the entire die unusable.

Bottom line

Because of manufacturing variations, different pixels of a CCD array have different sensitivity to light, and this difference must be corrected.

In digital copiers and multifunction devices, this correction is performed by the Auto Gain Control (AGC) system.

How the AGC system works

For simplicity, let us not tie the discussion to any specific device. Assume that the ADC of the CCD unit outputs certain levels, and that 60 is the average white level.

- For each pixel of the CCD line, a value is read while the line is illuminated with reference white light (more advanced devices also read the “black level”).

- The value is compared to a reference level (for example, the average).

- The difference between the output value and the reference level is stored for each pixel.

- Later, during scanning, this difference is compensated for each pixel.
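The steps above can be sketched as follows, using the example white level of 60 from the text. The first two functions follow the description literally and store an additive per-pixel difference. The `two_point_correct` function is an assumed variant for devices that also read a black level: it maps each pixel's black-to-white range onto 0 to 60, as in common flat-field correction. All function names are illustrative.

```python
REFERENCE_WHITE = 60  # example average white level from the text

def calibrate(white_line, reference=REFERENCE_WHITE):
    """Store, for each pixel, the difference between the reference and its white reading."""
    return [reference - w for w in white_line]

def correct(raw_line, diffs):
    """During scanning, compensate each pixel by its stored difference."""
    return [r + d for r, d in zip(raw_line, diffs)]

def two_point_correct(raw_line, black_line, white_line, reference=REFERENCE_WHITE):
    """Assumed gain/offset variant: map each pixel's black..white range onto 0..reference."""
    out = []
    for r, b, w in zip(raw_line, black_line, white_line):
        span = w - b
        out.append(reference * (r - b) / span if span > 0 else 0)  # dead pixel -> 0
    return out

white = [58, 60, 63]            # uneven white readings of three pixels
diffs = calibrate(white)        # per-pixel differences: [2, 0, -3]
print(correct(white, diffs))    # -> [60, 60, 60]
```

The additive form only removes an offset. When pixels differ mainly in gain, a stored ratio (as in the two-point variant) keeps mid-tones correct as well as white.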

The AGC system is initialized every time the scanner is initialized. You have probably noticed that shortly after the machine is switched on, the scanner carriage moves back and forth, passing over the black and white reference strips. This is the AGC initialization. The system also compensates for the condition of the lamp (aging).

You have probably also noticed that small MFPs with a color scanner “light the lamp” in three colors in turn: red, blue and green, and only after that does the illumination of the original turn white. This makes it possible to correct the sensor's sensitivity separately for each of the R, G and B channels.

The halftone test (SHADING TEST) lets the service engineer run this procedure on demand and bring the correction values in line with actual conditions.

Let's look at all this on a real, working machine, taking a well-known and popular device as an example: the SAMSUNG SCX-4521 (Xerox PE220).

Note that in our case the CCD is replaced by a CIS (Contact Image Sensor), but this does not fundamentally change what happens; the difference is simply that rows of LEDs serve as the light source.

So:

The image signal from the CIS has a level of about 1.2 V and is fed to the ADC section of the device controller (SADC), where the analog CIS signal is converted into an 8-bit digital signal.
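An idealized model of this conversion is shown below. It assumes that the ADC's full-scale input equals the 1.2 V signal level (the text only says “about 1.2 V”) and that the transfer is perfectly linear, with codes 0 to 255 spanning 0 to 1.2 V. One 8-bit step is then about 4.7 mV. The names `FULL_SCALE_V` and `adc_code` are illustrative.

```python
FULL_SCALE_V = 1.2  # assumed ADC full-scale input, matching the ~1.2 V CIS signal
BITS = 8

def adc_code(v_in, full_scale=FULL_SCALE_V, bits=BITS):
    """Ideal unipolar ADC: clamp to [0, full_scale] and quantize to 2**bits levels."""
    max_code = (1 << bits) - 1                # 255 for 8 bits
    v = min(max(v_in, 0.0), full_scale)       # out-of-range inputs saturate
    return round(v / full_scale * max_code)

lsb_mv = FULL_SCALE_V / ((1 << BITS) - 1) * 1000
print(f"1 LSB = {lsb_mv:.2f} mV")
print([adc_code(v) for v in (0.0, 0.3, 1.2, 1.5)])
```

This step size also suggests why a dopant-related sensitivity spread of a few percent is already visible after digitization: on a 0 to 255 scale, it amounts to several codes.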

The image processor in the SADC first applies tone correction and then gamma correction. The data is then routed to different modules depending on the operating mode. In Text mode it goes to the LAT module, in Photo mode to the "Error Diffusion" module, and in PC-Scan mode directly to the personal computer via DMA.
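The processing order described above can be sketched as follows. This is a hypothetical model, not the device's firmware. The tone curve, gamma value (2.2), and threshold level are assumptions. The LAT module is replaced by a plain threshold because its internals are not described here, and the Error Diffusion module is replaced by simple one-dimensional error diffusion along a scan line. All function names are illustrative.

```python
def make_tone_lut(black=10, white=245):
    """Assumed tone curve: linear stretch mapping [black, white] onto [0, 255]."""
    return [max(0, min(255, round((i - black) * 255 / (white - black)))) for i in range(256)]

def make_gamma_lut(gamma=2.2):
    """8-bit gamma-correction lookup table (gamma value is an assumption)."""
    return [round(255 * (i / 255) ** (1 / gamma)) for i in range(256)]

def threshold(line, level=128):
    """Stand-in for the Text-mode (LAT) path: bilevel output."""
    return [1 if p >= level else 0 for p in line]

def error_diffuse(line, level=128):
    """Stand-in for the Photo-mode path: 1-D error diffusion along a scan line."""
    out, err = [], 0.0
    for p in line:
        v = p + err
        bit = 1 if v >= level else 0
        out.append(bit)
        err = v - 255 * bit  # carry the quantization error to the next pixel
    return out

TONE, GAMMA = make_tone_lut(), make_gamma_lut()

def process_line(raw_line, mode):
    line = [GAMMA[TONE[p]] for p in raw_line]  # tone correction first, then gamma
    if mode == "text":
        return threshold(line)
    if mode == "photo":
        return error_diffuse(line)
    if mode == "pc-scan":
        return line  # passed on as 8-bit data; the DMA transfer itself is not modelled
    raise ValueError(f"unknown mode: {mode}")

print(process_line([0, 40, 128, 200, 255], "text"))
print(error_diffuse([128] * 4))  # flat mid-gray -> alternating dots: [1, 0, 1, 0]
```

Error diffusion keeps the average density of a gray area while producing only black and white dots, which suits photographs. A hard threshold gives sharper edges, which suits text.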

Before testing, place several blank sheets of white paper on the exposure glass. It goes without saying that the optics, the black-and-white reference strip and the inside of the scanner assembly in general must first be cleaned thoroughly.

- Select the shading test in TECH MODE.

- Press the ENTER button to scan the image.

- After scanning, a "CIS SHADING PROFILE" is printed. An example of such a sheet is shown below. Your result does not have to match it exactly, but it should look similar.

- If the printout differs greatly from the one in the illustration, the CIS is faulty. Note the line “Results: OK” at the bottom of the report: it means the system found no serious problems with the CIS module. Otherwise, error results are reported.

Example of a profile printout:

Good luck!

Based on articles and lectures by faculty of St. Petersburg State University (LSU), St. Petersburg Electrotechnical University (LETI), and Axl. Many thanks to them.

Material prepared by V. Schelenberg